Billions of pixels are captured every second from cameras, phones, and sensors. On their own, they’re only raw data, without much value. However, computer vision, a branch of artificial intelligence (AI), can turn that raw visual data into meaningful information and insights. This is a game-changer for many use cases, such as navigation in autonomous vehicles and online content moderation.

These systems use AI models to analyze visual streams in real time. They can perform tasks like detecting objects, recognizing patterns, and making decisions that reduce the need for constant human supervision.

But building an AI model is only part of the story; the real challenge is keeping it accurate and reliable as new data flows in. Without a proper structure, retraining AI models and reorganizing datasets (collections of data) quickly becomes overwhelming.

This is where Machine Learning Operations (MLOps) makes the difference. MLOps provides the framework that brings together data engineering, model development, deployment, and monitoring. You can think of MLOps like an airport control tower. It coordinates pilots (data scientists), planes (AI models), runways (deployment), and radar (monitoring) so everything works together smoothly and safely.

MLOps integrates data science, machine learning, and DevOps.

MLOps keeps computer vision systems running in sync, allowing them to scale without breaking down under increasing complexity. To make this possible, it relies on key practices. Among them, two stand out as especially important: dataset versioning and continuous validation.

In this article, we’ll explore these key MLOps practices and how they can help build computer vision workflows that are both reliable and scalable. Let’s get started!

Before learning about MLOps, let’s get a better understanding of what DevOps involves. ‘Development and Operations’ (DevOps) is a combination of culture, processes, and tools that help organizations deliver software and services faster and more reliably.

A DevOps team brings together software developers and IT operations to work closely throughout the product lifecycle. Instead of working separately, the two groups often merge into one team where engineers handle everything from building and testing to launching and maintaining applications. DevOps relies on automation tools that speed up work and make systems more reliable. These tools support key practices like continuous integration and continuous delivery, while the shared team structure encourages collaboration.

You might be wondering how DevOps is related to MLOps. MLOps takes DevOps concepts, such as automation, version control, and continuous delivery, and applies them to machine learning and AI projects. While DevOps focuses on delivering software quickly and reliably, MLOps also handles the extra challenges of working with data and AI models.

When it comes to computer vision, MLOps creates workflows that guide an AI model through its entire lifecycle. The primary goal of MLOps is to ensure that AI models can be reproduced, scaled, and maintained with minimal confusion or wasted effort. Think of it like a well-run kitchen: recipes (workflows) guide chefs (data scientists) so every dish (AI model) can be reliably recreated, scaled up for more guests, and kept consistent over time.

MLOps helps teams trace results back to the exact data and setup used, so work is always reproducible. Automated pipelines help scale models quickly, whether in the cloud or on devices. By giving engineers, data scientists, and business teams a shared framework, overall collaboration improves. Since everything is tracked and documented, meeting compliance requirements becomes much simpler. These benefits aren’t theoretical; they are making a real difference in AI adoption.

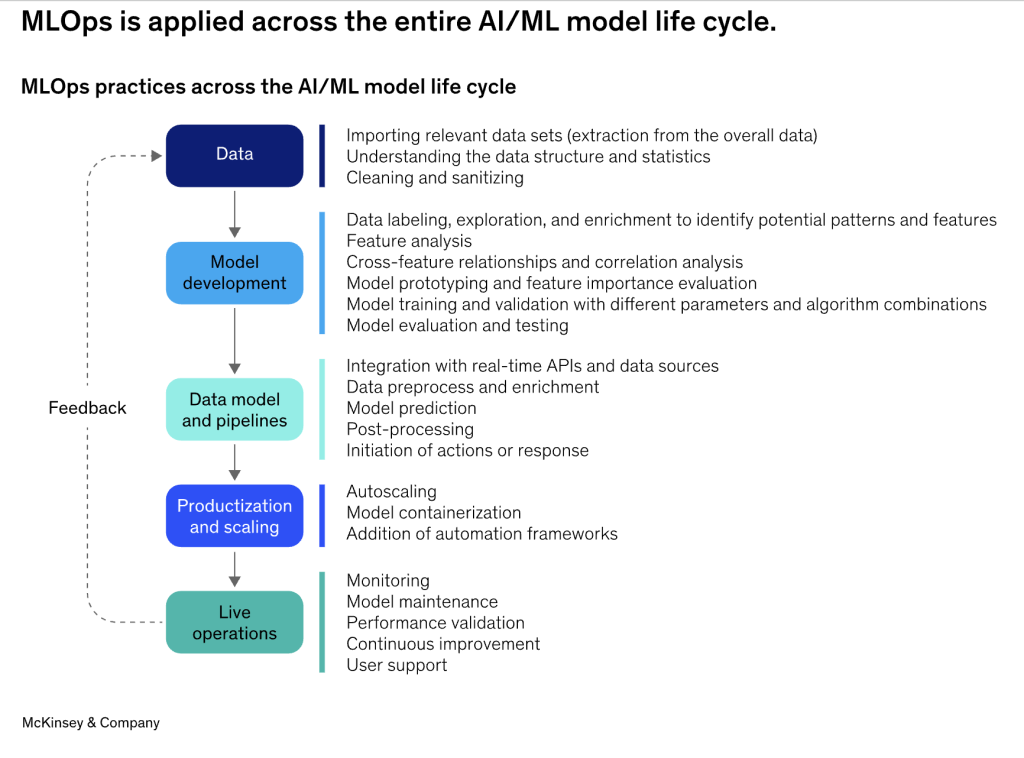

MLOps is applied across the entire AI/ML model lifecycle. (Source)

For example, in medical research, the NaIA-RD project improved diabetic retinopathy screening by applying AI with MLOps. The system enhanced fundus images (photos of the back of the eye, including the retina, optic disc, and macula), detected disease, and integrated seamlessly into hospital systems.

To ensure reliability, the project used modular microservices, thorough testing, and continuous monitoring. Feedback loops addressed low-quality images and dataset drift, leading to more accurate detection and smoother adoption.

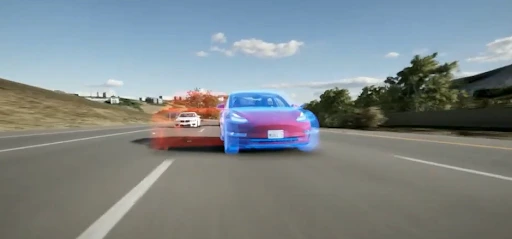

Now that we understand what MLOps is and its role in computer vision, let’s look at another example: Tesla applies MLOps to power and improve the vision AI systems behind its autonomous driving features.

Every car in Tesla’s fleet runs a “shadow mode” network alongside the driver. In shadow mode, the network observes the road and makes predictions in real time without controlling the car, letting engineers collect data, compare AI predictions with the driver’s actual actions, and improve models safely. When the model’s prediction disagrees with what the driver did, the system records the event and uploads that data. Engineers then group these cases into structured, versioned datasets and use them to retrain Tesla’s Autopilot systems.

Updated models are then tested again in shadow mode before the final release. This closed feedback loop, which involves collecting, versioning, retraining, and validating, shows how MLOps keeps Tesla’s computer vision models reliable and adaptive to new driving scenarios.

Shadow Mode in Tesla Cars. (Source)
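To make the collect-and-version step of this loop more concrete, here is a minimal Python sketch. It is not Tesla’s implementation: the `Frame` and `DisagreementLog` classes, the action labels, and the version string are hypothetical names chosen for illustration, and a real system would compare far richer signals than a single action label.

```python
from dataclasses import dataclass, field


@dataclass
class Frame:
    # Hypothetical record of one moment of driving.
    frame_id: str
    model_action: str   # what the shadow-mode model predicted it would do
    driver_action: str  # what the human driver actually did


@dataclass
class DisagreementLog:
    # Hypothetical collector for frames where model and driver disagree.
    events: list[Frame] = field(default_factory=list)

    def record(self, frame: Frame) -> bool:
        """Keep the frame only if the model's prediction differs from the driver's action."""
        if frame.model_action != frame.driver_action:
            self.events.append(frame)
            return True
        return False

    def to_dataset(self, version: str) -> dict:
        """Package the collected disagreements as a named, versioned batch for retraining."""
        return {"version": version, "frame_ids": [f.frame_id for f in self.events]}


log = DisagreementLog()
for frame in [
    Frame("f1", "brake", "brake"),
    Frame("f2", "keep_lane", "brake"),
    Frame("f3", "turn_left", "keep_lane"),
]:
    log.record(frame)

print(log.to_dataset("shadow-v1"))
# {'version': 'shadow-v1', 'frame_ids': ['f2', 'f3']}
```

Only the disagreements are kept, which keeps the retraining batch small and focused on the cases where the model still has something to learn.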

Next, let’s take a look at two important MLOps practices: dataset versioning and continuous validation. We’ll start with dataset versioning.

In AI projects, datasets often change over time. New images get added, or existing labels and annotations are corrected, so the AI model can learn more accurately. Without dataset version control, it becomes difficult to know which dataset was used to train a model. This can lead to problems like dataset drift, where small, untracked changes in the data make results unstable or hard to reproduce.

Dataset versioning solves this by keeping track of every dataset update, making it possible to reproduce experiments, roll back to earlier versions if new data hurts performance, and record important details like labeling methods, data sources, and preprocessing steps. You can think of dataset versioning like keeping photo albums for each year. You can always go back and see exactly what everything looked like at a specific time.
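As a rough illustration of what dataset versioning records, the sketch below builds a manifest that maps every file to a SHA-256 content hash and stores metadata such as the data source, labeling method, and preprocessing step. The function names and metadata fields are hypothetical; in practice, teams often rely on dedicated tools such as DVC, and this sketch does not reflect any tool’s API.

```python
import hashlib
import json


def build_manifest(files: dict[str, bytes], metadata: dict) -> dict:
    """Map each file path to its SHA-256 content hash and derive a version ID."""
    entries = {path: hashlib.sha256(content).hexdigest() for path, content in sorted(files.items())}
    # Hashing the entries together with the metadata means any file or metadata
    # change produces a different version ID.
    payload = json.dumps({"files": entries, "metadata": metadata}, sort_keys=True)
    version_id = hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]
    return {"version": version_id, "files": entries, "metadata": metadata}


def diff_manifests(old: dict, new: dict) -> dict:
    """List files added, removed, or changed between two dataset versions."""
    old_files, new_files = old["files"], new["files"]
    return {
        "added": sorted(new_files.keys() - old_files.keys()),
        "removed": sorted(old_files.keys() - new_files.keys()),
        "changed": sorted(
            path for path in old_files.keys() & new_files.keys()
            if old_files[path] != new_files[path]
        ),
    }


# Hypothetical file contents stand in for real images and label files.
meta = {"source": "camera-A", "labeling": "manual", "preprocessing": "resize-640"}
v1 = build_manifest({"img/001.jpg": b"<image bytes>", "labels/001.txt": b"0 0.50 0.50 0.20 0.20"}, meta)
v2 = build_manifest(
    {
        "img/001.jpg": b"<image bytes>",
        "labels/001.txt": b"0 0.50 0.50 0.30 0.30",  # corrected bounding box
        "img/002.jpg": b"<new image bytes>",
    },
    meta,
)

print(v1["version"] != v2["version"])  # True
print(diff_manifests(v1, v2))
# {'added': ['img/002.jpg'], 'removed': [], 'changed': ['labels/001.txt']}
```

Because the version ID is derived from the file hashes and metadata, correcting a single label produces a new version, and the diff shows exactly which files changed. Keeping older manifests around is what makes rolling back possible.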

Continuous validation is another MLOps practice: it automatically evaluates vision models against new or updated labeled data to make sure they remain accurate and reliable.

Computer vision models can degrade in performance over time. This often shows up when they face challenging situations that differ from their training data, such as new environmental conditions, lighting, camera angles, or object variations.

Running continuous validation checks helps teams catch this kind of degradation before it impacts the system. The practice is common in real-world applications. For example, autonomous vehicles rely on continuous validation of their object detection systems, where even small errors can have serious safety consequences.
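A continuous validation check can be as simple as re-scoring the model on freshly labeled data, split into slices such as daylight and night images, and blocking a release when any slice falls below a floor or drops too far below its previous score. The sketch below assumes classification-style labels and uses plain accuracy as a stand-in for detection metrics like mAP; the slice names and the `min_accuracy` and `max_drop` thresholds are illustrative assumptions.

```python
def accuracy(predictions: list[str], labels: list[str]) -> float:
    """Fraction of predictions that match the ground-truth labels."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and the same length")
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def validation_gate(
    results_by_slice: dict[str, tuple[list[str], list[str]]],
    baseline: dict[str, float],
    min_accuracy: float = 0.90,
    max_drop: float = 0.02,
) -> list[str]:
    """Return a description of every failing slice; an empty list means the model passes."""
    failures = []
    for slice_name, (predictions, labels) in results_by_slice.items():
        score = accuracy(predictions, labels)
        previous = baseline.get(slice_name)
        too_low = score < min_accuracy
        regressed = previous is not None and score < previous - max_drop
        if too_low or regressed:
            failures.append(f"{slice_name}: accuracy {score:.2f}")
    return failures


# Hypothetical predictions on freshly labeled images, grouped by condition.
results = {
    "daylight": (["car", "person", "car", "bike"], ["car", "person", "car", "bike"]),
    "night": (["car", "car", "car", "car", "car"], ["car", "person", "car", "car", "bike"]),
}
baseline = {"daylight": 0.97, "night": 0.93}  # scores from the previous release

failures = validation_gate(results, baseline)
print(failures)  # ['night: accuracy 0.60']
```

Checking slices separately matters because an overall average can hide a sharp drop under one condition, such as poor lighting.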

Continuous validation can be done in a few ways:

Data labeling and annotation can be compared to assigning unique names to streets and places on a map. In computer vision, the objects in images are labeled to show what they actually are, which helps AI models learn the patterns needed to recognize those objects.

However, if a model is trained on incorrectly labeled data, it learns the wrong patterns and makes wrong predictions. It’s like getting lost in a city because you used a mislabeled map with incorrect street names.

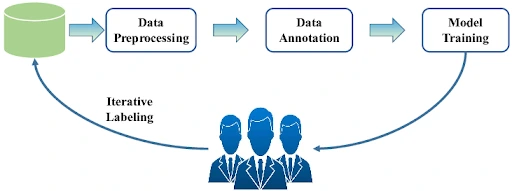

There are three primary approaches to data labeling and annotation: manual annotation, semi-automatic data annotation, and automatic data annotation. Manual annotation relies on people (annotators) to label each image or frame, which produces high-quality data but is time-consuming. Semi-automatic data annotation, on the other hand, speeds up the process by using AI tools to pre-label data. This frees up a lot of time for annotators, who only need to review and correct the suggested labels.

A Data Labeling and Annotation Process That Uses Human Reviews. (Source)

On top of this, there’s fully automatic data annotation, where AI assigns labels on its own. This method is quick and can handle large amounts of data, but it is prone to mistakes as there is no human oversight. That’s why humans in the loop are still essential, as they review, correct, and guide automated tools to keep the data accurate and trustworthy.
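One common way to combine semi-automatic annotation with human review is to route model pre-labels by confidence: high-confidence suggestions are accepted, and the rest go to annotators, least confident first. The sketch below assumes the pre-labeling model outputs a confidence score between 0 and 1; the `PreLabel` class and the 0.95 cutoff are hypothetical choices, and many teams also spot-check a sample of the accepted labels.

```python
from dataclasses import dataclass


@dataclass
class PreLabel:
    # Hypothetical suggestion produced by a pre-labeling model.
    image_id: str
    label: str
    confidence: float  # model confidence in the suggested label, from 0 to 1


def route_prelabels(
    prelabels: list[PreLabel], auto_accept: float = 0.95
) -> tuple[list[PreLabel], list[PreLabel]]:
    """Split suggestions into auto-accepted labels and a queue for human review."""
    accepted, review_queue = [], []
    for p in prelabels:
        if p.confidence >= auto_accept:
            accepted.append(p)
        else:
            review_queue.append(p)
    # Put the least confident suggestions first, as they are the most likely to need correction.
    review_queue.sort(key=lambda p: p.confidence)
    return accepted, review_queue


batch = [
    PreLabel("img1", "car", 0.99),
    PreLabel("img2", "person", 0.62),
    PreLabel("img3", "bike", 0.81),
    PreLabel("img4", "car", 0.97),
]
accepted, review_queue = route_prelabels(batch)
print([p.image_id for p in accepted])      # ['img1', 'img4']
print([p.image_id for p in review_queue])  # ['img2', 'img3']
```

Raising `auto_accept` sends more work to humans and lowers the risk of unchecked mistakes; lowering it does the opposite.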

With a better understanding of MLOps and its practices, let’s take a look at the challenges companies face when trying to scale it for computer vision projects. Here are several key challenges to consider:

Partnering with the right data experts makes these challenges easier to manage. At Objectways, we help teams structure, label, and review data so computer vision pipelines can scale with confidence.

MLOps is a dynamic space, especially in computer vision. Here are some of the trends making waves right now:

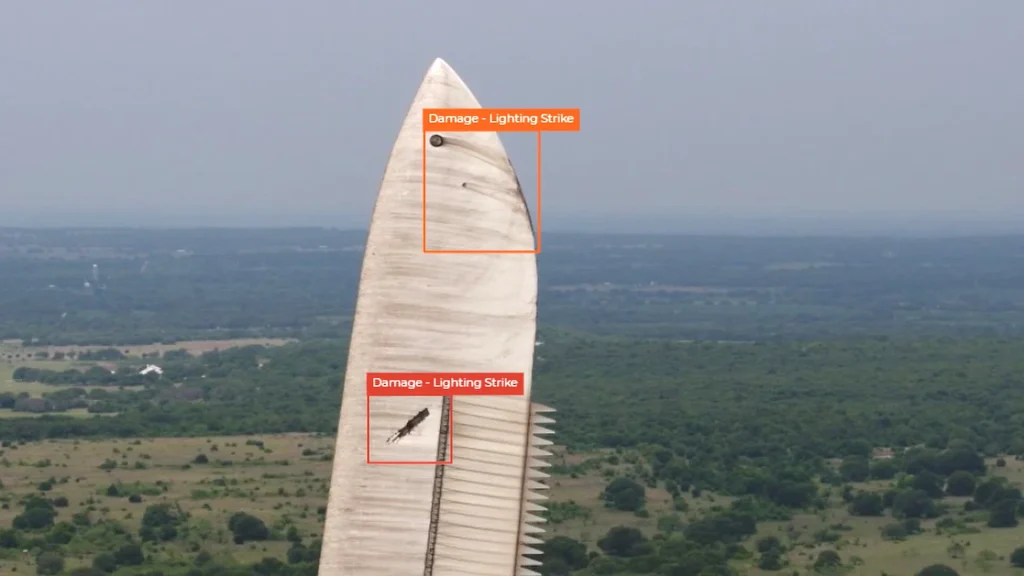

These aren’t just future possibilities; many innovations are already moving in this direction. For example, in climate protection, AutoML has been used to automate wind turbine blade inspections, reducing manual image review by half and allowing engineers to focus on real damage. This shows that AutoML can make complex computer vision tasks more manageable, even in industries without deep machine learning expertise.

An example of using computer vision to detect wind turbine damage. (Source)

Building reliable computer vision systems depends on managing data well and keeping models functional over time. Practices such as dataset versioning make experiments reproducible, while continuous validation ensures models stay accurate as new data arrives. These practices form the foundation of efficient MLOps pipelines, and they help computer vision projects scale up smoothly.

At Objectways, we help teams design structured, high-quality datasets that make these workflows possible. Better data leads to better models, and ultimately, better results. Book a call with our experts to see how we can support your computer vision journey.