As humans, we often learn new tasks by building on skills we already have. If you can ride a bicycle, picking up how to ride a scooter isn’t so hard; you’re already familiar with the rhythm of balancing and moving on two wheels.

Similarly, some AI models are now learning to use what they already know to solve tasks they are not specifically trained for. This is called zero-shot learning, and it helps AI models respond to new prompts or tasks without needing labeled examples.

Traditional AI models learn by example. They need many high-quality labeled inputs to recognize patterns and perform well. For instance, a model trained to detect fruits needs hundreds of labeled fruit images to succeed.

Zero-shot learning removes this barrier by helping systems generalize based on language, structure, or other related data. This makes it useful in fields where collecting labeled data is time-consuming or expensive.

In this article, we’ll take a closer look at zero-shot learning, its current importance, how it compares to traditional training methods, and why it’s gaining attention in the AI community.

Zero-shot learning is a machine learning method that lets an AI system perform a task without being trained on examples specific to that task. Instead of learning from direct samples, the model uses information from related data or previous tasks to understand and complete something new.

This ability relies on generalization. The model recognizes patterns in one area and applies them to another, often through natural language or contextual clues.

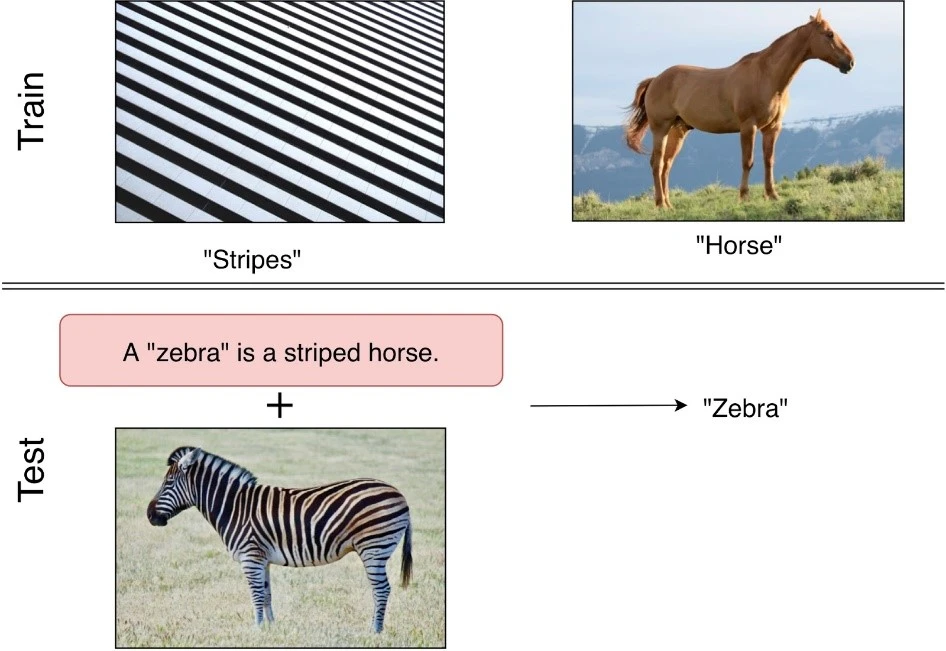

An example of inferring what a zebra looks like using zero-shot learning. (Source)

It’s a bit like trying to build IKEA furniture without the instructions. Even if you’ve never assembled that exact piece before, you can usually make sense of it based on your experience with similar items. In the same way, zero-shot learning enables a model to rely on task descriptions or instructions – rather than example data – to produce results.

This technique is especially useful in domains where labeled data is scarce or where tasks constantly change, such as language understanding or document classification. It helps models stay flexible and adapt to unseen challenges without constant retraining.
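To make the zebra example more concrete, here is a minimal sketch of one classic way zero-shot classification can work: each class is described by a list of attributes, and an unseen class is recognized by matching observed attributes against its description. The attribute names, the class table, and the `observed` scores are all made up for illustration; a real system would get these scores from a trained attribute detector or from text embeddings.

```python
import math

# Hypothetical attribute order: [has_stripes, horse_shaped, black_and_white]
CLASS_DESCRIPTIONS = {
    "horse": [0, 1, 0],
    "tiger": [1, 0, 0],
    "panda": [0, 0, 1],
    "zebra": [1, 1, 1],  # unseen class: described by attributes, never shown in training
}


def cosine(a, b):
    """Cosine similarity between two equal-length numeric vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def classify(predicted_attributes, descriptions=CLASS_DESCRIPTIONS):
    """Return the class whose attribute description best matches the prediction."""
    return max(descriptions, key=lambda name: cosine(predicted_attributes, descriptions[name]))


# Pretend output of an attribute detector looking at a zebra photo (illustrative numbers).
observed = [0.9, 0.8, 0.7]
print(classify(observed))  # prints "zebra"
```

The model never needs a labeled zebra image here. It only needs to know what stripes, a horse-like shape, and black-and-white coloring look like, plus a description that ties those attributes to the word "zebra".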

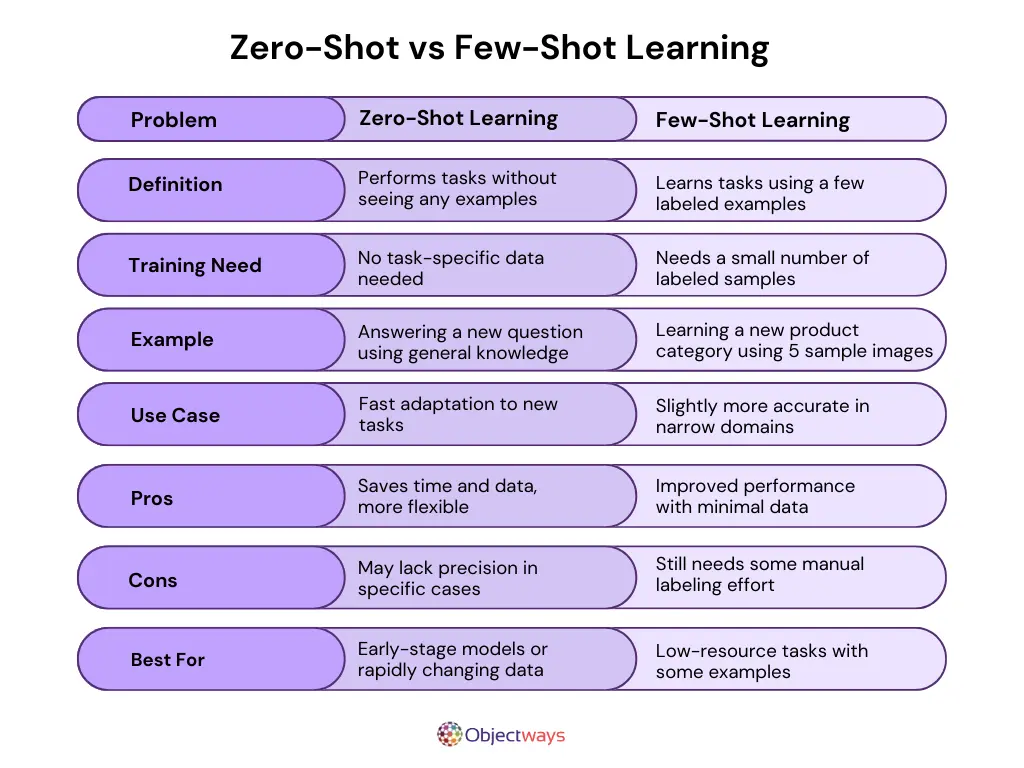

As AI takes on more diverse tasks, choosing the right learning approach becomes key. Two common approaches are zero-shot learning and few-shot learning. Zero-shot learning works with no task-specific examples, while few-shot learning uses just a handful to get started.

While they sound similar, they solve problems in different ways, as shown in the image below:

Zero-Shot Learning vs Few-Shot Learning
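In prompt form, the difference between the two approaches can be as small as whether any worked examples are included. The sketch below is only an illustration: `build_prompt`, the `Input:`/`Output:` layout, and the sample sentences are our own assumptions, not a format required by any particular model.

```python
def build_prompt(instruction, query, examples=()):
    """Build a prompt string; leaving `examples` empty yields a zero-shot prompt."""
    lines = [instruction, ""]
    for text, label in examples:
        # Each worked example shows the model one input and its expected output.
        lines += [f"Input: {text}", f"Output: {label}", ""]
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


instruction = "Classify the sentiment of the input as positive or negative."
query = "The battery died after two days."

# Zero-shot: instruction and query only.
zero_shot = build_prompt(instruction, query)

# Few-shot: the same instruction plus a handful of labeled examples.
few_shot = build_prompt(
    instruction,
    query,
    examples=[
        ("Great screen and fast delivery.", "positive"),
        ("The case cracked within a week.", "negative"),
    ],
)

print(zero_shot)
print("---")
print(few_shot)
```

Both prompts ask the same question. The few-shot version simply gives the model a pattern to imitate, which often helps when the expected output format is unusual.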

Giving a prompt to an AI model is a bit like writing a to-do list for someone new to the job. If the instructions are clear, they can usually figure it out using what they already know. But if the prompt is vague, they might miss the point entirely.

In zero-shot prompting, the model relies entirely on the prompt to understand and perform the task. It is given no worked examples in the prompt and was not trained on labeled examples of that task.

Here’s a look at how zero-shot prompting works, step by step:

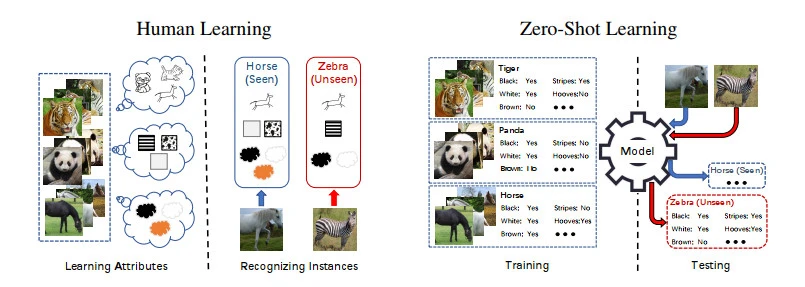

1. The model starts with no examples: In zero-shot learning, the AI system has not seen examples of the task it’s being asked to do.

2. A prompt sets the direction: The task is introduced through a written prompt, which acts as a simple instruction instead of training data.

3. The model draws from what it already knows: It uses its general language understanding to make sense of the prompt and generate a response.

4. The clarity of the prompt influences the outcome: Well-written prompts lead to better results, while vague ones can cause confusion or inaccurate answers.

5. The prompt becomes the entire setup: Since there is no task-specific training, the prompt plays a key role in guiding the model’s behavior and output.
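The steps above can be sketched in a few lines of code. This is a rough illustration under stated assumptions: `model` is any function that takes a prompt string and returns text, and `fake_model` is a stand-in we wrote so the example runs without a real language model. The prompt wording and the label names are hypothetical.

```python
from typing import Callable, Optional, Sequence


def make_prompt(text: str, labels: Sequence[str]) -> str:
    # Steps 1-2: no examples are supplied; a written instruction defines the task.
    return (
        "Assign the text to exactly one of these categories: "
        + ", ".join(labels)
        + ".\nReply with the category name only.\n\n"
        + f"Text: {text}\nCategory:"
    )


def zero_shot_classify(
    text: str, labels: Sequence[str], model: Callable[[str], str]
) -> Optional[str]:
    prompt = make_prompt(text, labels)
    # Step 3: the model answers using only what it already knows.
    reply = model(prompt)
    cleaned = reply.strip().lower().rstrip(".")
    # Steps 4-5: the prompt is the whole setup, so check the reply against the allowed labels.
    for label in labels:
        if cleaned == label.lower():
            return label
    return None  # the reply did not match any category


def fake_model(prompt: str) -> str:
    # Stand-in for a real language model call (assumption for this sketch).
    return " Billing.\n"


labels = ["Billing", "Shipping", "Technical issue"]
result = zero_shot_classify("I was charged twice this month.", labels, fake_model)
print(result)  # prints "Billing"
```

Validating the reply matters because nothing else constrains the output. If the model returns something outside the label list, the caller can reword the prompt and try again.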

Comparing Human and Zero-Shot Learning Approaches (Source)

Now, let’s explore why zero-shot learning matters and what it changes for teams building AI systems.

Building datasets is one of the most resource-intensive stages of any AI project. It requires coordination across labeling teams, quality checks, and domain-specific reviews. Zero-shot learning reduces this load by enabling models to operate based on instructions rather than examples.

In fact, this approach is already working well in real-world tests. Researchers from OpenAI and Meta have shown that large language models can perform a variety of tasks with little to no task-specific training data, simply by following well-crafted prompts.

Zero-shot learning can reduce the cost of development, especially in fields where annotation is expensive or restricted. It also improves scalability. Developers can move from idea to model configuration without pausing to collect new samples. The model’s ability to generalize from prior training helps teams explore more tasks with fewer manual steps.

AI deployment often slows down due to the need for fresh data and repeated model fine-tuning. Zero-shot learning helps teams skip many of these steps by using task instructions at runtime. It reduces the number of retraining cycles and makes it easier to apply existing models to new problems.

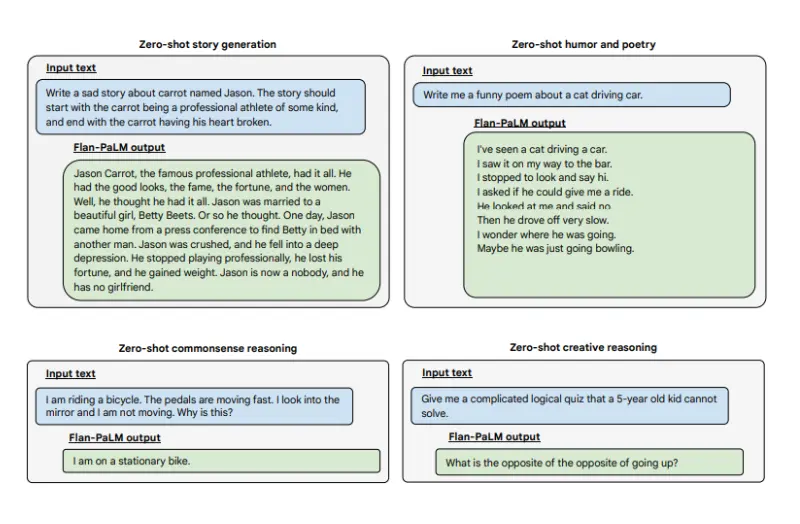

For instance, a recent study found that Natural Language Processing (NLP) models like T5 and PaLM achieved strong performance using zero-shot prompting on unseen benchmarks. Since zero-shot models are task-agnostic, they can be deployed in more settings without manual updates.

This makes it easier to keep AI systems up to date without slowing things down. Teams can just change the prompt instead of rewriting code or gathering new data. It saves time and helps new features go live faster.
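As a rough sketch of what "changing the prompt instead of the code" can look like, the example below keeps one model function and stores each task as a prompt template. The template wording, the task names, and `echo_model` (a placeholder that stands in for a real model call) are all assumptions made for illustration.

```python
# Each task is just a prompt template; the model and the calling code stay the same.
PROMPT_TEMPLATES = {
    "summarize": "Summarize the following text in one sentence.\n\nText: {text}\nSummary:",
    "translate_fr": "Translate the following text into French.\n\nText: {text}\nTranslation:",
}


def run_task(task, text, model):
    """Fill in the template for `task` and send it to the shared model."""
    prompt = PROMPT_TEMPLATES[task].format(text=text)
    return model(prompt)


def echo_model(prompt):
    # Placeholder for a real model: returns the instruction line so the flow is visible.
    return prompt.splitlines()[0]


# Adding a new capability is a one-line change: no new dataset, no retraining.
PROMPT_TEMPLATES["extract_dates"] = (
    "List every date mentioned in the following text.\n\nText: {text}\nDates:"
)

print(run_task("extract_dates", "The invoice is due on 3 May.", echo_model))
```

With a real model behind `run_task`, shipping a new feature becomes a matter of reviewing and testing a new template rather than scheduling a labeling project.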

Examples of Zero-Shot Tasks Using the PaLM Model (Source)

In many AI projects, testing new ideas takes time because each task often needs a separate model with labeled data. Zero-shot learning helps remove these blockers by enabling models to respond to new instructions without retraining.

According to Meta’s 2022 report on Open Pretrained Transformer (OPT) models, prompt design alone can guide the model to complete different tasks without task-specific examples. This makes it easier to explore multiple ideas at once, using the same base model.

If one prompt does not produce a useful result, teams can adjust the wording and try again without restarting the process. Since the model stays the same, the testing process becomes faster and more scalable.

Researchers and developers can test ideas by writing new prompts instead of preparing new datasets. This allows continuous experimentation and faster feedback cycles during development.

Now that we have a better understanding of why an AI developer might choose zero-shot learning, let’s take a look at how it’s being used in real-world applications.

Classifying text into categories is one of the most common NLP tasks, and zero-shot learning can be very effective here. For example, zero-shot learning has been used to classify financial documents without labeled training data. It can also support more demanding tasks, such as answering complex financial questions by prompting large language models to generate and run code.

This is particularly impactful in fields like compliance or law, where data labeling requires domain expertise and time. Zero-shot learning means teams can respond faster to real-world data without needing to rebuild or retrain models from scratch.

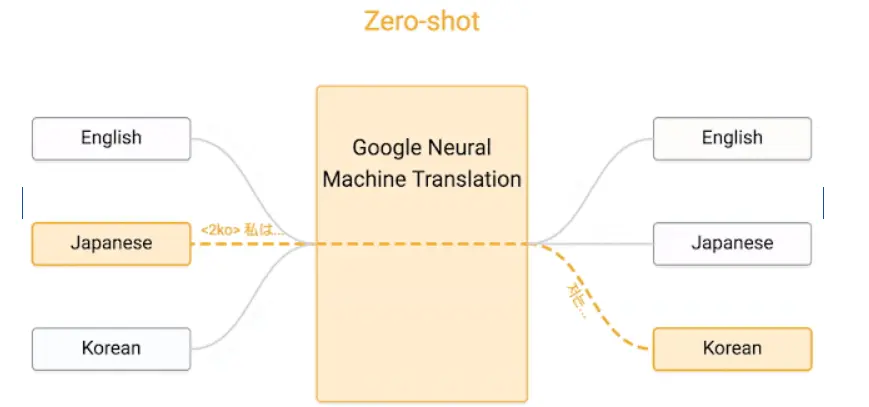

Zero-shot learning allows language models to translate between language pairs they haven’t been explicitly trained on. Rather than relying on parallel examples for every combination, the model draws on its shared understanding of language to bridge the gap.

A good example of this is Google’s Multilingual Neural Machine Translation (MNMT) system. It supports translation across more than 100 languages, including low-resource ones like Basque and Zulu. These translations are possible even when no direct training data exists for the specific language pair.

This method allows developers to launch translation tools in new regions faster, without waiting to gather large bilingual datasets. It also supports global communication by making translation more accessible across a wider set of languages.
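One mechanism described in Google’s published work on multilingual translation is to prepend a special token to the source sentence that names the target language, so a single model can be steered toward any output language. The sketch below only illustrates that input format. The function names and the set of trained language pairs are hypothetical, and no actual translation model is called.

```python
def tag_source(sentence, target_lang):
    """Prepend a target-language token such as <2es> to the source sentence."""
    return f"<2{target_lang}> {sentence}"


# Illustrative set of language pairs that had parallel training data (assumption).
TRAINED_PAIRS = {("pt", "en"), ("en", "pt"), ("en", "es"), ("es", "en")}


def is_zero_shot(source_lang, target_lang):
    """A pair is zero-shot if the model never saw parallel data for it."""
    return (source_lang, target_lang) not in TRAINED_PAIRS


# Portuguese -> Spanish was never trained directly, but the input format is identical.
print(tag_source("Olá, como vai?", "es"))  # prints "<2es> Olá, como vai?"
print(is_zero_shot("pt", "es"))            # prints True
```

Because every language shares the same model and the same input convention, requesting an unseen pair requires no change to the system, only a different token.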

Zero-Shot Translation with Google’s Multilingual Neural Machine Translation System (Source)

For organizations that work in multilingual settings, zero-shot learning can reduce costs and make scaling easier. It offers a practical path toward inclusive, language-aware AI systems that serve more users with fewer data requirements.

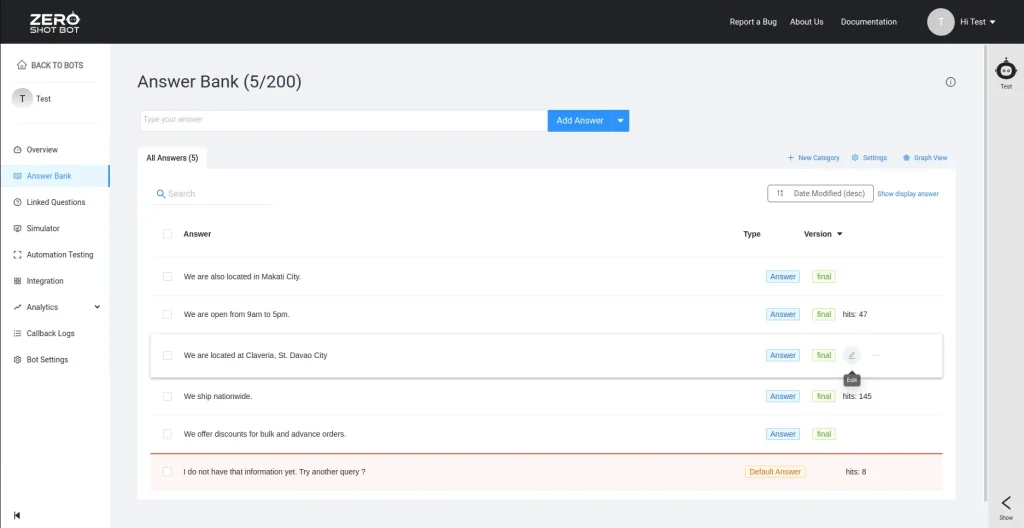

Zero-shot learning is also making it easier for businesses to set up AI-powered customer support without needing large sets of training data. One example is ZeroShotBot, a chatbot platform built for companies that want to launch support tools quickly, without writing code, and with no training data.

The system uses an answer bank, where businesses can add common questions and clear answers. The model then responds to customer queries by matching them with the most relevant answers.

Even if a question has not been asked before, the chatbot can still reply with the help of generative AI. ZeroShotBot supports over 100 languages and works across websites, mobile apps, and social media platforms.
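To give a feel for how an answer bank can work in general, here is a minimal sketch that matches a customer question to the closest stored question using word overlap. It is not ZeroShotBot’s actual implementation: the bank contents, the Jaccard similarity measure, and the `threshold` value are assumptions chosen to keep the example small. A production system would more likely compare meaning with language-model embeddings.

```python
import re

# Hypothetical answer bank: common questions mapped to approved answers.
ANSWER_BANK = {
    "How do I reset my password?": "Use the 'Forgot password' link on the sign-in page.",
    "What is your refund policy?": "Refunds are available within 30 days of purchase.",
    "How can I track my order?": "Open 'My orders' and select the order to see tracking details.",
}


def tokens(text):
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a, b):
    """Share of words two sets have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def answer(query, bank=ANSWER_BANK, threshold=0.3):
    """Return the stored answer for the closest question, or None if nothing is close."""
    q = tokens(query)
    best_question = max(bank, key=lambda question: jaccard(q, tokens(question)))
    if jaccard(q, tokens(best_question)) >= threshold:
        return bank[best_question]
    return None  # no good match: the caller could hand the query to a generative model


print(answer("how do i reset my password"))
print(answer("Do you ship to Canada?"))  # prints None
```

Returning `None` for weak matches is the design choice that matters here: it keeps the bot from giving a confident but unrelated answer and leaves room for a generative fallback.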

ZeroShotBot’s Answer Bank Interface for Managing Responses (Source)

While zero-shot learning brings a lot of flexibility, it’s not a silver bullet. Like any other machine learning approach, it has its trade-offs. Here are a few key things to keep in mind when using it:

Learning methods like zero-shot learning have opened the door to a new way of building artificial intelligence systems. Instead of training on examples for every task, models can now take on new challenges using only prompts and prior knowledge.

This makes it easier to test ideas, launch faster, and handle changing needs without starting over. However, it isn’t a one-size-fits-all solution. It works best when paired with thoughtful prompt design and a reliable underlying model.

At Objectways, we help teams build better AI solutions by offering a full suite of services, including high-quality data labeling, data sourcing, and AI development support. Whether you’re training models from scratch or working on zero-shot and few-shot learning applications, we provide the clean, scalable data needed to power accurate, reliable systems.

Explore our services to see how Objectways can support your next AI project, and connect with our team to get started today!