Self-driving cars, AI security cameras, and visual inspection systems in factories all have one thing in common: they rely on computer vision models trained on large sets of labeled data. Labeling, also known as annotation, is the process of adding information, such as bounding boxes, tags, or classifications, to visual content like images and videos. Annotated data helps AI systems learn to detect patterns and recognize specific objects in visual data.

Annotating images is relatively straightforward. Video annotation, however, is more complex and involves a more detailed process. Generally, a video is first broken down into individual frames, and each frame is then annotated separately.

For example, a 5-minute video recorded at 24 frames per second (fps) contains 7,200 individual frames (5 minutes × 60 seconds × 24 frames per second), the equivalent of 7,200 separate images to label. Since computer vision models often need hours of labeled video data to perform accurately, video annotation can be a time-consuming and demanding task.
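To make the arithmetic above concrete, here is a small Python sketch that computes the frame count and a rough labeling-time estimate. The 10 seconds per frame figure is purely an illustrative assumption, not a benchmark; real effort varies widely with scene complexity and the number of objects per frame.

```python
def frames_to_label(duration_minutes: float, fps: float) -> int:
    """Number of frames in a clip of the given length and frame rate."""
    return round(duration_minutes * 60 * fps)


def labeling_hours(num_frames: int, seconds_per_frame: float) -> float:
    """Rough labeling time in hours for a fixed, assumed effort per frame."""
    return num_frames * seconds_per_frame / 3600


frames = frames_to_label(5, 24)    # 5 min x 60 s x 24 fps
print(frames)                      # 7200
print(labeling_hours(frames, 10))  # 20.0 (hours, at an assumed 10 s per frame)
```

Even under this optimistic assumption, a single 5-minute clip works out to about 20 hours of labeling, which is why the tooling choices below matter.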

To make this process more manageable, a variety of video annotation tools have been developed. Choosing the right video annotation software can streamline your workflow, improve data quality, and save valuable time.

In this article, we’ll explore the top 5 video annotation tools of 2025, from lightweight open-source options to powerful enterprise-level solutions. Let’s get started!

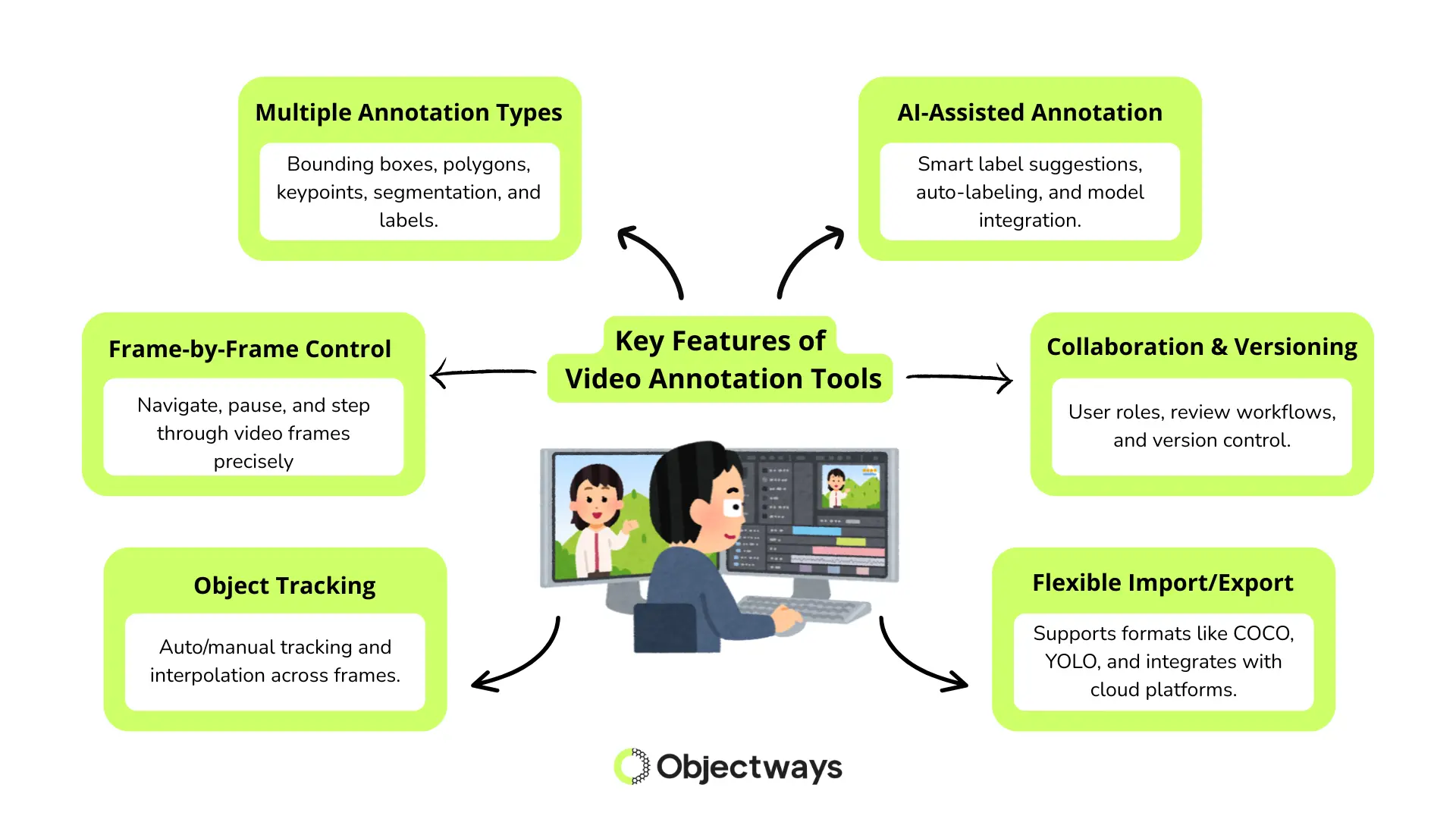

Before diving into the top 5 video annotation tools of 2025, it’s important to understand the key features that make a video labeling tool effective.

Just like choosing the right tool from a toolbox depends on the task, selecting the right video annotation software depends on your project’s needs. Not all video annotation tools are the same, and they typically differ with respect to supported data formats, pricing structures, and how much you can customize them.

Key Features of Video Annotation Tools

To handle the complexity of video labeling, an ideal video annotation tool should offer a complete set of features. At a minimum, it should help you manage large amounts of video data and make it easy to produce high-quality labels for training AI models.

Here are some features that a reliable video annotation tool may offer:

Choosing the right video annotation tool can significantly impact the speed, accuracy, and scalability of your AI project. Next, let’s take a closer look at five impactful video annotation tools and explore the unique strengths each one brings to different types of AI projects.

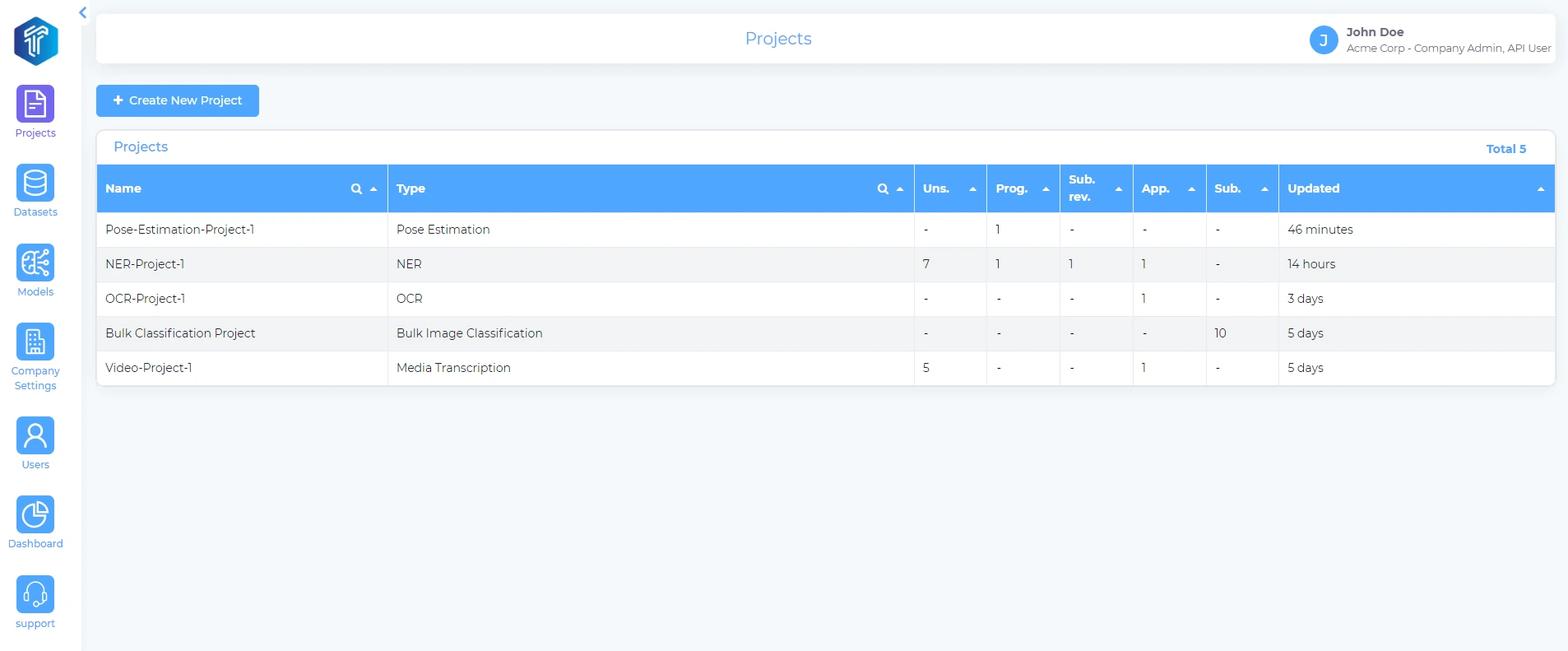

TensorAct Studio is a powerful platform built for enterprise-level video annotation. It’s a go-to option for teams that need robust control, data security, and custom workflows. Since it also supports audio annotation and transcription, it can serve as a comprehensive tool for large-scale AI projects.

Here are some of the key features of TensorAct:

Managing Multiple Projects within TensorAct Studio (Source)

Is your team working with complex video data? Then, TensorAct might be just what you need. It’s built to handle various video formats and works well for advanced use cases, such as surveillance and autonomous driving. It’s also great for breaking down long video clips into useful sections, such as spotting highlights in sports footage or detecting intrusions in security videos.
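Splitting a long clip into labeled sections, such as a sports highlight, usually comes down to mapping time ranges onto frame indices. The sketch below is a hypothetical illustration of that mapping: the `Segment` class and `to_frame_range` helper are our own names, not part of TensorAct Studio's API.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """A labeled time span in a video (hypothetical schema, not TensorAct's)."""
    label: str
    start_s: float
    end_s: float


def to_frame_range(seg: Segment, fps: float) -> range:
    """Frame indices covered by a segment, snapped to the nearest frame (end exclusive)."""
    return range(round(seg.start_s * fps), round(seg.end_s * fps))


# A 2.5-second highlight in a 24 fps sports clip
highlight = Segment("goal", start_s=12.5, end_s=15.0)
frames = to_frame_range(highlight, fps=24)
print(frames.start, frames.stop, len(frames))  # 300 360 60
```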

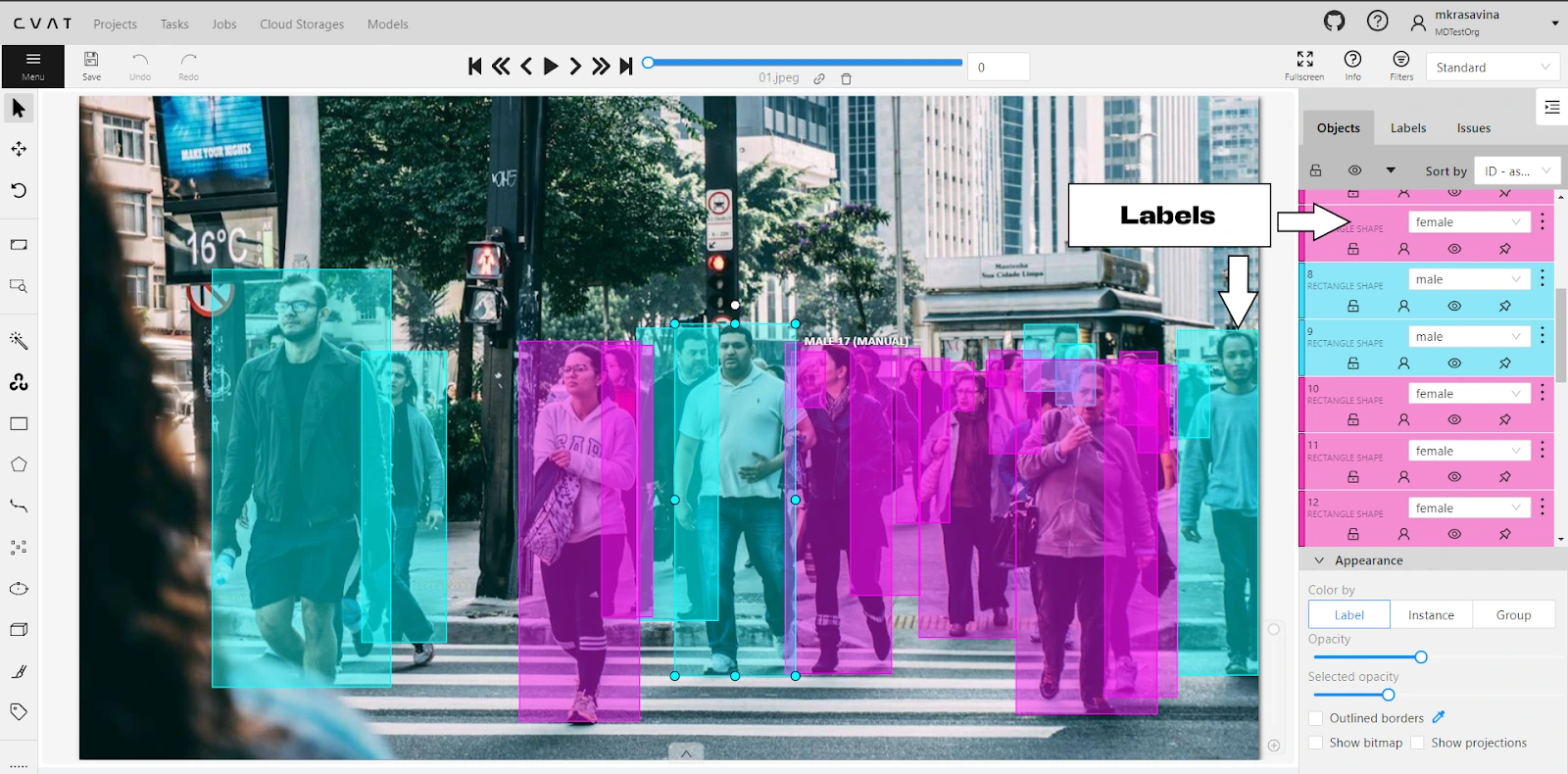

Computer Vision Annotation Tool, or CVAT, is an open-source video annotation tool developed by Intel in 2017. It offers advanced customization options and can be integrated into larger machine learning pipelines.

CVAT includes a range of features that streamline the annotation process. Users can move easily through video frames and manage tasks through a clean, intuitive interface. It supports multiple annotation types, including bounding boxes, polygons, and keypoints, which are commonly used in object detection, tracking, and segmentation tasks.

An Example of Labeling Objects Using the CVAT Annotation Tool (Source)
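To illustrate how the three annotation types differ, here is a minimal, hypothetical in-memory model of one frame's labels. It is not CVAT's export format; the class and field names are our own assumptions, chosen only to show what each shape stores.

```python
from dataclasses import dataclass, field


@dataclass
class BoundingBox:
    label: str
    x: float       # top-left corner, in pixels
    y: float
    width: float
    height: float

    def area(self) -> float:
        return self.width * self.height


@dataclass
class Polygon:
    label: str
    points: list[tuple[float, float]]  # vertices in drawing order

    def area(self) -> float:
        # Shoelace formula over consecutive vertex pairs (wrapping around)
        total = 0.0
        for (x1, y1), (x2, y2) in zip(self.points, self.points[1:] + self.points[:1]):
            total += x1 * y2 - x2 * y1
        return abs(total) / 2


@dataclass
class Keypoints:
    label: str
    points: dict[str, tuple[float, float]]  # named landmarks, e.g. "left_knee"


@dataclass
class FrameAnnotations:
    frame_index: int
    shapes: list = field(default_factory=list)


frame = FrameAnnotations(frame_index=120)
frame.shapes.append(BoundingBox("car", 40, 60, 200, 100))
frame.shapes.append(Polygon("road", [(0, 0), (100, 0), (100, 50), (0, 50)]))
frame.shapes.append(Keypoints("person", {"head": (310, 80), "left_knee": (300, 240)}))
print([type(s).__name__ for s in frame.shapes])
```

Boxes are cheapest to draw, polygons trace object outlines for segmentation, and keypoints mark named landmarks such as joints for pose estimation.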

On top of this, the platform is built for efficient teamwork. Team members can work on different parts of a project while maintaining clear boundaries over who views or edits specific annotations. This makes CVAT well suited for researchers and early-stage startup projects where flexibility and customization are important.

It is commonly used for tracking objects in surveillance and drone footage, annotating data for self-driving cars, labeling medical video for diagnostics, analyzing sports footage, and preparing training data for robotics and automation tasks. For projects that require strict data privacy, CVAT can also be self-hosted on your own infrastructure.
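For tracking tasks, tools such as CVAT can interpolate a shape between keyframes instead of requiring the annotator to redraw it on every frame. The function below is a minimal linear-interpolation sketch of that idea under our own assumptions (axis-aligned boxes, hypothetical `Box` and `interpolate_box` names); it is not CVAT's implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    x: float
    y: float
    w: float
    h: float


def interpolate_box(keyframes: dict[int, Box], frame: int) -> Box:
    """Linearly interpolate a tracked box between the nearest surrounding keyframes."""
    if frame in keyframes:
        return keyframes[frame]
    before = [f for f in keyframes if f < frame]
    after = [f for f in keyframes if f > frame]
    if not before or not after:
        raise ValueError("frame is outside the annotated keyframe range")
    f0, f1 = max(before), min(after)
    t = (frame - f0) / (f1 - f0)  # 0.0 at f0, 1.0 at f1
    a, b = keyframes[f0], keyframes[f1]

    def lerp(p: float, q: float) -> float:
        return p + (q - p) * t

    return Box(lerp(a.x, b.x), lerp(a.y, b.y), lerp(a.w, b.w), lerp(a.h, b.h))


# An annotator draws a vehicle's box in drone footage on frames 0 and 10 only
track = {0: Box(0, 0, 10, 10), 10: Box(100, 50, 20, 10)}
print(interpolate_box(track, 5))  # Box(x=50.0, y=25.0, w=15.0, h=10.0)
```

Linear interpolation works well for smooth motion; when an object changes direction or speed, the annotator adds another keyframe and corrects the box there.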

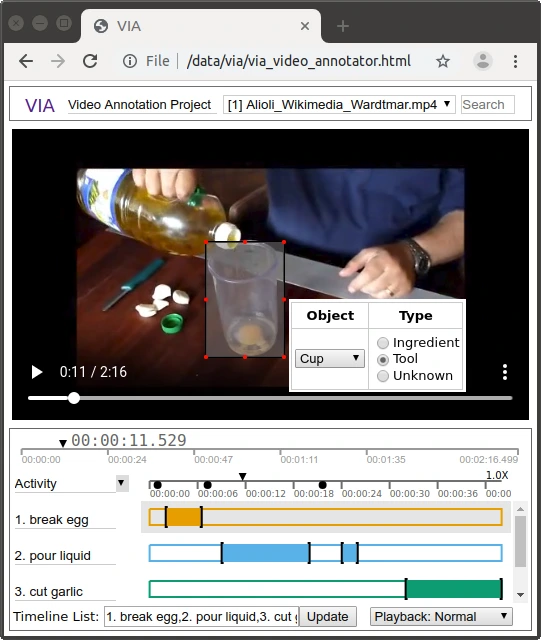

VIA, or VGG Image Annotator, is a free and open-source annotation tool developed by the Visual Geometry Group at the University of Oxford. It is a browser-based tool that offers basic annotation features for images, audio, and video.

VIA is designed to be easy to use. Since it runs in the browser, you don't need to install anything; just open the tool in your browser and start labeling.

It also runs entirely offline, making it ideal for projects that require privacy or are conducted in environments without internet access. Once annotations are complete, the data can be exported in a clean JSON format, which is easy to use for training AI models.
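As an example of working with that JSON, the sketch below flattens rectangle regions into simple records. It assumes the VIA 2.x region export layout (entries with `filename`, `regions`, `shape_attributes`, and `region_attributes`); video projects created with VIA 3 use a different schema, so check your own export first. The `label` attribute name is a hypothetical choice, since VIA region attributes are user-defined.

```python
import json


def load_via_boxes(json_text: str, label_key: str = "label") -> list[dict]:
    """Flatten rectangle regions from a VIA 2.x-style JSON export (assumed layout)."""
    project = json.loads(json_text)
    boxes = []
    for entry in project.values():
        for region in entry.get("regions", []):
            shape = region["shape_attributes"]
            if shape.get("name") != "rect":
                continue  # this sketch skips polygons, points, and other shapes
            boxes.append({
                "file": entry["filename"],
                "label": region.get("region_attributes", {}).get(label_key),
                "x": shape["x"], "y": shape["y"],
                "width": shape["width"], "height": shape["height"],
            })
    return boxes


sample = """{"frame_0001.jpg1234": {"filename": "frame_0001.jpg", "size": 1234,
  "regions": [{"shape_attributes": {"name": "rect", "x": 10, "y": 20, "width": 30, "height": 40},
               "region_attributes": {"label": "car"}}],
  "file_attributes": {}}}"""
boxes = load_via_boxes(sample)
print(boxes)
```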

If you are looking for a lightweight, no-install annotation tool for quick labeling tasks, VIA is a great choice. It's well suited for small-scale projects where ease of use and speed take priority over advanced features. Students, researchers, and hobbyists often find it practical for quick, privacy-friendly labeling.

Annotating Specific Video Segments with VIA Directly in the Browser (Source)

Sloth is another open-source tool designed for image and video annotation, but it takes a different approach from the other annotation platforms we've discussed so far. Hosted on GitHub, Sloth is best suited for developer-focused teams with strong programming skills. Although it provides an interface for drawing labels, annotation types, object classes, and their behaviors are defined in Python configuration files rather than through menus. This setup provides greater flexibility and control, making it well suited for custom or complex labeling tasks.
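To give a sense of what configuration-driven labeling looks like, here is a sketch of a Sloth-style label configuration for a driving dataset. The `LABELS` layout (`attributes`, `item`, `inserter`, `text`) follows Sloth's documented examples as we understand them, but key names and item classes should be verified against the Sloth version you install; the class names themselves are hypothetical.

```python
# labels_config.py: a Sloth-style label configuration (layout based on Sloth's
# documented examples; verify key names against the version you install)
LABELS = (
    {
        "attributes": {"class": "vehicle"},
        "item": "sloth.items.RectItem",
        "inserter": "sloth.items.RectItemInserter",
        "text": "Vehicle",
    },
    {
        "attributes": {"class": "pedestrian"},
        "item": "sloth.items.RectItem",
        "inserter": "sloth.items.RectItemInserter",
        "text": "Pedestrian",
    },
    {
        "attributes": {"class": "traffic_sign"},
        "item": "sloth.items.RectItem",
        "inserter": "sloth.items.RectItemInserter",
        "text": "Traffic sign",
    },
)

if __name__ == "__main__":
    # Quick sanity check: list the label classes this config defines
    print([label["attributes"]["class"] for label in LABELS])
```

Because the configuration is plain Python, it can be version-controlled and reused across projects, which is what keeps labels consistent from one dataset to the next.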

Here’s a glimpse of some of the key features of Sloth:

For teams that prefer working directly with code and need full control over the annotation workflow, Sloth is a solid option. It’s especially useful in AI projects where labels have to follow a consistent structure or when the same process needs to be repeated across large datasets.

Consider researchers working on autonomous driving data. They can use Sloth to annotate vehicles, pedestrians, and traffic signs in a standardized format across thousands of frames.
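A standardized format also makes it easy to check labels automatically before training. The sketch below validates one frame's annotations against an allowed class list; the record fields (`class`, `x`, `y`, `width`, `height`) and the helper name are hypothetical assumptions for illustration, not Sloth's own validation logic.

```python
ALLOWED_CLASSES = {"vehicle", "pedestrian", "traffic_sign"}
REQUIRED_FIELDS = ("class", "x", "y", "width", "height")


def validate_annotations(annotations: list[dict]) -> list[str]:
    """Return human-readable problems found in one frame's annotations."""
    problems = []
    for i, ann in enumerate(annotations):
        missing = [name for name in REQUIRED_FIELDS if name not in ann]
        if missing:
            problems.append(f"annotation {i}: missing {', '.join(missing)}")
            continue
        if ann["class"] not in ALLOWED_CLASSES:
            problems.append(f"annotation {i}: unknown class {ann['class']!r}")
        if ann["width"] <= 0 or ann["height"] <= 0:
            problems.append(f"annotation {i}: box has non-positive size")
    return problems


frame_annotations = [
    {"class": "vehicle", "x": 12, "y": 40, "width": 80, "height": 35},
    {"class": "car", "x": 150, "y": 42, "width": 60, "height": 30},
    {"class": "pedestrian", "x": 300, "y": 20},
]
for problem in validate_annotations(frame_annotations):
    print(problem)
```

Run across thousands of frames, a check like this catches typos such as "car" instead of "vehicle" before they silently split one class into two.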

Label Studio is another open-source tool, and it can handle various data types, such as video, images, text, and audio. Its modular architecture and plugin-based extensions make it well suited for multi-modal AI projects.

Label Studio comes with a wide range of features for flexible and detailed labeling. With its video plugin, you can tag specific frames, mark actions, and segment clips directly on the timeline.

It also lets you customize how labeling works and add extra tools through plugins. For team projects or long-term work, Label Studio includes built-in tools to manage tasks and keep track of different versions, so everyone stays organized and nothing gets lost.

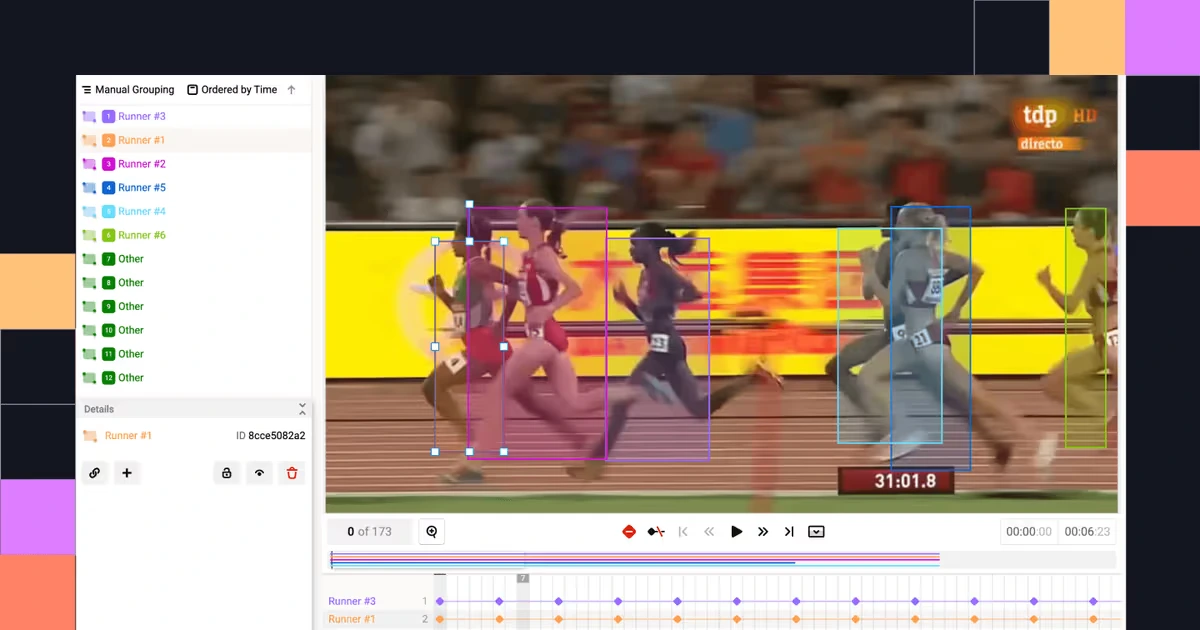

Tracking Multiple Runners in a Race using Label Studio (Source)
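Label Studio describes its labeling interface with an XML-like configuration. The Python sketch below assembles one modeled on Label Studio's video object tracking template (`Labels`, `Video`, and `VideoRectangle` tags, with `$video` referring to the task's video field). The label names are hypothetical, and tag and attribute details should be checked against the Label Studio documentation for your version.

```python
import xml.etree.ElementTree as ET


def video_tracking_config(labels: list[str], fps: float) -> str:
    """Build a Label Studio-style labeling config for boxing objects across video frames."""
    view = ET.Element("View")
    label_group = ET.SubElement(view, "Labels", name="videoLabels", toName="video")
    for value in labels:
        ET.SubElement(label_group, "Label", value=value)
    # The frame rate should match the project's videos so boxes line up with frames
    ET.SubElement(view, "Video", name="video", value="$video", framerate=str(fps))
    ET.SubElement(view, "VideoRectangle", name="box", toName="video")
    return ET.tostring(view, encoding="unicode")


print(video_tracking_config(["Runner"], fps=25.0))
```

Generating the config in code rather than hand-editing it keeps the label list in one place when several projects share the same classes.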

While selecting the right video annotation tool is crucial, it's equally important to understand that even the most feature-rich tool is just one part of a successful data labeling strategy. High-quality annotation also depends on consistent guidelines, rigorous quality control, and domain expertise.

Oftentimes, when dealing with complex video data, you need more than just a tool. You need a complete data annotation solution that combines human expertise with reliable workflows. It should include:

Does all this sound complex? That's exactly why turning to experts is the best move, and you're in the right place.

At Objectways, we provide professional video annotation services supported by experienced human annotators. Whether you’re working with dashcam footage, medical videos, or surveillance data, we deliver high-quality annotations at scale. Our offerings include multi-modal workflows, comprehensive QA pipelines, annotator training, and end-to-end project management.

Building computer vision models for real-world problems starts with high-quality labeled data. While open-source video annotation tools like CVAT and Label Studio work well for researchers and small teams, real-world and enterprise applications require scalable tools combined with skilled human expertise.

At Objectways, we specialize in transforming raw video into high-quality, production-ready AI training data. With a dedicated team of expert video annotators who bring domain knowledge, Objectways can help you curate accurately labeled video data.

Need data for your next AI initiative? Book a call with Objectways to explore scalable solutions.