Table of contents

Best Practices to Manage Content Moderation Processes

Choose the Right Moderation Tool

Quality Service Level Agreements

Conclusion

Cleaning Up the Internet: The Importance of Effective Content Moderation

User-generated content (UGC) can be a valuable asset for digital platforms and businesses, providing an opportunity for users to engage with content and each other. However, UGC can also present several challenges and issues, including:

- Legal and regulatory compliance: UGC can violate legal and regulatory requirements, such as copyright, privacy, or advertising standards. Digital platforms and businesses need to ensure that the content users post complies with these requirements to avoid legal or regulatory repercussions.

- Offensive or harmful content: UGC can include content that is offensive, hateful, or harmful, such as hate speech, discriminatory language, or graphic violence. This type of content leads to a negative user experience and can damage brand reputation.

- Inappropriate content: UGC can also include inappropriate or irrelevant content, such as spam, fake reviews, or off-topic comments. This lowers the overall quality of the content and makes it harder for users to find relevant and useful information.

- Misinformation: UGC can include false or misleading information, which can harm users as well as the platform or business. Digital platforms and businesses must take steps to keep UGC accurate and truthful.

- Brand safety: UGC can pose a risk to brand safety, particularly if the content includes references to competitors or is critical of the platform or business. Digital platforms and businesses need to monitor UGC to ensure that it does not damage their brand image.

- Moderation cost and scalability: UGC can be difficult and expensive to moderate at scale. Digital platforms and businesses may need to invest in moderation tools, processes, and personnel to ensure that UGC is reviewed and moderated effectively.

Overall, UGC presents a number of challenges and issues that must be addressed by digital platforms and businesses. By implementing appropriate moderation processes, tools, and guidelines, these challenges can be mitigated, and UGC can continue to provide value to both users and the platform or business.

Best Practices to Manage Content Moderation Processes

Content moderation best practices combine human and automated moderation techniques, clear and transparent policies, and effective communication with users. By implementing these practices, digital platforms and businesses can provide a safe and positive user experience while complying with legal and regulatory requirements.

Objectways Content Moderation Human Review workflows follow these steps:

- User report or flagging: The first step in the human content moderation workflow is for users to report or flag content that they believe violates platform policies or community guidelines.

- Review content: Once the content is flagged, a human content moderator reviews it and determines whether it violates any platform policies or community guidelines.

- Categorize the content: The moderator categorizes the content by type of violation, such as hate speech, graphic violence, or adult content.

- Take action: The moderator then takes appropriate action depending on the type of violation. This can include removing the content, warning the user, or escalating the issue to a higher authority.

- Appeal process: Platforms often provide an appeal process for users to dispute moderation decisions. If a user appeals, the content is reviewed by a different moderator or team.

- Monitoring and continuous improvement: After content is moderated, it is important to monitor the platform for repeat offenses or new types of violations. This helps to identify new patterns and improve the moderation process.
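The steps above can be sketched as a small state machine. This is a minimal illustration rather than the Objectways implementation: the `Case` record, the status names, and the category-to-action mapping in `ACTIONS` are all hypothetical and would be defined by each platform's own policies.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Status(Enum):
    FLAGGED = "flagged"      # reported by a user, awaiting review
    ACTIONED = "actioned"    # a violation was found and acted on
    CLEARED = "cleared"      # reviewed, no violation found
    APPEALED = "appealed"    # the user disputed the decision


# Hypothetical mapping from violation category to the action taken.
ACTIONS = {
    "hate_speech": "remove_content",
    "graphic_violence": "remove_content",
    "adult_content": "escalate",
    "spam": "warn_user",
}


@dataclass
class Case:
    content_id: str
    reported_by: str
    status: Status = Status.FLAGGED
    category: Optional[str] = None
    action: Optional[str] = None
    reviewers: List[str] = field(default_factory=list)


def review(case: Case, moderator: str, category: Optional[str]) -> Case:
    """Record a moderator's decision; category=None means no violation."""
    if case.status is Status.APPEALED and moderator in case.reviewers:
        raise ValueError("an appeal must be reviewed by a different moderator")
    case.reviewers.append(moderator)
    case.category = category
    if category is None:
        case.action = None
        case.status = Status.CLEARED
    else:
        # Unknown categories are escalated instead of being guessed at.
        case.action = ACTIONS.get(category, "escalate")
        case.status = Status.ACTIONED
    return case


def appeal(case: Case) -> Case:
    """Let the user dispute a decision that led to an action."""
    if case.status is not Status.ACTIONED:
        raise ValueError("only actioned cases can be appealed")
    case.status = Status.APPEALED
    return case
```

A flagged case passes through `review`, may go through `appeal`, and is then reviewed again by a second moderator. The `reviewers` list doubles as an audit trail that can feed the monitoring step.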

Choose the Right Moderation Tool

There are many tools available to help digital platforms and businesses with content moderation. Here are some leading tools for content moderation:

- Google Perspective API: Google Perspective API uses machine learning to identify and score the level of toxicity in a piece of content, making it easier for moderators to identify content that may violate community guidelines.

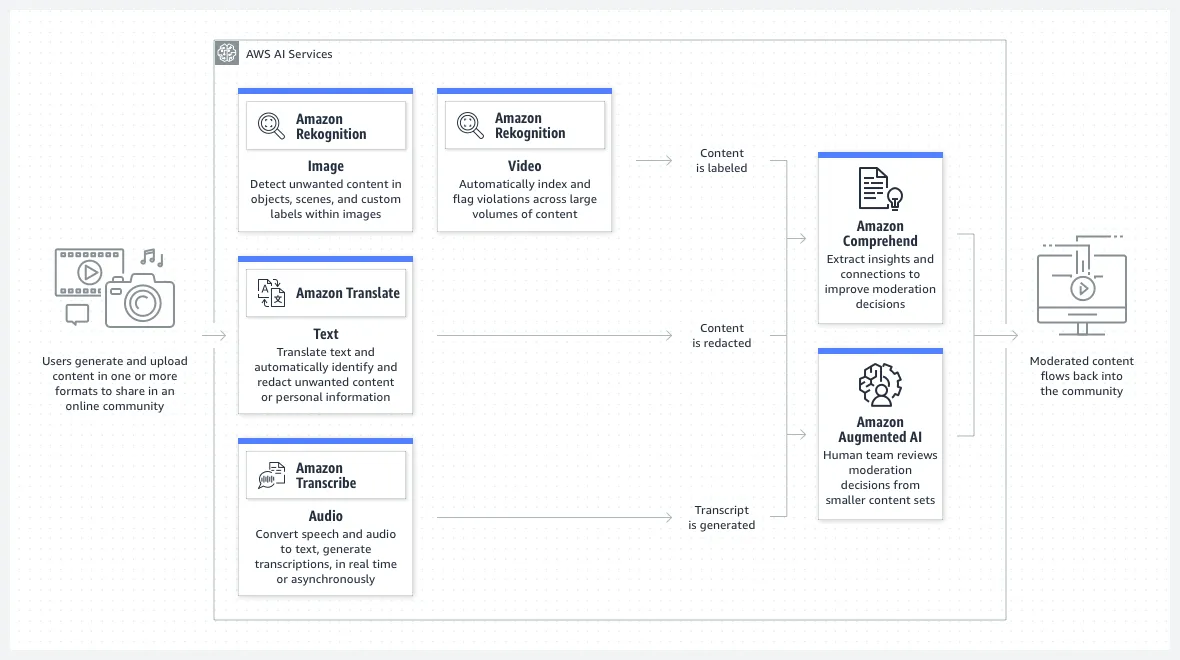

- Amazon Rekognition: Amazon Rekognition is an image and video analysis service that can be used to automatically identify and moderate content that violates community guidelines.

- OpenAI GPT-3: OpenAI GPT-3 is a large language model that can be used to analyze and moderate user-generated content, such as comments and reviews.

- Besedo: Besedo is a content moderation platform that uses a combination of AI and human moderators to review and moderate user-generated content.

We typically work with tools chosen by our customer partners. For our Turnkey Content Moderation offering we use Amazon Rekognition, because it is easy to use, integrates well, and provides human-in-the-loop capabilities.
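As a rough sketch of how automated scoring and human review can be combined, the function below routes an image based on a response shaped like the output of Rekognition's `DetectModerationLabels` operation, which returns a `ModerationLabels` list whose entries carry `Name`, `ParentName`, and a `Confidence` between 0 and 100. The two thresholds and the `route_image` function itself are assumptions made for illustration, not part of the AWS API or of the Objectways workflow.

```python
from typing import Any, Dict, List

# Hypothetical thresholds; tune them per platform and policy.
AUTO_REJECT_CONFIDENCE = 95.0
HUMAN_REVIEW_CONFIDENCE = 50.0


def route_image(response: Dict[str, Any]) -> Dict[str, Any]:
    """Decide what to do with an image given a moderation response.

    `response` is assumed to look like the result of
    boto3.client("rekognition").detect_moderation_labels(...).
    """
    labels: List[Dict[str, Any]] = response.get("ModerationLabels", [])
    if not labels:
        # Nothing was detected, so the image is published.
        return {"decision": "approve", "labels": []}

    top = max(label["Confidence"] for label in labels)
    names = sorted({label["Name"] for label in labels})

    if top >= AUTO_REJECT_CONFIDENCE:
        decision = "reject"          # the model is very confident
    elif top >= HUMAN_REVIEW_CONFIDENCE:
        decision = "human_review"    # uncertain, so send to a moderator
    else:
        decision = "approve"
    return {"decision": decision, "labels": names}
```

Only the middle confidence band reaches human moderators, which keeps review volume manageable while leaving ambiguous cases to people.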

AWS Content Moderation Workflow

Quality Service Level Agreements

Service Level Agreements (SLAs) are agreements between our customers (digital platforms and businesses) and the Objectways content moderation team. The SLAs specify the expected quality and quantity of content moderation services and set out the penalties for failing to meet those expectations. Our SLAs can include:

- Turnaround time: This specifies the time frame within which flagged content must be reviewed and moderated.

- Accuracy rate: This specifies the minimum share of moderation decisions that must be correct, typically measured through quality audits.

- Rejection rate: This specifies the expected or maximum share of reviewed content that moderators reject.

- Escalation process: This specifies how content that needs additional review or action is escalated, and to whom.

- Availability: This specifies the availability of content moderation services, such as 24/7 or during specific business hours.

- Reporting and communication: This specifies the frequency and format of reporting on content moderation activities, as well as the communication channels for raising issues or concerns.

- Penalties and incentives: This specifies the penalties for failing to meet the SLA targets and the incentives for exceeding them, such as financial penalties or bonuses.
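The measurable SLA terms can be computed from a log of moderation decisions. The sketch below is one possible way to do this. The `Decision` record and its fields are hypothetical, and it assumes that accuracy is judged against a quality-audit verdict and that rejection rate means the share of reviewed items a moderator rejected; an actual SLA may define these terms differently.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List


@dataclass
class Decision:
    flagged_at: datetime   # when the content was reported
    decided_at: datetime   # when the moderator made a decision
    rejected: bool         # the moderator rejected or removed the content
    correct: bool          # the decision matched the quality-audit verdict


def sla_report(decisions: List[Decision], max_turnaround: timedelta) -> Dict[str, float]:
    """Summarize turnaround, accuracy, and rejection as fractions of all decisions."""
    if not decisions:
        raise ValueError("no decisions to report on")
    n = len(decisions)
    within = sum(
        1 for d in decisions if d.decided_at - d.flagged_at <= max_turnaround
    )
    return {
        "within_turnaround": within / n,
        "accuracy_rate": sum(d.correct for d in decisions) / n,
        "rejection_rate": sum(d.rejected for d in decisions) / n,
    }
```

Comparing each value with the target agreed in the SLA shows whether a penalty or an incentive applies for the reporting period.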

By establishing clear SLAs for human content moderation, digital platforms and businesses can ensure that the quality and quantity of content moderation services meet their requirements and standards. It also allows them to hold our content moderation teams accountable for meeting performance targets and provide transparency to their users.

Conclusion

In conclusion, human review plays a critical role in content moderation and in maintaining the safety, security, and trustworthiness of online platforms and communities. By providing a human layer of review and oversight, it helps to ensure that inappropriate or harmful content is removed, while legitimate and valuable content is allowed to thrive.

However, human review is a complex and challenging process that requires careful planning, organization, and management. It also requires a deep understanding of the cultural, linguistic, and social nuances of the communities being moderated, as well as a commitment to transparency, fairness, and accountability.

By combining best practices, advanced technologies, and human expertise, it is possible to create effective and efficient human review workflows that support the goals and values of online platforms and communities. Whether it’s combating hate speech, preventing cyberbullying, or ensuring the safety of children, human review is a critical tool in creating a safer and more trustworthy online world for everyone.

Please contact Objectways Content Moderation experts to plan your next Content Moderation project.