Sitting in traffic and staying focused on the road is something most of us have dealt with. Despite our best efforts, things don’t always go as planned, and accidents can happen. Driving may be convenient, but it’s certainly not simple.

Cutting-edge solutions like advanced driver assistance systems (ADAS) are designed to make driving safer and easier. These systems rely on sensors such as cameras, radar, and LiDAR (Light Detection and Ranging) to observe the vehicle's surroundings and assist the driver. By detecting vehicles, reading road signs, and monitoring lane positions, ADAS support key driving tasks and reduce the risk of collisions.

Applications such as collision avoidance, adaptive cruise control, and traffic sign recognition are now becoming common. In fact, the global ADAS market is expected to grow from $42.5 billion in 2024 to about $133.8 billion by 2034. This growth is driven by advancements in artificial intelligence (AI), sensor technology, and real-time data processing.

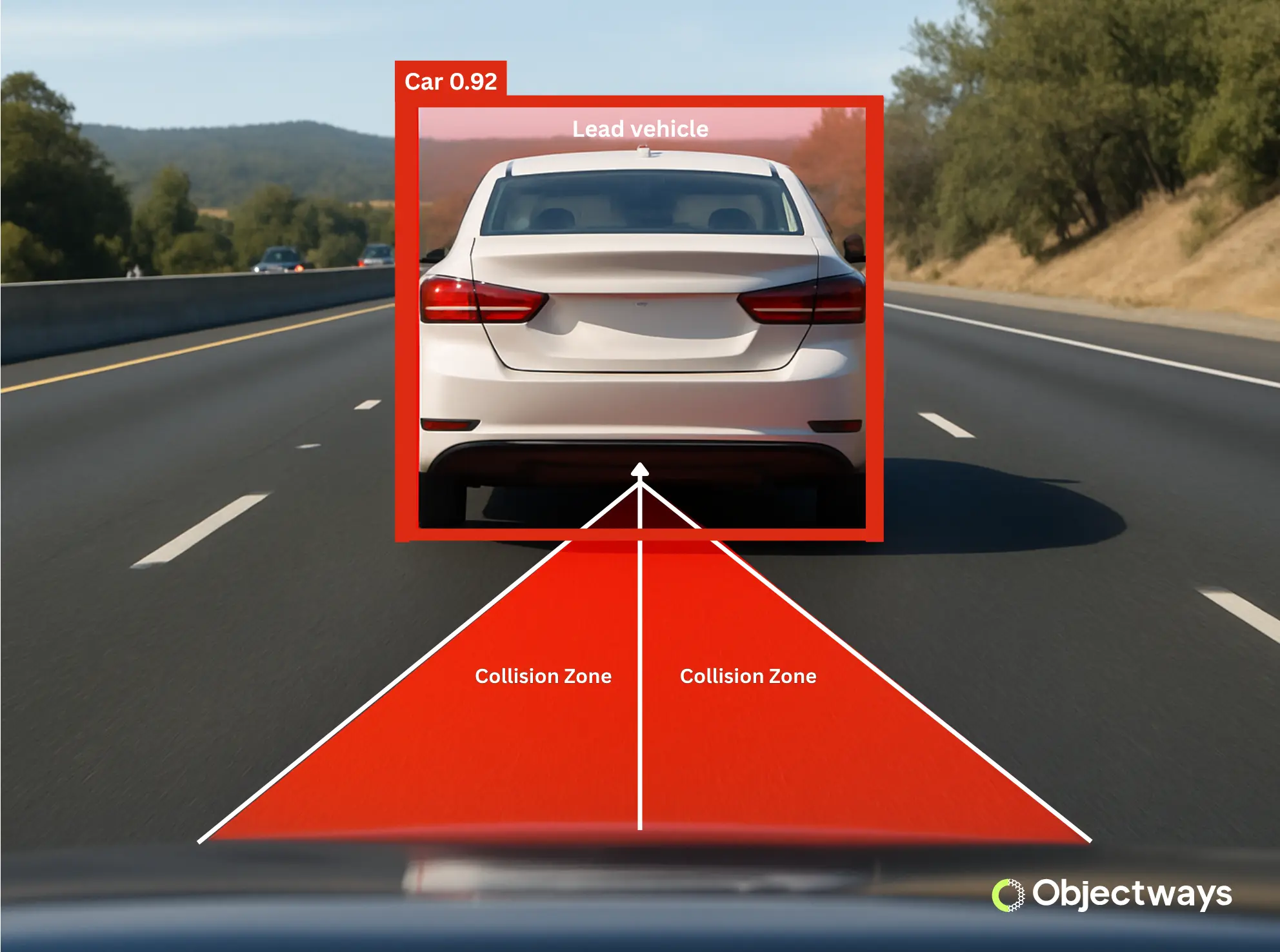

In particular, ADAS features rely on AI models trained with annotated data, such as lane markings, traffic signs, pedestrians, and vehicles. These data annotations help the system recognize objects, predict movements, and respond accurately. For example, forward collision warning systems learn from annotated data to detect vehicles or obstacles in the vehicle’s path, enabling the system to alert the driver before a potential collision occurs.

Forward collision warning systems use AI models to detect vehicles ahead.

In this article, we’ll peel back the layers of ADAS, explore why data annotation is crucial, and see how data plays a key role in helping these systems understand the road. Let’s get started!

Advanced driver assistance systems rely on multiple sensors to understand the road, and LiDAR sensors play a vital part in that setup. They work by sending out laser pulses and measuring how long they take to return. These measurements help create a detailed 3D view of the vehicle’s surroundings, often called a point cloud.
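
The time-of-flight idea described above can be sketched in a few lines of Python. This is a minimal illustration rather than how any particular sensor works internally: the function names `range_from_round_trip` and `to_point`, and the axis convention (x forward, y left, z up), are assumptions made for this example.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to the target in meters.

    The pulse travels to the object and back, so the one-way
    distance is half of the total path length.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def to_point(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one range reading plus beam angles into an (x, y, z) point.

    Assumed convention for this sketch: x forward, y left, z up.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    return (
        horizontal * math.cos(az),
        horizontal * math.sin(az),
        range_m * math.sin(el),
    )


# A pulse that returns after 200 nanoseconds hit something roughly 30 m away.
distance_m = range_from_round_trip(200e-9)
point = to_point(distance_m, azimuth_deg=15.0, elevation_deg=-2.0)
```

Repeating this conversion for every returned pulse in a sweep is what builds up the point cloud.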

Unlike regular cameras that produce flat, two-dimensional images, LiDAR data provides depth information. It can detect how far away objects are, their shape, and their exact position in space. This makes LiDAR data great for identifying road edges, elevation changes, and moving objects, especially when accuracy is essential.

LiDAR technology also performs well in low light. Because it emits its own laser pulses, it works at night just as it does during the day, and although heavy rain or fog can shorten its effective range, it remains a valuable complement to cameras and radar in an ADAS setup.

Beyond real-time sensing, LiDAR data can be annotated and used to train AI models. For instance, by labeling pedestrians, vehicles, road signs, and other objects within point cloud data, annotators can create rich training datasets. These annotated LiDAR datasets help models learn how to interpret 3D spaces, track motion, and respond to real-world driving scenarios with accuracy.
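
To make the idea of a point cloud label concrete, here is a rough sketch of a 3D bounding box (often called a cuboid) and a check for which points fall inside it. The `Cuboid` class and its field names are hypothetical; real annotation formats differ in their fields and coordinate conventions.

```python
import math
from dataclasses import dataclass


@dataclass
class Cuboid:
    """One labeled object in a point cloud (hypothetical schema)."""
    label: str
    cx: float       # box center, meters
    cy: float
    cz: float
    length: float   # extent along the box's own forward axis
    width: float
    height: float
    yaw: float      # rotation around the vertical axis, radians

    def contains(self, x: float, y: float, z: float) -> bool:
        """Return True if the point lies inside the rotated box."""
        dx, dy = x - self.cx, y - self.cy
        # Rotate the offset by -yaw to express it in the box's own frame.
        c, s = math.cos(-self.yaw), math.sin(-self.yaw)
        local_x = dx * c - dy * s
        local_y = dx * s + dy * c
        return (
            abs(local_x) <= self.length / 2
            and abs(local_y) <= self.width / 2
            and abs(z - self.cz) <= self.height / 2
        )


car = Cuboid("vehicle", cx=10.0, cy=0.0, cz=1.0,
             length=4.0, width=2.0, height=2.0, yaw=0.0)
cloud = [(11.0, 0.5, 1.0), (11.0, 1.5, 1.0), (30.0, 4.0, 0.2)]
points_on_car = [p for p in cloud if car.contains(*p)]
```

Counting the points inside each box is also a simple sanity check during review: a cuboid that contains almost no points is usually misplaced.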

An example of annotated 3D LiDAR data.

Taking things a step further, combining annotated LiDAR data with inputs from other sensors gives the system a clearer view of the road. This multi-sensor approach enhances the vehicle’s ability to understand its surroundings, making ADAS features safer and more effective.

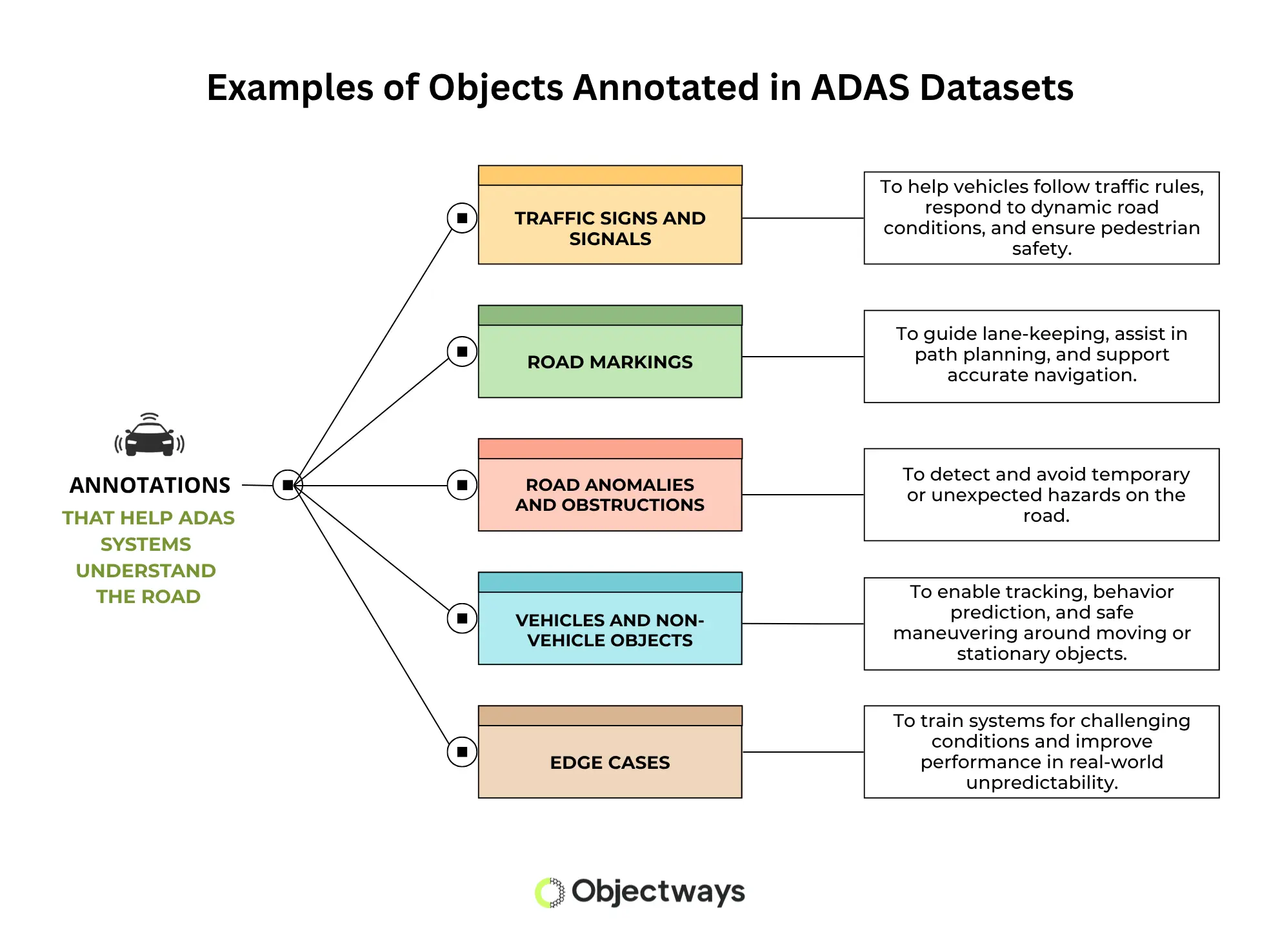

Generally, when we think of data annotation for AI models integrated into driver assistance systems, we think of vehicle detection. But that’s just one small piece of what these systems are actually designed to learn.

There’s a lot more happening when you drive than you might realize. Driving involves various visual cues: lane markings show where to stay, traffic signals tell you when it’s safe to move, and road signs give location-specific instructions. Pedestrians and cyclists can shift unexpectedly, and parked vehicles or roadside equipment can change how a system interprets its surroundings.

To understand these different objects on a road, each one needs to be labeled accurately. This process is known as data labeling. Annotators carefully label elements like lane markings, traffic signs, pedestrians, and vehicles in images, videos, or LiDAR data. This data makes it possible for AI models to understand the road in a way similar to how humans do.

This level of understanding is important for real-time performance. ADAS have to recognize what’s happening and respond to situations without any delays. The ability to do so depends heavily on the quality of training data. High-quality data and annotations make all the difference.

Here’s a glimpse of some of the most important objects annotated in real-world datasets, which are then used to train the AI models in advanced driver assistance systems:

Examples of Objects Annotated in ADAS Datasets.

Now that we’ve explored the various types of data annotations needed to develop ADAS, let’s see how this knowledge translates into real-world driving features.

Training driver assistance systems to detect lane markings involves collecting data and annotating it so that models can learn how roads look in different conditions. Lane markings aren’t always the same, and their visibility can change depending on things like the weather or time of day.

Let’s say an AI model is learning to detect a solid white line on a clear road. In controlled settings, this might be easy to label and analyze. However, in real driving conditions, lines can be dashed, faded, broken, or completely worn out.

Meanwhile, lighting tends to change with the time of day. Shadows from trees or buildings may fall across the road. Rain, glare, or fog can block parts of the view altogether.

The same is true for road features like merge points or edges. They are not always clearly marked, and temporary factors like debris or construction zones can interfere with visibility. Without examples of these situations, the AI model may not respond accurately.
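
One simple way to spot missing situations is to count how many labeled frames cover each condition. The sketch below assumes each frame carries a list of condition tags; the tag names, the `REQUIRED_CONDITIONS` list, and the `coverage_gaps` helper are all hypothetical and would depend on the project's own labeling guidelines.

```python
from collections import Counter

# Hypothetical condition tags a lane dataset might be expected to cover.
REQUIRED_CONDITIONS = [
    "clear", "rain", "fog", "glare", "shadow",
    "night", "faded_marking", "construction",
]


def coverage_gaps(frames, min_count):
    """Return the conditions that appear in fewer than min_count frames.

    Each frame is a dict with a "conditions" list of tags.
    """
    counts = Counter(tag for frame in frames for tag in frame["conditions"])
    return {
        condition: counts[condition]
        for condition in REQUIRED_CONDITIONS
        if counts[condition] < min_count
    }


frames = [
    {"id": "f1", "conditions": ["clear"]},
    {"id": "f2", "conditions": ["clear", "shadow"]},
    {"id": "f3", "conditions": ["rain", "night"]},
]
gaps = coverage_gaps(frames, min_count=2)
```

In this toy dataset only `clear` reaches the threshold, so every other condition is reported as a gap, which tells the team what to collect and annotate next.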

By including diverse real-world conditions in training data, lane departure warning systems can perform more reliably. For instance, in 2024, the U.S. Department of Transportation’s National Highway Traffic Safety Administration (NHTSA) found that vehicles with lane-keeping assist (LKA) systems were 24% less likely to be involved in fatal single-vehicle road-departure crashes compared to those without.

Labeled lane data helps ADAS give lane departure warnings and support safer driving.

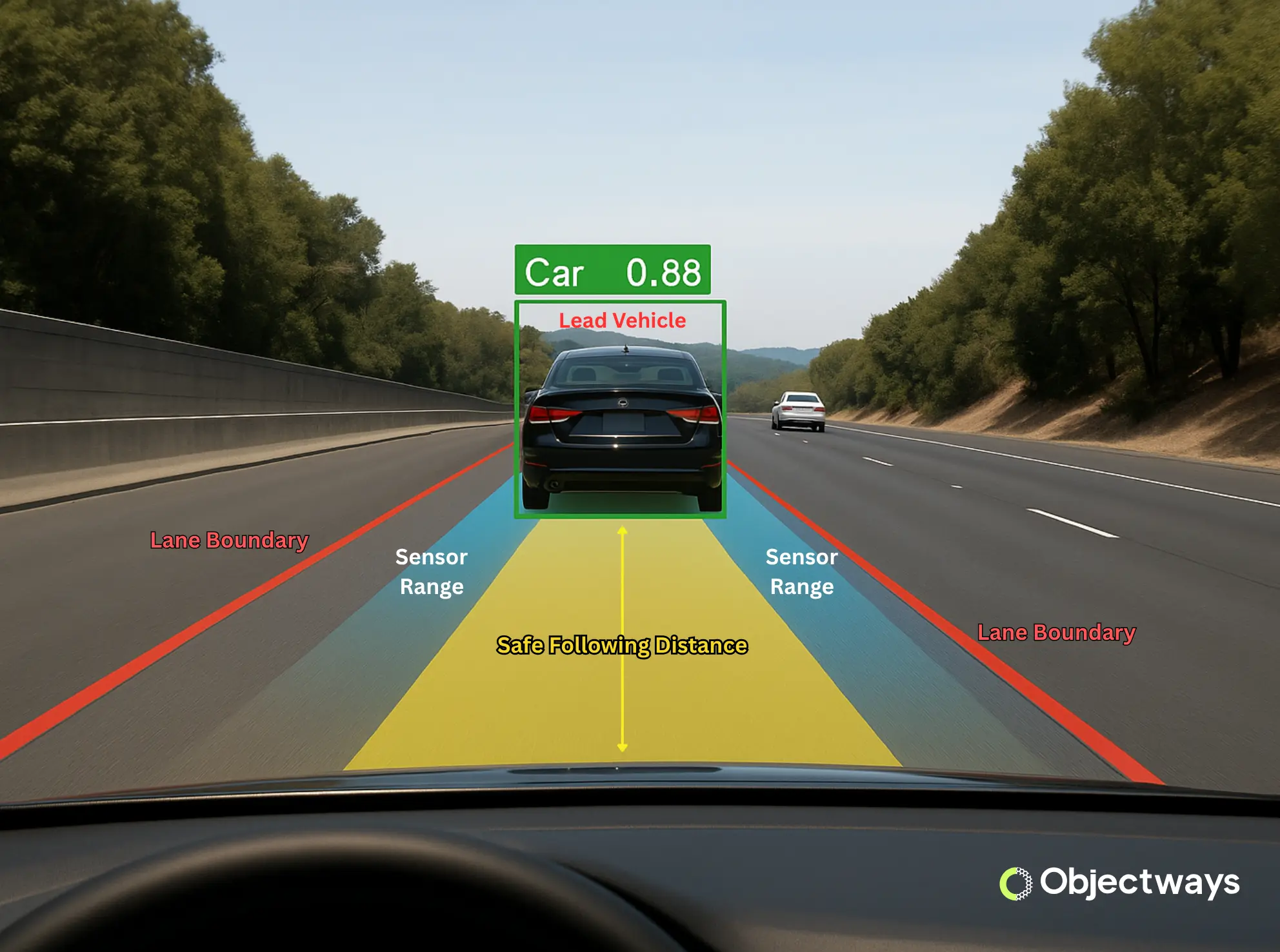

Traffic flow can change quickly, especially on highways. One moment, traffic is moving, and the next, vehicles begin to slow down. Adaptive cruise control is designed to respond to these changes by adjusting a vehicle’s speed and maintaining a safe distance from the car in front of it.

Unlike traditional cruise control, this system does more than hold a steady pace. It monitors the space between vehicles and adapts as traffic conditions shift. When another vehicle moves into the lane or the car ahead slows down, it reduces speed to restore a safe gap. When the road clears, it accelerates back to the set speed.
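
The behavior described above can be illustrated with a simple rule based on a time gap. This is a deliberately simplified sketch, not a production controller: the function name `acc_target_speed`, the 2-second time gap, and the 5-meter standstill margin are illustrative assumptions, and real systems use smoother control laws and perception outputs with uncertainty.

```python
def acc_target_speed(set_speed_mps, ego_speed_mps,
                     lead_distance_m=None, lead_speed_mps=None,
                     time_gap_s=2.0, min_gap_m=5.0):
    """Pick a target speed for a simplified adaptive cruise control.

    All speeds are in meters per second. lead_distance_m is None when
    no vehicle is detected ahead in the lane.
    """
    if lead_distance_m is None:
        # Road is clear: return to the driver's set speed.
        return set_speed_mps

    # The desired gap grows with speed (time gap) plus a fixed margin.
    desired_gap_m = min_gap_m + time_gap_s * ego_speed_mps

    if lead_distance_m < desired_gap_m:
        # Too close: follow the lead vehicle, never exceeding the set speed.
        return min(set_speed_mps, lead_speed_mps)

    # Gap is sufficient: cruise at the set speed.
    return set_speed_mps


# Ego at 30 m/s with a car 40 m ahead doing 25 m/s: slow to match it.
target = acc_target_speed(30.0, 30.0, lead_distance_m=40.0, lead_speed_mps=25.0)
```

The inputs to a rule like this (whether a lead vehicle exists, how far away it is, and how fast it is moving) are exactly what the perception models have to estimate.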

For this to work reliably, the AI models being used must be trained on various driving scenarios. The training data should be labeled with lead vehicle positions, lane alignment, and safe following distances. It must also include common scenarios such as sudden braking, merging traffic, or lane changes in congested areas.

When the training data includes these situations, the system is better prepared to make decisions under different road conditions. Interestingly, a national report published in China found that if adaptive cruise control were installed in all vehicles, traffic fatalities could be reduced by 5.48% and serious injuries by 4.91%.

Adaptive cruise control depends on models trained on annotated data.

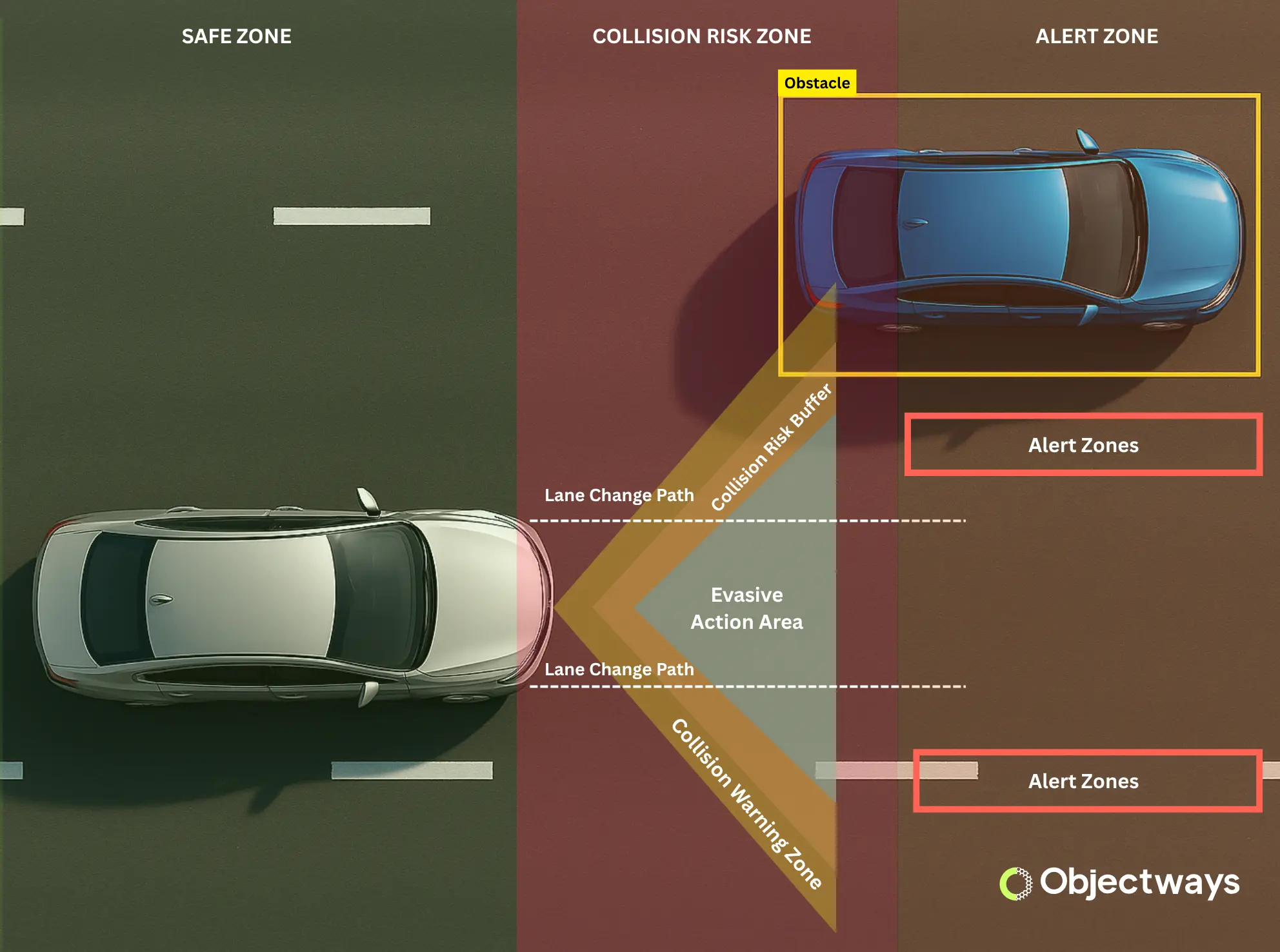

Similarly, collision avoidance systems are designed to help prevent accidents by detecting potential risks and responding in time. They continuously track nearby objects such as vehicles, pedestrians, cyclists, cones, and parked or stalled cars. If a potential threat is detected, the system can either warn the driver or apply the brakes to avoid a collision.

Two key systems used here are forward collision warnings and automatic emergency braking. The first alerts the driver when the vehicle is approaching something too quickly. The second takes action if there’s no response from the driver.
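
A common way to reason about "approaching too quickly" is time to collision (TTC): the current gap divided by the closing speed. The sketch below shows how a warning stage and a braking stage could be layered on top of it. The threshold values and function names are illustrative assumptions, not figures from any specific vehicle or standard.

```python
import math


def time_to_collision(gap_m: float, ego_speed_mps: float,
                      lead_speed_mps: float) -> float:
    """Seconds until contact if both speeds stay constant."""
    closing_speed = ego_speed_mps - lead_speed_mps
    if closing_speed <= 0:
        # The gap is steady or growing, so no collision is predicted.
        return math.inf
    return gap_m / closing_speed


def collision_response(ttc_s: float, driver_braking: bool,
                       warn_ttc_s: float = 2.7,
                       brake_ttc_s: float = 1.2) -> str:
    """Return "none", "warn", or "brake" (thresholds are illustrative)."""
    if ttc_s <= brake_ttc_s and not driver_braking:
        # Driver has not reacted in time: automatic emergency braking.
        return "brake"
    if ttc_s <= warn_ttc_s:
        # Forward collision warning: alert the driver.
        return "warn"
    return "none"


ttc = time_to_collision(gap_m=30.0, ego_speed_mps=25.0, lead_speed_mps=10.0)
action = collision_response(ttc, driver_braking=False)
```

Here the gap closes at 15 m/s, giving a TTC of 2 seconds, so the sketch issues a warning and leaves braking to the driver unless the TTC keeps shrinking.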

Collision avoidance systems have made a clear impact on real-world driving. Studies show that trucks with forward collision warning have had 22% fewer crashes. When forward collision warning was paired with emergency braking, rear-end collisions dropped by more than 40%.

Collision avoidance systems can detect and prevent crashes.

Traffic signs are there to help drivers make everyday decisions on the road, like when to stop, what speed to drive, and which way to turn. Traffic sign recognition systems work by identifying these signs based on their shape, color, and the text or symbols they show. These systems process what they see in real-time, allowing cars to react to signs as they appear on the road.

For the system to recognize signs accurately, it needs to be trained with annotated data. You can think of it like how we use context clues to figure out a word in a sentence. Each sign in the data must be clearly labeled with its type (like warning or restriction), along with details such as size, color, and text. To work in actual driving conditions, the data should also include variations like faded paint, poor lighting, glare, or partial obstructions.
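
As a rough sketch of what such a label might look like in practice, the record below captures the sign type, position, color, text, and any difficult conditions, along with a basic quality check. The schema, category names, and condition tags are hypothetical and would follow whatever taxonomy a given project defines.

```python
from dataclasses import dataclass, field

# Hypothetical taxonomy; a real project defines its own.
SIGN_CATEGORIES = {"warning", "restriction", "information"}
CONDITION_TAGS = {"faded", "low_light", "glare", "occluded"}


@dataclass
class SignAnnotation:
    image_id: str
    category: str                          # e.g. "warning" or "restriction"
    bbox_xywh: tuple[int, int, int, int]   # pixels: left, top, width, height
    color: str
    text: str = ""
    conditions: set[str] = field(default_factory=set)

    def problems(self) -> list[str]:
        """Return a list of issues found in this label (empty if none)."""
        issues = []
        if self.category not in SIGN_CATEGORIES:
            issues.append(f"unknown category: {self.category}")
        _, _, width, height = self.bbox_xywh
        if width <= 0 or height <= 0:
            issues.append("bounding box has no area")
        unknown = self.conditions - CONDITION_TAGS
        if unknown:
            issues.append(f"unknown condition tags: {sorted(unknown)}")
        return issues


stop_sign = SignAnnotation(
    image_id="frame_0001",
    category="restriction",
    bbox_xywh=(412, 96, 48, 48),
    color="red",
    text="STOP",
    conditions={"glare"},
)
issues = stop_sign.problems()
```

Automated checks like `problems()` catch mechanical errors early, which leaves human reviewers free to focus on harder judgment calls such as partially hidden or damaged signs.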

The accuracy of traffic sign recognition depends on correctly labeled signs.

When you first start an AI project, data sourcing and labeling may seem straightforward. However, once you dig deeper, you’ll realize just how much detail and thought go into these initial, critical stages of the project. Turning to an expert is one of the best ways to get ahead of the curve.

At Objectways, we provide the data expertise needed to train ADAS models safely and effectively. From 2D camera frames to 3D LiDAR point clouds, our experienced annotators can label all the necessary road elements with precision and adapt workflows to match the feature being developed.

Here’s a quick look at how we support ADAS programs end-to-end:

Driver-assist systems are changing how vehicles perceive and respond to the world around them. As technology advances, these systems must handle more scenarios, adapt faster, and make decisions more confidently.

High-quality annotation plays a central role in making this possible, and Objectways can be your trusted partner in providing the expertise and precision needed to bring your ADAS projects to life. Looking to build or improve your ADAS workflows? Reach out to us, and let’s shape the future of intelligent mobility together.