When you learn to make tea or cross-stitch, you usually learn by doing and by seeing the task up close. The details you pick up help turn simple instructions into a skill you can repeat with confidence.

Interestingly, when it comes to robots learning similar tasks, the process isn’t all that different. A robot also needs to observe tasks from a viewpoint it can learn from.

Typically, when we think about data used to train robots, we think of footage captured using third-person cameras. While this type of data shows what is happening in a scene, it often doesn’t capture how actions are actually performed.

Egocentric data bridges this gap. It is visual information captured from a first-person perspective, with the camera placed on the acting agent, such as a human or a robot. This egocentric perception gives robots the detail they need to learn real-world skills rather than just recognize what is in a scene.

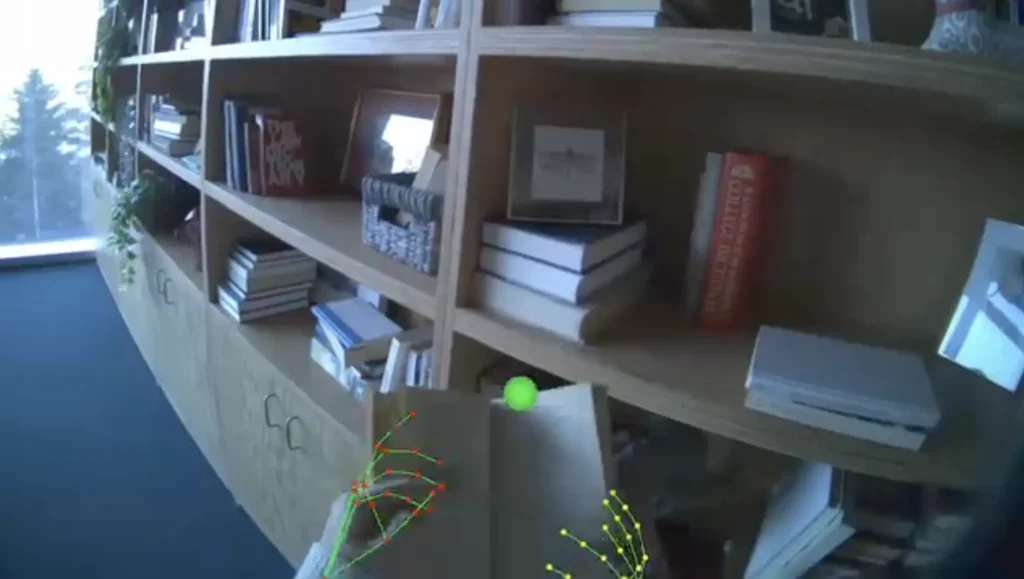

An Example of an Egocentric Dataset (Source)

This is exactly why egocentric data is receiving more attention today. First-person datasets for robotics have existed in research for years, but as robots are increasingly expected to operate in real-world environments, the limits of third-person footage have become more obvious.

In this article, we’ll explore what egocentric data is, how egocentric data collection works, and why first-person datasets are making a difference in robotics and embodied AI. Let’s get started!

Egocentric data is information captured from a first-person point of view. To collect this data, cameras or sensors are placed on a person or a robot, so the recording shows exactly what the agent sees while performing a task.

This perspective makes it easier to understand how tasks are actually carried out. Egocentric data captures details such as hand movements, object interactions, shifts in attention, and the order in which actions happen. These details are often missed by third-person cameras that observe a scene from the outside.

An Egocentric Point of View Vs. Third-Person Point of View

An egocentric dataset, in turn, is a structured collection of first-person recordings gathered across many tasks, environments, and participants. It brings these close-up views together so models can learn patterns of action and interaction from real-world behavior.
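To make "structured collection" more concrete, here is a minimal sketch of how a single clip in such a dataset might be described, plus a quick check of how many tasks, environments, and participants a set of clips covers. The `EgocentricClip` class, its fields, and the `coverage` helper are hypothetical illustrations, not the schema of any particular dataset.

```python
from dataclasses import dataclass, field


@dataclass
class EgocentricClip:
    """One first-person recording in a hypothetical egocentric dataset."""
    clip_id: str
    participant_id: str
    environment: str   # e.g. "kitchen", "workshop"
    task: str          # e.g. "make tea"
    video_path: str
    duration_s: float
    # Extra synchronized signals recorded alongside the video (assumed names).
    modalities: list[str] = field(default_factory=lambda: ["rgb"])


def coverage(clips: list[EgocentricClip]) -> dict[str, int]:
    """Count the distinct tasks, environments, and participants in a set of clips."""
    return {
        "tasks": len({c.task for c in clips}),
        "environments": len({c.environment for c in clips}),
        "participants": len({c.participant_id for c in clips}),
    }


clips = [
    EgocentricClip("c1", "p1", "kitchen", "make tea", "c1.mp4", 42.0),
    EgocentricClip("c2", "p2", "kitchen", "cross-stitch", "c2.mp4", 120.0, ["rgb", "imu"]),
]
print(coverage(clips))  # {'tasks': 2, 'environments': 1, 'participants': 2}
```

Tracking coverage this way makes it easy to spot a dataset that has many hours of footage but only a handful of settings or people.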

So, how is egocentric data collected? Instead of filming from the outside, egocentric data collection captures tasks from the point of view of the agent performing them. This involves placing cameras or sensors directly on a person or a robot so the recording shows what they see and how they interact with objects while doing a task.

This approach keeps the focus on how actions naturally happen. Egocentric data is often collected using head- or chest-mounted cameras worn by people during everyday activities such as cooking, assembly, or navigation.

A Look at How Egocentric Data is Collected (Source)

For robots, data is typically captured by onboard cameras while a human operator teleoperates the robot, so the recordings line up with the robot’s own physical movements. Collection sessions can also be structured around specific tasks to produce clearly defined action sequences.

Along with video, egocentric datasets may include additional signals like depth, motion data, hand or body pose, and sometimes audio. These signals add context and make it easier to understand actions.
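Because these extra signals are usually recorded at different rates (for example, an IMU often samples much faster than the video frame rate), they have to be aligned in time before they can be used together. Below is a minimal sketch of nearest-timestamp alignment, assuming every stream has sorted timestamps in seconds on a shared clock. The function names and the 20 ms tolerance are hypothetical choices, not part of any specific toolkit.

```python
from bisect import bisect_left


def nearest_index(timestamps: list[float], t: float) -> int:
    """Index of the timestamp closest to t; timestamps must be sorted ascending."""
    if not timestamps:
        raise ValueError("timestamps must be non-empty")
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # t lies between two samples: keep whichever neighbour is closer.
    before, after = timestamps[i - 1], timestamps[i]
    return i if after - t < t - before else i - 1


def align(frame_times, sensor_times, sensor_values, max_gap=0.02):
    """Pair each video frame with the nearest sensor sample, or None if none is close enough."""
    aligned = []
    for t in frame_times:
        j = nearest_index(sensor_times, t)
        aligned.append(sensor_values[j] if abs(sensor_times[j] - t) <= max_gap else None)
    return aligned


frame_times = [0.0, 0.033, 0.066, 0.5]
imu_times = [0.0, 0.01, 0.02, 0.03, 0.04, 0.05, 0.06, 0.07]
imu_values = ["a", "b", "c", "d", "e", "f", "g", "h"]
print(align(frame_times, imu_times, imu_values))  # ['a', 'd', 'h', None]
```

Returning `None` instead of silently reusing a distant sample keeps gaps in the sensor stream visible during later quality checks.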

How does capturing egocentric data translate into understanding? The key is that first-person video shows how actions actually happen, not just what appears in a scene. Egocentric video understanding focuses on the process of performing a task from the actor’s point of view.

Here is a glimpse of what egocentric video helps systems understand:

Egocentric action recognition, for example, focuses on identifying which action is happening at any given moment, such as pouring, cutting, or tightening, from a first-person view. This is difficult because the camera moves with the actor and hands often hide the objects being handled, but it is essential for robots that learn from human demonstrations.
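Action recognition models often produce one label per video frame. A common post-processing step collapses those labels into timed segments that are easier to review or to hand to a robot learning pipeline. This sketch assumes a fixed frame rate and uses hypothetical label names.

```python
from itertools import groupby


def frames_to_segments(labels, fps=30.0):
    """Collapse per-frame action labels into (action, start_s, end_s) segments."""
    segments = []
    frame = 0
    # groupby yields one group per run of identical consecutive labels.
    for action, run in groupby(labels):
        length = sum(1 for _ in run)
        segments.append((action, frame / fps, (frame + length) / fps))
        frame += length
    return segments


labels = ["reach"] * 3 + ["pour"] * 6 + ["idle"] * 3
print(frames_to_segments(labels, fps=3.0))
# [('reach', 0.0, 1.0), ('pour', 1.0, 3.0), ('idle', 3.0, 4.0)]
```

Real per-frame predictions tend to flicker between labels, so in practice a smoothing step is usually applied before segmenting.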

Next, let’s look at how egocentric datasets are being applied in real-world robotics, from large-scale first-person data capture to modeling human movement and behavior.

Egocentric AI isn’t just a theoretical concept. It is already being explored in real-world settings, and Meta’s Project Aria is a great example of this shift. Project Aria focuses on collecting high-quality first-person data to support research in machine perception, contextual AI, and robotics.

At the core of Project Aria are next-generation egocentric research glasses designed for large-scale, real-world data capture. The latest version, Aria Gen 2, combines multiple sensors, including vision cameras, eye and hand tracking, IMUs, spatial audio, and physiological sensing. These sensors work together to capture synchronized first-person data covering vision, movement, audio, and attention.

Tracking Hand Movements with the Aria Gen 2 Glasses (Source)

By recording data in everyday environments, Project Aria captures how people naturally move, look, and interact with objects. This includes fine-grained details such as where attention is directed, how hands manipulate objects, and how the body navigates through space. These signals are difficult to capture with third-person cameras but are essential for understanding real-world behavior.

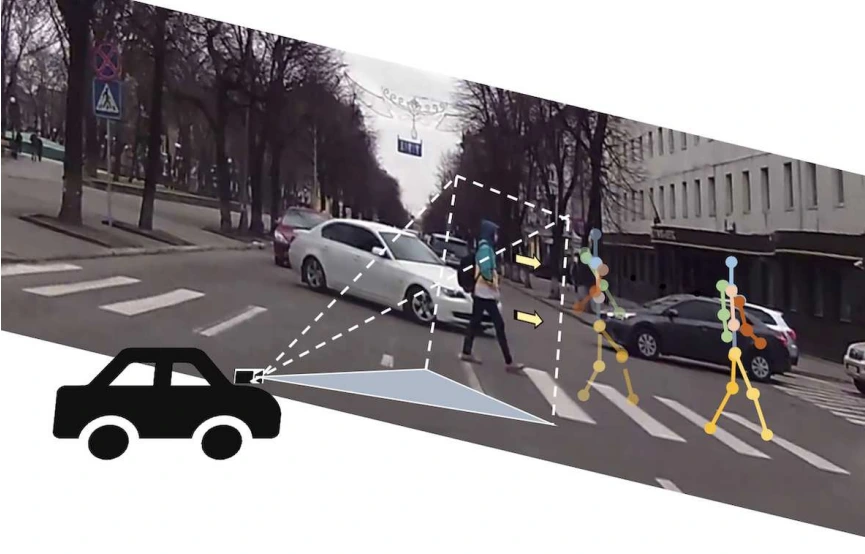

Egocentric data is often associated with a person wearing a camera, but in many systems, the acting agent is a vehicle or a robot. In autonomous driving, for example, egocentric data comes from cameras mounted on the vehicle itself, capturing the world from the car’s point of view as it moves through traffic and around people.

Egocentric Data of a Pedestrian Captured by Cameras on a Vehicle (Source)

This first-person perspective is especially useful for predicting human motion. From an egocentric view, a system can learn how pedestrians, cyclists, and other road users move relative to its own position and speed.
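Reasoning "relative to its own position and speed" means working in the vehicle’s own coordinate frame. The sketch below converts a pedestrian’s position and velocity from a shared world frame (for example, the output of a tracker) into that frame. It assumes 2D coordinates, a known vehicle heading in radians, and a convention of x pointing forward and y pointing to the vehicle’s left. The function names are illustrative and do not come from a specific library.

```python
import math


def _rotate_into_ego(dx, dy, heading_rad):
    """Rotate a world-frame vector by -heading so the vehicle's heading becomes +x."""
    c, s = math.cos(heading_rad), math.sin(heading_rad)
    return (c * dx + s * dy, -s * dx + c * dy)


def position_in_ego_frame(ped_xy, ego_xy, ego_heading_rad):
    """Pedestrian position relative to the vehicle (x forward, y left)."""
    return _rotate_into_ego(ped_xy[0] - ego_xy[0], ped_xy[1] - ego_xy[1], ego_heading_rad)


def velocity_in_ego_frame(ped_v, ego_v, ego_heading_rad):
    """Pedestrian velocity relative to the moving vehicle, expressed in the vehicle's frame."""
    return _rotate_into_ego(ped_v[0] - ego_v[0], ped_v[1] - ego_v[1], ego_heading_rad)


# Vehicle at the origin facing world +y and driving at 10 m/s.
# A pedestrian 10 m ahead walks toward the vehicle's left at 1 m/s.
pos = position_in_ego_frame((0.0, 10.0), (0.0, 0.0), math.pi / 2)
vel = velocity_in_ego_frame((-1.0, 0.0), (0.0, 10.0), math.pi / 2)
print(pos, vel)  # approximately (10, 0) and (-10, 1): closing at 10 m/s, drifting left
```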

Models trained on egocentric video can capture both overall movement, such as where someone is likely to go next, and finer details like body posture and motion. By learning from these cues, systems can anticipate human behavior even in complex, crowded environments.
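Learned motion predictors are commonly compared against a simple constant-velocity baseline, which assumes a person keeps moving exactly as they did between the last two observations. A minimal sketch, assuming evenly sampled 2D positions in the vehicle’s frame; the function name is hypothetical.

```python
def constant_velocity_forecast(track, horizon_steps, dt):
    """Extrapolate a 2D track assuming the last observed velocity stays constant.

    track: list of (x, y) positions sampled every dt seconds (at least two).
    Returns horizon_steps future positions, one per dt.
    """
    if len(track) < 2:
        raise ValueError("need at least two observed positions")
    (x0, y0), (x1, y1) = track[-2], track[-1]
    vx, vy = (x1 - x0) / dt, (y1 - y0) / dt
    return [(x1 + vx * dt * k, y1 + vy * dt * k) for k in range(1, horizon_steps + 1)]


track = [(0.0, 0.0), (1.0, 0.5)]
print(constant_velocity_forecast(track, horizon_steps=2, dt=0.5))  # [(2.0, 1.0), (3.0, 1.5)]
```

A baseline like this ignores posture, intent, and interactions with other people, which is exactly the kind of information a model trained on egocentric video is meant to add.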

Interest in egocentric data is likely to grow sharply in 2026. As more robotics systems move from research labs into real-world environments, there is a growing need to understand behavior over time, across longer tasks, and within more complex interactions.

A Robot Doing the Laundry (Source)

This shift is also redefining how the research community approaches egocentric vision. Shared datasets, benchmarks, and focused workshops are making it easier to study challenges such as action anticipation, long-horizon task understanding, and human motion prediction from a first-person perspective.

At the same time, advances in data collection are enabling larger and more diverse egocentric datasets. Improved sensors and recording setups make it possible to capture realistic behavior across different environments and activities, helping bridge the gap between controlled experiments and real-world deployment.

Even though egocentric data collection is gaining attention, it still isn’t an easy or straightforward task. Capturing data from a first-person perspective introduces challenges that are less common with third-person setups. Natural movement can lead to motion blur, hands and objects often block the camera’s view, and the viewpoint changes constantly as tasks unfold.
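Some of these problems can be flagged automatically during quality control. One widely used heuristic for motion blur is the variance of the Laplacian: sharp frames have strong edges and therefore a high variance, while blurred frames do not. The sketch below works on a small grayscale frame stored as nested lists to stay dependency-free; in practice you would use an image library. The threshold of 100 is an assumption that has to be tuned for each camera and resolution.

```python
def laplacian_variance(gray):
    """Variance of the 4-neighbour Laplacian over interior pixels.

    gray: 2D list of grayscale intensities, at least 3x3.
    """
    h, w = len(gray), len(gray[0])
    if h < 3 or w < 3:
        raise ValueError("frame must be at least 3x3")
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            lap = (gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1] + gray[y][x + 1]
                   - 4 * gray[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def is_blurry(gray, threshold=100.0):
    """Flag a frame as blurry when its Laplacian variance falls below the (assumed) threshold."""
    return laplacian_variance(gray) < threshold


flat = [[128] * 5 for _ in range(5)]  # no edges at all
checker = [[255 if (x + y) % 2 == 0 else 0 for x in range(5)] for y in range(5)]  # strong edges
print(is_blurry(flat), is_blurry(checker))  # True False
```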

Data annotation adds another layer of difficulty. Egocentric data involves fine-grained actions, subtle transitions, and overlapping movements that can be hard to label consistently. Without a clear plan, these issues can quickly reduce the quality and usefulness of a dataset.
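One standard way to check whether labels are consistent is to have two annotators label the same segments and compute Cohen’s kappa, which corrects their raw agreement for the agreement expected by chance. A minimal sketch, assuming each annotator assigns one label per segment; the label names are hypothetical.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa between two annotators' labels for the same segments."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    # Fraction of segments where the annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected if each annotator labeled at random with their own label frequencies.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


a = ["pour", "pour", "cut", "cut"]
b = ["pour", "cut", "cut", "cut"]
print(cohens_kappa(a, b))  # 0.5
```

A kappa of 1 means perfect agreement and 0 means agreement no better than chance, so a low score on fine-grained actions is an early sign that label definitions or annotation guidelines need tightening.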

Because of this, egocentric data collection can be difficult to scale without the right expertise. Choices around sensor placement, task design, annotation strategy, and quality control all have a direct impact on the final dataset.

If you are looking to build high-quality egocentric datasets for robotics or embodied AI, working with a team that understands the unique challenges of first-person data can make the process far more efficient and reliable.

At Objectways, egocentric data collection is handled through a structured, end-to-end approach that turns raw first-person recordings into high-quality, model-ready datasets. This helps teams avoid common pitfalls and move more efficiently from data collection to real-world deployment.

Have an embodied AI project in mind? Connect with Objectways to explore how we can help with egocentric data collection.

Egocentric data is reimagining how robots and embodied AI systems learn to operate in the real world. By capturing tasks from a first-person perspective, these systems gain access to the fine-grained details needed to move beyond scene recognition and toward real skill learning and interaction.

As robotics continues to move out of the lab, high-quality egocentric datasets will become even more important. Collecting this data well requires careful planning, the right capture setups, and thoughtful annotation to ensure it can scale and generalize.

If you’re planning an egocentric data collection project, working with a partner like Objectways can make all the difference. Check out some examples of egocentric datasets we’ve collected to see how high-quality first-person data is captured in real-world settings.