Today, cutting-edge robots increasingly learn through experience rather than explicit instruction. Instead of improving at a task by following predefined rules, a robot improves by observing its environment, making mistakes, and adjusting its behavior over time.

Planetary exploration robots such as NASA’s Perseverance rover are a good example. Before operating on the surface of Mars, the rover’s navigation system was trained and tested extensively in simulated environments that replicated terrain, lighting, and sensor conditions. These simulations let the navigation system practice obstacle avoidance and path planning without the risk of physical failure.

The Perseverance Rover (Source)

However, once deployed, the rover encountered unexpected surface textures, dust, and lighting variations that simulations couldn’t fully capture. Real-world data collected during the mission became essential for refining perception and navigation models.

This pattern is common across modern robotics systems. Simulations enable robots to learn safely and at scale, while real-world data exposes them to the unpredictability of physical environments. Relying on only one source of data often leads to fragile behavior when conditions change.

As robotics systems expand into warehouses, hospitals, roads, and remote environments, choosing the right balance between simulated and real-world data has become a central design decision in robotics.

In this article, we will explore synthetic data vs real data for robotics and how each shapes effective robotics training strategies. Let’s get started!

Synthetic data in robotics refers to training data that is generated within simulated or virtual environments rather than collected directly from physical robots operating in the real world. These environments are designed to model how robots perceive, move, and interact with their surroundings, including objects, terrain, lighting, and sensor behavior.

These simulations act like flight simulators for robots. They allow systems to practice tasks repeatedly without risking hardware damage or safety issues. This approach has made simulated data for robotics a core part of AI development workflows.

Common forms of robotics simulation data come from physics-based simulators that model motion, force, and collisions within controlled environments. These synthetic environments can represent settings such as warehouses, streets, or factory floors, where conditions like lighting, object placement, and movement can be adjusted with precision.

Simulations also include virtual sensors and cameras that mimic real hardware. These tools generate image, depth, and lidar data that closely resemble real sensor outputs.
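To make the idea of a virtual sensor more concrete, here is a minimal sketch of a simulated 2D lidar. It assumes a flat world made of circular obstacles and independent Gaussian range noise; the function name, obstacle format, and noise model are illustrative choices, not the API of any particular simulator. Production simulators model much more (beam divergence, material reflectance, motion distortion), but casting rays against scene geometry and corrupting the result with noise is the basic idea.

```python
import math
import random

def simulate_lidar(pose, obstacles, num_beams=8, max_range=10.0, noise_std=0.02, rng=None):
    """Return one simulated 2D lidar scan (illustrative sketch, hypothetical API).

    pose: (x, y, heading_radians) of the sensor.
    obstacles: list of (cx, cy, radius) circles.
    Each beam reports the distance to the nearest circle it hits, clipped to
    max_range, plus zero-mean Gaussian noise (an assumed noise model).
    """
    rng = rng or random.Random()
    x, y, heading = pose
    scan = []
    for i in range(num_beams):
        angle = heading + 2 * math.pi * i / num_beams
        dx, dy = math.cos(angle), math.sin(angle)  # unit direction of this beam
        nearest = max_range
        for cx, cy, r in obstacles:
            # Solve |p + t*d - c|^2 = r^2 for the smallest t >= 0.
            fx, fy = x - cx, y - cy
            b = fx * dx + fy * dy
            c = fx * fx + fy * fy - r * r
            disc = b * b - c
            if disc < 0:
                continue  # beam misses this circle entirely
            t = -b - math.sqrt(disc)
            if t < 0:
                t = -b + math.sqrt(disc)  # sensor is inside the circle
            if 0 <= t < nearest:
                nearest = t
        noisy = nearest + rng.gauss(0.0, noise_std)
        scan.append(min(max(noisy, 0.0), max_range))  # keep readings physically valid
    return scan
```

For example, `simulate_lidar((0.0, 0.0, 0.0), [(5.0, 0.0, 1.0)], noise_std=0.0)` returns 4.0 for the forward beam and the 10.0 m maximum for beams that miss the obstacle.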

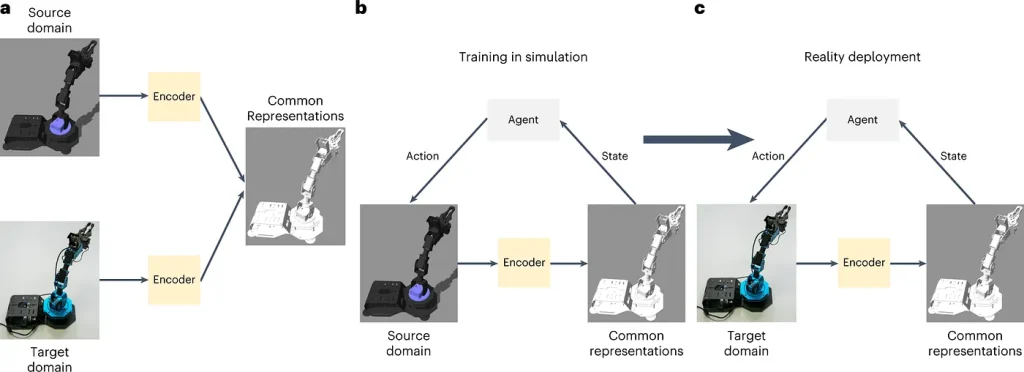

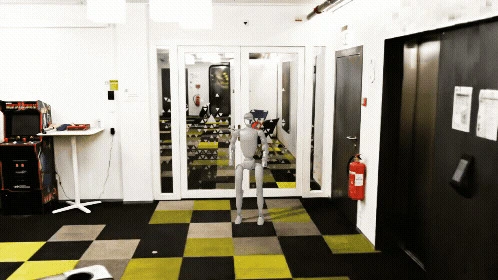

Training Robots in Simulation Using Synthetic Data (Source)

Simulations are commonly used early in training, so robots can learn basic behaviors before interacting with real hardware. Simulation also supports controlled experiments, where variables can be adjusted one at a time. Reinforcement learning relies heavily on simulation because agents must repeat actions thousands of times to learn effective policies.

These types of simulations have become central to robotics research because real-world data collection can be slow, expensive, and limited by safety constraints. Their ability to scale quickly and support safe experimentation makes them a natural starting point in robotics development.

Here are a few advantages of using synthetic data for robotic projects:

Despite its benefits, synthetic data comes with limitations that impact real-world performance. Here are a few important considerations to keep in mind:

Real-world data in robotics refers to training data collected directly from robots operating in physical environments. This data is collected by onboard sensors as robots perform tasks in real settings, capturing how systems perceive, move, and respond under natural conditions.

This type of robotics training data reflects how autonomous systems actually perceive and interact with the world. It includes variations in lighting, motion, surface properties, and environmental changes that cannot be fully reproduced in simulation.

Common forms of real-world robotics datasets include egocentric robot camera data, where cameras mounted on robots record first-person visual input during operation. These views closely match what perception models see during deployment.

Teleoperation data is another important source. Here, human operators control robots remotely, producing demonstrations that guide learning in complex or safety-critical tasks. Sensor data from deployed robots also plays a major role. This includes visual data, depth measurements, force feedback, and motion signals collected during real-world use.
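One common way to learn from teleoperation demonstrations is behavior cloning: fitting a policy that maps the observed state to the action the human operator chose. The sketch below assumes a hypothetical one-dimensional log (lateral offset from a lane centre and the operator's steering command) and fits a linear policy by ordinary least squares. Real systems typically use neural networks over camera and proprioceptive inputs, so treat this only as an illustration of the idea.

```python
def fit_linear_policy(demos):
    """Behavior cloning with a 1D linear policy: action ≈ w * state + b.

    demos: list of (state, action) pairs recorded during teleoperation.
    Fits w and b by ordinary least squares.
    """
    n = len(demos)
    mean_s = sum(s for s, _ in demos) / n
    mean_a = sum(a for _, a in demos) / n
    cov = sum((s - mean_s) * (a - mean_a) for s, a in demos)
    var = sum((s - mean_s) ** 2 for s, _ in demos)
    if var == 0:
        raise ValueError("demonstrations must cover more than one state")
    w = cov / var
    b = mean_a - w * mean_s
    return w, b

# Hypothetical teleoperation log: (lateral offset in m, steering command in rad).
demos = [(-0.4, 0.2), (-0.2, 0.1), (0.0, 0.0), (0.2, -0.1), (0.4, -0.2)]
w, b = fit_linear_policy(demos)
steer = w * 0.3 + b  # cloned policy's command for an unseen offset of 0.3 m
```

Here the operator consistently steered back toward the centre, so the cloned policy learns a negative gain (about -0.5) and generalizes that correction to offsets it never saw.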

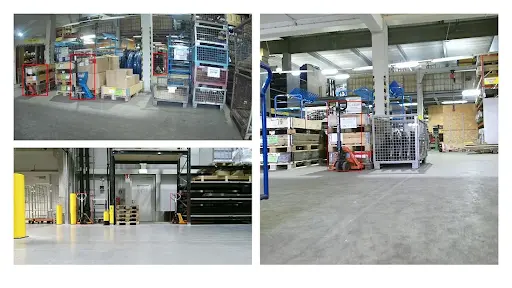

Real-world data for robotics is gathered through human demonstrations, teleoperated systems, and long-term deployments in physical environments such as factories, warehouses, and outdoor spaces.

Real-World Warehouse Environments Used For Robotics Training (Source)

In the synthetic data vs real data discussion, real-world data helps align models with how systems actually behave in real environments, reducing unexpected failures during deployment.

Here are a few pros of using real-world data for robotics:

Real-world data also introduces practical and operational challenges that teams have to plan for early in the development process. Here are a few key disadvantages to take a look at:

When comparing synthetic data vs real data for robotics, each data type solves a different part of the robotics training problem and supports systems at different stages of development.

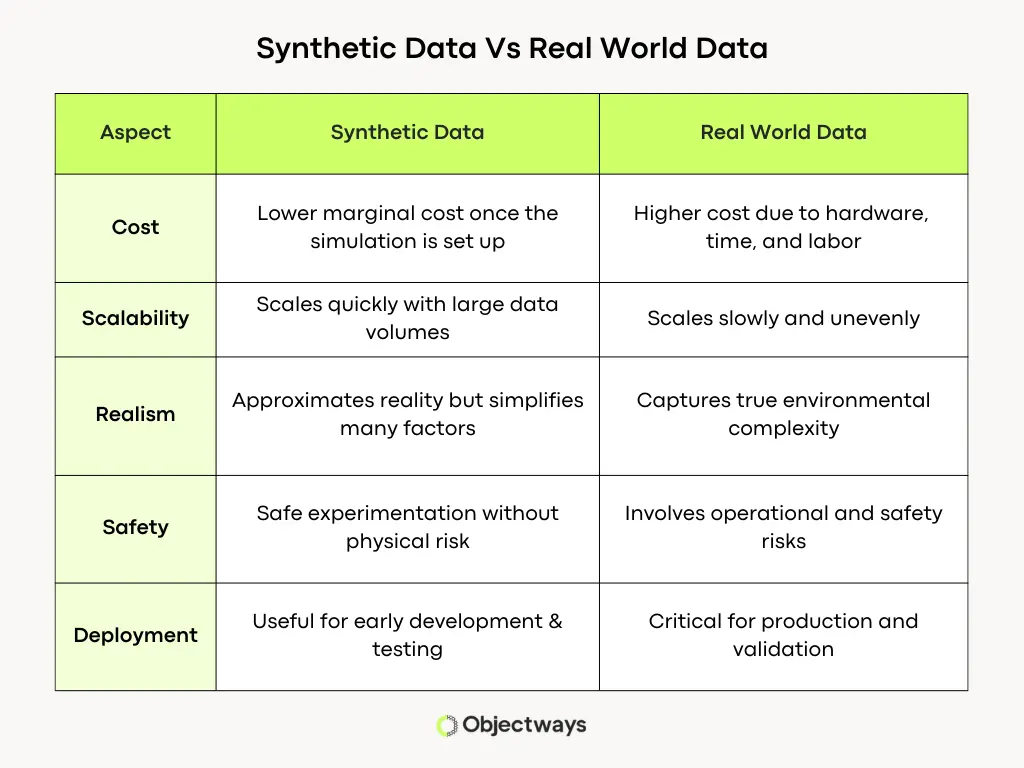

Choosing between synthetic data and real-world data often comes down to trade-offs between speed, realism, safety, and deployment readiness. Looking at these factors side by side makes the differences clearer.

Understanding the Differences Between Synthetic and Real-World Data for Robotics

The table above highlights how simulated and real-world data for robotics compare across the dimensions that matter most in production systems.

Synthetic data works best during early stages of robotics development, when teams need speed, safety, and flexibility. It allows systems to explore a wide range of behaviors in controlled environments before interacting with physical hardware and real-world constraints.

Next, let’s take a look at a few use cases where synthetic data works best.

In early-stage robotics research, simulated data for robotics helps teams explore ideas without committing to hardware-heavy workflows. Engineers can test perception stacks, control logic, and system assumptions quickly.

Robotics systems often learn by trying actions, observing the results, and refining their behavior over time. Reinforcement learning provides a framework for this process. These methods require agents to explore and learn from thousands of trial-and-error interactions, a process that is difficult to run safely or efficiently on physical robots.
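To illustrate why reinforcement learning leans on simulation, the sketch below runs tabular Q-learning in a toy simulated corridor. The environment, reward values, and hyperparameters are assumptions chosen for illustration. Even in this six-cell world, the agent needs many episodes of trial and error before its greedy policy settles on always moving toward the goal, a cost that multiplies quickly on physical hardware.

```python
import random

def train_q_learning(n_states=6, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning in a toy simulated corridor (illustrative assumptions).

    The robot starts in state 0 and must reach the goal at state n_states - 1.
    Actions: 0 = move left, 1 = move right. Reaching the goal gives +1 and ends
    the episode; every other step costs -0.01.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(n_states)]
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Epsilon-greedy: usually exploit, occasionally explore (ties go right).
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else -0.01
            # The goal is terminal, so it contributes no future value.
            future = 0.0 if next_state == goal else max(q[next_state])
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            state = next_state
    return q

q = train_q_learning()
policy = ["left" if left > right else "right" for left, right in q[:-1]]
```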

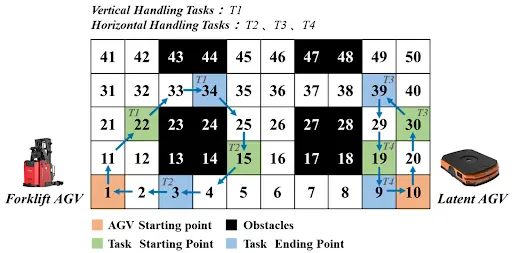

Navigation tasks in structured environments, such as warehouses or grid-based layouts, also benefit from synthetic data. For instance, simulated warehouse environments are used to study how different types of AGVs (Automated Guided Vehicles) work together during daily operations. Latent AGVs handle horizontal movement, while forklift AGVs manage both horizontal and vertical tasks.

An Example of Simulated AGV Task Planning In Grid-Based Environments (Source)

By letting teams plan safe paths and assign tasks efficiently before deployment, simulation helps improve warehouse efficiency while reducing energy use and the total number of robots required.
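The multi-AGV task assignment described above is beyond a short example, but the path-planning part can be illustrated. Here is a minimal A* search on a 4-connected occupancy grid, a standard technique for grid-based navigation. The grid layout and function name are hypothetical, and this is not the planner used in the cited work.

```python
import heapq

def astar(grid, start, goal):
    """A* shortest path on a 4-connected occupancy grid.

    grid: list of strings where '#' marks a blocked cell (e.g. a shelf).
    start, goal: (row, col) tuples. Returns the path as a list of cells,
    or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible and consistent for unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk parent links back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale entry superseded by a cheaper route
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != '#':
                ng = g + 1
                if ng < g_cost.get((nr, nc), float('inf')):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None

# Hypothetical warehouse layout: '.' is free floor, '#' is racking.
warehouse = [
    "....#",
    ".##.#",
    ".....",
]
path = astar(warehouse, (0, 0), (2, 4))
```

In a simulated warehouse, a planner like this can be run for every robot and task thousands of times to compare layouts or fleet sizes before any hardware moves.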

Synthetic data is a great option for testing rare or dangerous edge cases safely. Teams can simulate collisions, sensor failures, extreme weather, or hardware faults without damaging equipment or interrupting operations.

Simulation also lets engineers stress test systems far beyond normal conditions. Robots can be exposed to worst-case scenarios that may occur only once in the real world but still need to be handled reliably.
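As a minimal illustration of fault injection, the sketch below drives a simulated robot toward a wall using a range sensor that randomly drops readings, then measures how often two controller designs collide over many trials. The scenario, distances (in centimetres), and both controllers are hypothetical; the point is that a rare fault can be replayed thousands of times in simulation at no cost.

```python
import random

def collides(rng, dropout_prob, hold_on_dropout, start_cm=500, step_cm=20,
             stop_cm=50, max_steps=200):
    """Run one episode; return True if the robot reaches the wall.

    With probability dropout_prob the range sensor returns no reading.
    hold_on_dropout=True: fail-safe controller that stays put on missing data.
    hold_on_dropout=False: naive controller that treats missing data as "clear".
    """
    distance = start_cm
    for _ in range(max_steps):
        dropped = rng.random() < dropout_prob
        if dropped and hold_on_dropout:
            continue  # no data, no motion
        reading = float("inf") if dropped else distance
        if reading <= stop_cm:
            return False  # stopped safely in front of the wall
        distance -= step_cm
        if distance <= 0:
            return True  # drove into the wall
    return False

def collision_rate(dropout_prob, hold_on_dropout, trials=1000, seed=0):
    rng = random.Random(seed)
    hits = sum(collides(rng, dropout_prob, hold_on_dropout) for _ in range(trials))
    return hits / trials
```

With `collision_rate(0.3, hold_on_dropout=True)` the robot never reaches the wall, while the naive controller collides whenever readings drop out on both of its final two approach steps, a failure that might take a long time to observe on a real robot.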

Rapid iteration and debugging are easier in simulated environments. Developers can pause, rewind, and replay scenarios to understand exactly why a failure occurred, which speeds up troubleshooting.

This controlled visibility turns development into a learning loop. Each failure becomes a data point that can be analyzed, adjusted, and retested immediately, which shortens development cycles.
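Replaying a scenario in simulation usually depends on determinism: if every source of randomness is seeded, the same seed reproduces the same run exactly. The toy odometry simulation below is an illustrative assumption rather than any specific simulator's API; it shows how a recorded seed lets a developer replay a run or "rewind" to a particular step.

```python
import random

def simulate(seed, steps):
    """Toy 1D robot with noisy wheel odometry.

    All randomness comes from one seeded generator, so the same seed
    always reproduces the same trajectory.
    """
    rng = random.Random(seed)
    x, trajectory = 0.0, []
    for _ in range(steps):
        x += 0.1 + rng.gauss(0.0, 0.01)  # commanded 0.1 m step plus wheel-slip noise
        trajectory.append(x)
    return trajectory

def replay_until(seed, step):
    """'Rewind' to a given step by re-running the seeded simulation up to it."""
    return simulate(seed, step + 1)[step]
```

Logging the seed alongside each failing run is what makes the run reproducible; a simulator with unseeded randomness, or nondeterministic multithreaded physics, would not give this guarantee.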

Real-world data becomes critical when robotics systems must interact closely with people, objects, and unpredictable environments. Now, let’s discuss a few use cases where real-world data is essential.

Some aspects of robotic behavior are difficult to simulate accurately. This is particularly true when it comes to variations in shape, texture, and force, as well as sensor noise. These factors often influence navigation and interaction performance in ways that simplified simulations can’t fully capture.

One practical approach to this gap is NVIDIA Omniverse NuRec, which shows how real-world navigation data can be replayed inside simulation environments. Instead of relying only on synthetic scenarios, real sensor observations and motion trajectories collected during physical runs are transferred into the simulation. This allows teams to evaluate robot behavior under realistic constraints while retaining the control and repeatability of simulation.

A Scene Created Using Omniverse NuRec for Robot Training (Source)

By replaying real-world data in simulation, developers can consistently test challenging scenarios such as narrow passage navigation, where factors like human teleoperation input and real sensor noise significantly affect outcomes. This method combines the realism of physical data with the scalability of simulation, making it easier to analyze performance limits and failure cases.

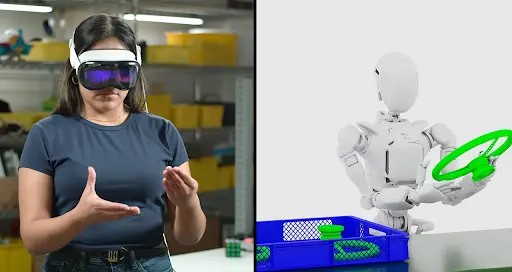

Similarly, tasks involving human-robot interaction benefit from real-world exposure. Teleoperation data and egocentric robot camera data capture how people move, respond, and collaborate in shared spaces, providing signals that are difficult to model accurately in purely synthetic environments.

As robots move from controlled testing environments into real-world deployment, reliability becomes more important than speed or scale. In these settings, systems must perform consistently under unpredictable conditions, where errors can lead to safety risks or operational failures.

Real-world data plays a critical role at this stage. It captures factors that are difficult to model in simulation, such as sensor drift, delayed responses, environmental clutter, and unexpected interactions. Exposure to these factors helps systems adapt to the conditions they will face after deployment.

Specifically, embodied AI systems rely on real-world data to learn perception from a first-person perspective. This improves alignment with how robots actually see, move, and respond in production environments.

Safety-critical applications in healthcare, logistics, and inspection depend on real-world testing to validate precision and reliability. Trust is built by observing how systems handle real failures and recovery scenarios, which is essential for long-term adoption.

Today’s robotics systems rarely rely on a single data source. Instead, they combine synthetic and real-world data into hybrid pipelines that balance scale with realism.

So, how do you combine real-world and simulated data for robotics? In short, robots learn best by starting in simulation, where they can explore broadly, and then fine-tuning their skills in the real world.

As discussed earlier, simulated data for robotics is typically used during pre-training and early exploration. At this stage, robots learn basic perception, navigation, and control skills in controlled environments. Simulation supports rapid experimentation and wide coverage of scenarios without safety risks or hardware constraints.

Once these foundations are established, real-world data is introduced to fine-tune models. Physical environments expose systems to sensor noise, unexpected interactions, and edge cases that simulation can’t fully capture. This final stage ensures that learned behaviors transfer reliably to deployment conditions.
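The pre-train-then-fine-tune pattern can be sketched with a one-parameter model. In this hypothetical setup, the simulator's physics is slightly off (a gain of 2.0 instead of the real robot's 2.4), synthetic samples are plentiful, and real samples are scarce. The model is pre-trained on synthetic data, then fine-tuned for a few passes over a small real dataset. The numbers are illustrative and do not describe any particular framework.

```python
import random

def fit(data, w=0.0, lr=0.01, epochs=1):
    """Per-sample gradient descent on squared error for the model y ≈ w * x."""
    for _ in range(epochs):
        for x, y in data:
            w -= lr * 2.0 * (w * x - y) * x
    return w

def make_data(rng, n, true_w, noise):
    xs = [rng.uniform(0.0, 2.0) for _ in range(n)]
    return [(x, true_w * x + rng.gauss(0.0, noise)) for x in xs]

def mse(w, data):
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

rng = random.Random(0)
# Assumed sim-to-real gap: simulated gain 2.0, real gain 2.4.
synthetic = make_data(rng, 1000, true_w=2.0, noise=0.05)  # cheap and plentiful
real = make_data(rng, 20, true_w=2.4, noise=0.1)          # expensive and scarce
real_test = make_data(rng, 200, true_w=2.4, noise=0.1)    # held-out real data

w_sim = fit(synthetic, epochs=20)             # pre-train in simulation
w_hybrid = fit(real, w=w_sim, epochs=3)       # fine-tune on the small real set
w_real_only = fit(real, w=0.0, epochs=3)      # same real budget, no pre-training
```

With the same small real-data budget, starting from the simulation-trained weight ends much closer to the real gain than starting from zero, while the simulation-only weight keeps the sim-to-real bias. In real pipelines the benefit of pre-training comes mostly from the rich features learned in simulation, which a single parameter cannot show.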

A well-known example of this sim-to-real robotics approach is the Isaac GR00T framework from NVIDIA. Robots first learn inside high-fidelity simulations that closely model real physics and environments.

Imitation Learning Using the NVIDIA GR00T Framework (Source)

Once they have a strong foundation, the models are fine-tuned using data collected from physical robots operating in the real world. This combination of synthetic data and real-world data helps reduce the gap between training and deployment.

Hybrid pipelines also make robotics systems more robust and deployment-ready. They enable robots to learn at scale in simulation while staying connected to the conditions they will face after deployment.

High-quality real-world data keeps robotics systems grounded once they move beyond controlled environments. As robots operate closer to people and critical infrastructure, accurate and diverse real-world robotics datasets become essential to avoid subtle failures and ensure reliable performance.

Human-in-the-loop workflows make this possible. Through teleoperation and human oversight, Objectways enables scalable and structured real-world data collection that captures edge cases, recovery behaviors, and real interactions.

This data collection process can feel complex and requires expertise, but you are in the right place. If you are working on a robotics project, Objectways can help you create, collect, and annotate the data you need.

Choosing between synthetic data and real-world data isn’t just a technical choice. It is a strategic decision that influences how robotics systems learn, adapt, and perform once they are deployed.

Synthetic data supports speed, safety, and scale, which makes it perfect for early development and experimentation. Real-world data reflects actual operating conditions and captures the variability that robots must handle in real-world production environments.

Most successful robotics systems combine the two. Simulation helps systems learn efficiently, while real-world data helps validate and refine behavior.

If you are exploring how to design effective robotics data pipelines or balance simulation with real-world data, contact Objectways to discuss how we can support your robotics and AI initiatives.