Robots are showing up everywhere nowadays. While some move packages in warehouses, others perform complex surgeries in hospitals. You might think they’re completely autonomous, but not all are. Many of these robots are guided from far away by skilled human operators, such as the medical robots used in remote surgery.

Even though autonomous robot systems look impressive, they come with challenges. Real environments can change constantly. For instance, floors can be uneven, tools can slip, and people move unpredictably. In such situations, robots can struggle to work when conditions don’t match their training.

So the robotics industry has turned to human operators. Instead of waiting for a perfect robot, companies let humans step in remotely. For example, when robots get stuck, people guide them. At the same time, robots learn from how humans handle difficult situations. Robots operated this way are known as teleoperated robots.

Robotics enthusiasts at CES 2026 in Las Vegas had the opportunity to see teleoperated robots in action. The event showcased a robot that was being controlled in real time by a person in Beijing, China.

News about teleoperated robots continues to highlight a clear trend in 2026: robots perform best when humans remain in the loop. From healthcare to autonomous vehicles, remote human guidance helps systems handle real-world uncertainty while collecting valuable training data for future autonomy.

In this article, we’ll explore how teleoperation is changing robotics today and why it matters. Let’s get started!

Humanoid teleoperated robots are one of the fastest-growing areas in robotics. Here, a human controls a humanoid robot remotely using cameras, screens, and control tools. During development, many teams guide these robots through real tasks before making them fully autonomous.

Every human-guided action becomes training data that shows robots how people move, decide, and solve problems. Over time, this helps them work more reliably in real-world environments.

Here are the key developments in the field of humanoid teleoperated robots right now in 2026:

A Teleoperated Robot from PAL Robotics (Source)

Next, let’s look at how teleoperation is being used in autonomous vehicles.

Self-driving cars can handle most roads on their own. But sometimes they face rare situations, known as edge cases, that they don’t fully understand. For example, it could be a strange construction layout, a blocked street, an accident, or a pedestrian doing something unexpected.

When this happens, the vehicle connects to a human operator sitting in a remote control center. The operator sees what the car sees via live cameras, maps, and sensor data, then either guides the vehicle or directly controls steering and braking.

Delivery and logistics companies are already using this method to fix issues. When a vehicle gets stuck behind a double-parked truck or at a confusing junction, a human steps in so the trip can continue. This is how self-driving cars make use of teleoperated systems.
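The handoff described above can be sketched as a simple rule. This is a minimal, hypothetical illustration, not how any particular company’s system works: the `planner_confidence` score, the `seconds_stopped` counter, and both thresholds are assumptions made up for this example.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    AUTONOMOUS = "autonomous"
    REMOTE_ASSIST = "remote_assist"


@dataclass
class VehicleStatus:
    planner_confidence: float  # hypothetical score between 0 and 1
    seconds_stopped: float     # time spent without making progress


def choose_mode(status, min_confidence=0.6, max_stopped_s=30.0):
    """Ask for remote help when the vehicle looks unsure or stuck."""
    unsure = status.planner_confidence < min_confidence
    stuck = status.seconds_stopped > max_stopped_s
    return Mode.REMOTE_ASSIST if (unsure or stuck) else Mode.AUTONOMOUS


# A confident, moving vehicle stays autonomous.
print(choose_mode(VehicleStatus(planner_confidence=0.9, seconds_stopped=2.0)))
# A vehicle waiting behind a double-parked truck asks for help.
print(choose_mode(VehicleStatus(planner_confidence=0.9, seconds_stopped=45.0)))
```

A real system would likely weigh many more signals, but the basic idea is the same: the vehicle decides when it needs a person, and the person decides what happens next.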

A well-known example is Waymo’s fleet response system. When a Waymo car can’t decide what to do, it asks a remote agent for help. This shows that self-driving cars are not yet fully autonomous.

Waymo Vehicles Use Remote Fleet Support (Source)

In telesurgery, doctors operate remotely using robotic systems. A surgeon sits at a console and controls teleoperated robotic tools while the patient may be in another country. This enables expert surgeons to reach more patients, and patients may no longer need to travel long distances for complex treatment.

In 2025, teleoperated robotic surgery reached a landmark moment when a heart procedure was performed across continents. On July 19, Dr. Sudhir Srivastava operated on a patient in India from France using the SSi Mantra 3 system, completing a highly delicate cardiac repair with no perceptible delay, despite a separation of more than 4,000 miles. Achieving this required ultra-fast, stable networks and tightly coordinated clinical and technical teams at both ends.

Intercontinental Robotic Cardiac Surgery Performed Using Teleoperated Robots (Source)
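To get a feel for why network speed matters over the 4,000-mile separation mentioned above, here is a rough back-of-the-envelope calculation. It assumes a straight-line path and a typical optical-fiber refractive index of about 1.47. Real cable routes are longer, and video encoding and network equipment add further delay, so these figures are only a lower bound on signal travel time, not a measurement of the actual procedure.

```python
MILES_TO_KM = 1.609344
LIGHT_SPEED_KM_PER_S = 299_792.458  # speed of light in vacuum
FIBER_REFRACTIVE_INDEX = 1.47       # assumed typical value for silica fiber


def one_way_delay_ms(distance_miles, refractive_index=1.0):
    """Minimum one-way propagation delay in milliseconds."""
    distance_km = distance_miles * MILES_TO_KM
    speed_km_per_s = LIGHT_SPEED_KM_PER_S / refractive_index
    return distance_km / speed_km_per_s * 1000.0


vacuum_ms = one_way_delay_ms(4000)
fiber_ms = one_way_delay_ms(4000, FIBER_REFRACTIVE_INDEX)

print(f"one way, vacuum:   {vacuum_ms:.1f} ms")      # roughly 21 ms
print(f"one way, fiber:    {fiber_ms:.1f} ms")       # roughly 32 ms
print(f"round trip, fiber: {2 * fiber_ms:.1f} ms")   # roughly 63 ms
```

Even in the best case, a command and the video showing its result need tens of milliseconds to make the round trip, which leaves little room for extra delay elsewhere in the network.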

Another recent highlight in teleoperated surgery is the SSi MantraM surgical bus. This mobile unit houses a fully equipped teleoperated robotic operating room, bringing advanced surgical procedures to smaller towns and remote regions.

Surgeons can connect from afar to guide or perform operations with minimal latency, while the mobile setup also serves as a real-world platform for training and refining remote surgery. Together, these capabilities point toward a future where specialist care travels to patients, rather than patients traveling to specialists.

Similar to healthcare, construction sites are also full of complex tasks and risks. Heavy machines, loose ground, dust, noise, and constant movement make the job hazardous. Using teleoperation in construction is a great way to reduce those risks.

With it, operators no longer need to constantly sit inside the machine in unsafe conditions. They can easily control excavators, loaders, and other equipment from safe control rooms using screens, cameras, and joysticks.

Teleoperated excavators are a great example of such equipment. Operators can be kilometers away while they dig, lift, and move material, without facing falling debris or unstable ground. This is especially useful in disaster zones, demolition sites, and mining sites. With teleoperation in mining, machines can operate underground while people control them safely from the surface.

Earlier, drones were operated by people with handheld controllers. These older systems had a limited range and required the operator to stay close by. Today, operators can control drones from distant control rooms, often flying them far beyond their direct line of sight.

Such a shift makes it possible for drones to travel long distances and reach places that are difficult, dangerous, or impractical for humans to access. They cover vast areas while streaming clear, real-time visual data back to operators. As a result, these teleoperated robotic drones are now routinely used to inspect bridges and pipelines, monitor forests, and survey disaster zones.

Many companies are experimenting with new ways to use these drones in the real world. At CES 2026, drone manufacturer GDU Technology showcased its UAV-P300, a drone built for public safety and industrial work.

GDU UAV-P300 Drone (Source)

Operators far away can still guide it in fog and low-light conditions. It also has AI-powered obstacle recognition and avoidance. Technology like this offers a glimpse of what’s coming next. Drone teleoperation is becoming a standard part of business, supporting safety, inspections, and emergency work every day.

Virtual reality is often associated with gaming, but its most crucial role may be in how humans control machines at a distance. By placing people inside digital environments that respond to their movements, VR restores natural senses like depth, orientation, and presence, capabilities that traditional screens struggle to provide. This becomes especially important when humans have to guide robots performing precise, real-world tasks.

When operators control a robot through flat screens, depth is hard to judge, movements feel abstract, and small errors can quickly escalate. Immersive teleoperation using VR addresses this gap by making operators feel as though they are standing where the robot is working.

With VR headsets, operators can see through the robot’s cameras in 3D. As they turn their heads, the viewpoint shifts naturally, restoring spatial awareness and depth perception. This makes tasks easier and safer, allowing operators to judge distances accurately, avoid collisions, and move with greater confidence.

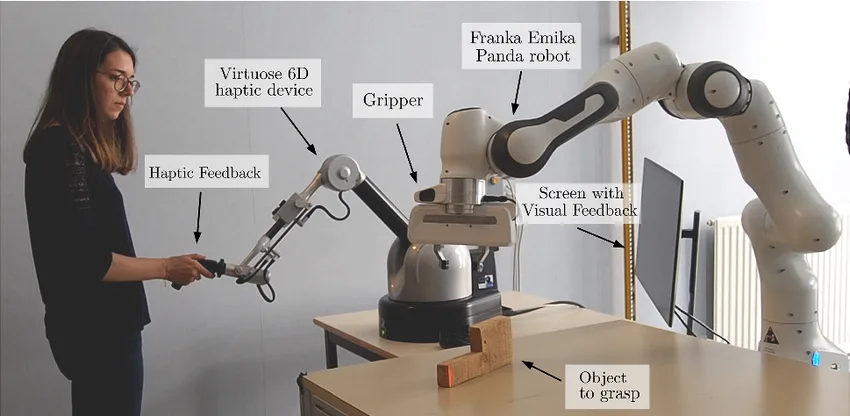

Touch also matters. Through haptic gloves or controllers, operators can feel resistance, pressure, and motion when the robot interacts with objects. This feedback is essential for gripping, lifting, and handling delicate materials where vision alone isn’t enough.

An Example of Using Haptic Feedback in Teleoperated Robots. (Source)
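One simple way to picture haptic feedback is as a mapping from the force the robot measures to how strongly the glove or controller pushes back. The sketch below is only an illustration under assumed values: the function name, the 20-newton ceiling, and the 0-to-1 feedback scale are hypothetical and do not describe any specific device.

```python
def force_to_haptic_level(force_n, max_force_n=20.0):
    """Map a measured contact force (newtons) to a feedback level from 0 to 1."""
    if max_force_n <= 0:
        raise ValueError("max_force_n must be positive")
    level = force_n / max_force_n
    # Clamp so sensor noise or hard impacts never exceed the device's range.
    return min(1.0, max(0.0, level))


print(force_to_haptic_level(5.0))    # light grip: gentle feedback
print(force_to_haptic_level(50.0))   # hard contact: feedback is capped
```

Clamping the output is the key design choice here: the operator should feel more resistance as the robot presses harder, but the feedback device should never be driven past what it can safely deliver.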

When VR and haptics work together, teleoperation becomes intuitive. Control no longer feels like issuing commands to a distant machine; it feels like extending your own hands into another reality.

Every time a person guides a robot, data is collected. You can think of it as a record of how a human solves a real problem. This makes teleoperation a powerful source of learning data. It enables robots to learn from real people in real places, instead of relying only on simulations.

This embodied AI data is most valuable in the failure cases that continue to challenge autonomous systems. It reveals how humans respond to unexpected situations, correct errors, and adapt their strategies in real time. For this reason, teleoperation data plays a critical role in advancing teleoperated robots.

Teleoperation data usually includes camera video, sensor readings like force and depth, and time-stamped human controls. Together, these pieces teach teleoperated robots how people really solve problems.
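As a rough picture of what one moment of such a recording might look like, here is a minimal sketch. The `TeleopSample` class and all of its field names are hypothetical, chosen for illustration only; real datasets will have their own formats.

```python
from dataclasses import dataclass


@dataclass
class TeleopSample:
    timestamp_s: float       # when the sample was recorded, in seconds
    camera_frame: str        # ID or file path of the matching video frame
    force_n: float           # force sensor reading, in newtons
    depth_m: float           # depth sensor reading, in meters
    operator_command: dict   # what the human did, e.g. joystick positions


sample = TeleopSample(
    timestamp_s=12.5,
    camera_frame="frame_000375.png",
    force_n=3.2,
    depth_m=0.48,
    operator_command={"joystick_x": 0.1, "joystick_y": -0.4, "grip": True},
)
print(sample)
```

Keeping the timestamp on every sample is what later makes it possible to line up video, sensor readings, and human actions.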

To be useful for AI robotic training, this data must then be carefully organized and labeled so models can understand what happened and why. This process requires human experts with knowledge of both robotics and multimodal data labeling, ensuring the data accurately reflects real-world decision-making.

In teleoperation, multimodal data comes from multiple sources, including cameras, sensors, and human control inputs. These data streams show what the robot saw, how it was controlled, and how it interacted with its surroundings at any given moment.

Multimodal dataset labeling is the process of organizing and aligning the visual, sensor, and control data so that it matches up in time and context. This makes it possible for AI systems to learn how specific actions relate to what the robot perceived and what happened as a result.
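The time-matching part of this process can be sketched in a few lines. The example below pairs each video frame with the closest control sample by timestamp, and leaves a frame unmatched when nothing is close enough. The function names and the 0.05-second tolerance are assumptions for illustration, and the sketch expects both timestamp lists to be sorted.

```python
from bisect import bisect_left


def nearest_index(timestamps, t):
    """Index of the entry in sorted, non-empty `timestamps` closest to `t`."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    # Pick whichever neighbor is closer in time.
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def align(frame_times, control_times, max_gap_s=0.05):
    """Pair each frame index with the nearest control index, or None."""
    pairs = []
    for frame_idx, t in enumerate(frame_times):
        if not control_times:
            pairs.append((frame_idx, None))
            continue
        control_idx = nearest_index(control_times, t)
        if abs(control_times[control_idx] - t) <= max_gap_s:
            pairs.append((frame_idx, control_idx))
        else:
            pairs.append((frame_idx, None))  # no control input close enough
    return pairs


frame_times = [0.00, 0.10, 0.20, 0.50]
control_times = [0.01, 0.08, 0.11, 0.19]
print(align(frame_times, control_times))
# [(0, 0), (1, 2), (2, 3), (3, None)]
```

Frames that come back with `None` are a useful signal on their own: they mark gaps where a human reviewer may need to check whether data was dropped or the operator simply did nothing.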

While software tools can help with parts of this process, they often struggle to capture human intent or determine whether a task was actually completed successfully. Because of this, human reviewers are usually needed to look at video, sensor data, and control actions together to understand what the robot was trying to do and how it responded.

Because this process depends on understanding how robots actually work, domain expertise is important. Without it, annotations may lack depth or accuracy.

Turning to experts for teleoperation data collection and annotation can help streamline the process. At Objectways, specialized teams work with teleoperation data to ensure video, sensor, and control signals are accurately aligned and labeled for use in robotic training systems.

Teleoperation data can be challenging to work with. It combines video, sensor signals, and human control actions, all occurring at the same time. Making sense of this data requires more than basic labeling.

Objectways offers teleoperation data collection and annotation services designed to handle this complexity. We work with multimodal data in a single, connected workflow, bringing together video, LiDAR, sensor inputs, and action streams. Our human experts review and verify each step to maintain high data quality.

Looking for reliable teleoperation data annotation at scale? Objectways can help – book a call today.

In 2026, teleoperated robots are becoming a practical part of everyday robotics. From hospitals and warehouses to roads, construction sites, and disaster zones, human support is helping robots work safely in situations that are still too complex for full autonomy.

At the same time, these human-guided interactions are creating valuable real-world data that helps robots improve over time. As technology continues to evolve, teleoperation will likely remain an important bridge that allows robots to be useful today while learning how to operate more independently in the future.