When you open Netflix after a busy day to binge your favourite TV show, have you ever noticed the “Skip intro” button that pops up when the theme song starts? You’ll find a similar “Skip credits” option when the end credits roll. They work so smoothly that many viewers barely notice them, and most never stop to think about the technology that makes them possible.

Behind these features are AI models that are trained to understand audio and video with precision. They know what an intro sounds like, when credits begin, when a logo appears in a corner of the frame, and how dialogue blends with background noise. These models rely on thousands of carefully labeled clips, scenes, and audio samples. Content tagging enables these models to interpret media the same way humans do.

The results? Netflix reports that “Skip Intro” is pressed 136 million times each day, saving viewers about 195 cumulative years of time. Also, with more than 500 hours of video uploaded to YouTube every minute, the need for accurate and consistent training data continues to grow.

Content Tagging Powers Features Like Netflix’s ‘Skip Intro’ (Source: Generated Using Gemini)

Simply put, AI solutions can’t deliver seamless user experiences without a strong foundation of reliable data. As American computer programmer and science fiction writer Daniel Keys Moran said, “You can have data without information, but you cannot have information without data.” The same is true for AI. Without high-quality data annotation and content tagging, even the most advanced models can’t understand what they are seeing or hearing.

In this article, we’ll explain how media annotation and content tagging work, why human judgment is still so important, and how Objectways helps support the AI solutions behind your everyday media experiences.

Audio and video data are tough for AI to understand. Real media isn’t tidy or predictable. Audio can have noise or overlapping voices, and videos can shift angles or hide logos in an instant. Humans can easily follow these changes, but AI systems need clear guidance to make sense of them.

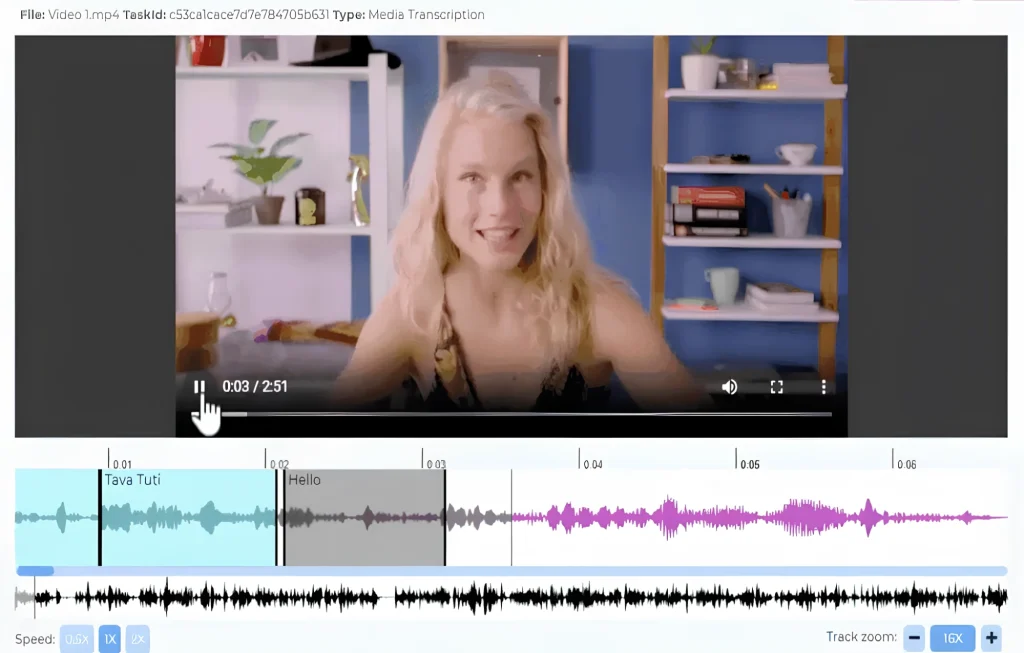

An Example of Video and Audio Labeling. (Source)

Content tagging helps by giving AI models the labeled data they need to understand what is happening in a piece of media. For instance, when training a model to recognize scene changes or emotional tone, annotators describe each moment by noting whether a scene is calm, emotional, fast-paced, or instructional. These tags provide AI with the context it needs to interpret both visuals and sounds accurately.

Here are two types of labeling that support AI models in media applications:

Professional media annotation and content tagging always begin with a secure environment. Since teams often work with sensitive or proprietary audio and video, protecting that data is the first priority.

A secure setup ensures that only authorized annotators can access files, that media never leaves the approved workspace, and that every action is logged for compliance and accountability. Annotation teams typically use annotation tools and data platforms, such as Encord or TensorAct Studio, to review large volumes of media safely.

These platforms are designed with strict access controls, encryption, and role-based permissions to prevent unauthorized viewing or downloading. They also support key industry compliance standards, such as SOC 2 Type II, HIPAA, and ISO 27001, giving companies confidence that their content is handled responsibly from start to finish.

With the workspace ready and the data protected, the next step is where human judgment comes in, turning raw audio and video into high-quality, structured training data that makes modern AI experiences possible.

Here are some examples of what content tagging work looks like:

While content tagging can sound simple, as if it’s just tagging a moment here or there, the reality is far more detailed and intricate. AI models need rich context to interpret media the way humans do.

Next, let’s pull back the curtain and see what actually goes into accurately annotating audio and video.

Audio carries far more information than what we consciously hear. When annotators review a clip, they listen for specific elements such as wake words and mark the exact timestamps where those words begin and end.

They also capture details like background noise, echo, distortion, or overlapping voices so the model can learn how audio behaves in real-world situations. In some projects, annotators include basic speaker attributes such as gender or age range.

All of these details are captured in a structured format, commonly a JSON or CSV file, so each element, from timestamps to noise levels, is clearly labeled. This makes it easy to use the data in a model training pipeline.

A Sample JSON File Containing an Audio Annotation’s Details.

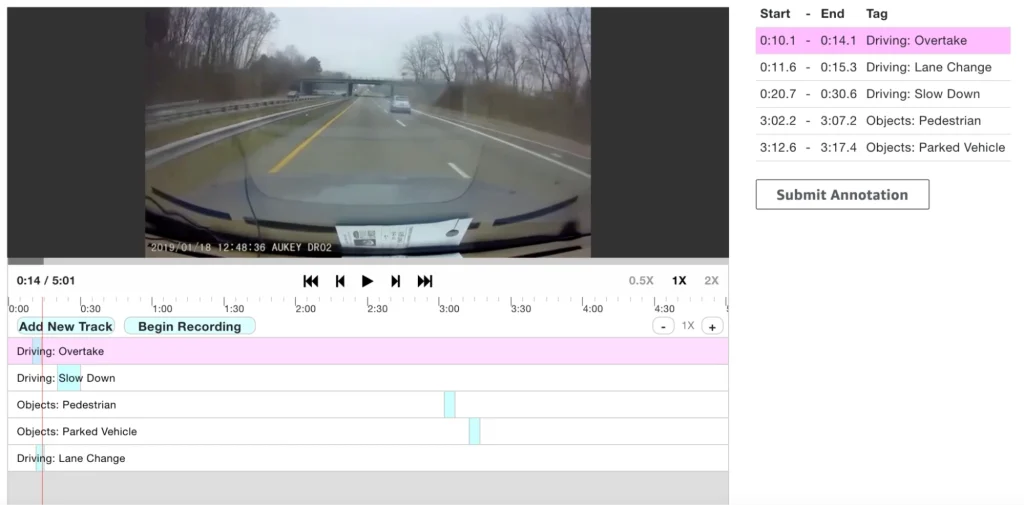
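As a rough illustration of how such a structured audio record could be produced, here is a minimal Python sketch. The field names (`clip_id`, `label`, `start_s`, `end_s`, `notes`) and the label values are assumptions made for this example, not the schema of any particular annotation tool.

```python
import json
from dataclasses import dataclass, asdict, field


@dataclass
class AudioSegment:
    label: str       # hypothetical label names, e.g. "wake_word", "background_noise"
    start_s: float   # segment start, in seconds from the beginning of the clip
    end_s: float     # segment end, in seconds
    notes: str = ""  # free-text detail such as clarity or noise type


@dataclass
class AudioAnnotation:
    clip_id: str
    segments: list = field(default_factory=list)

    def add(self, label, start_s, end_s, notes=""):
        # Reject segments that end before (or exactly when) they start.
        if end_s <= start_s:
            raise ValueError("segment must end after it starts")
        self.segments.append(AudioSegment(label, start_s, end_s, notes))

    def to_json(self):
        # asdict() converts nested dataclasses into plain dicts and lists.
        return json.dumps(asdict(self), indent=2)


annotation = AudioAnnotation(clip_id="clip_0001")
annotation.add("wake_word", 1.20, 1.85, notes="clear, intended activation")
annotation.add("background_noise", 0.0, 4.0, notes="kitchen sounds")
print(annotation.to_json())
```

Keeping timestamps as plain seconds and validating them when a segment is added makes it harder for an inverted or empty segment to slip into the training data.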

Tagging videos requires precision. Annotators must spot the exact frame where credits start, identify subtle scene transitions, tag logos that appear for only a second, and label the tone or suitability of each moment. Timestamps are key here because every label needs to be tied to the exact moment it appears on screen for the AI model to learn accurately.

A Look at Annotating Videos (Source)

All of these details are organized into clean formats such as JSON or CSV. Each file includes timestamps, labels, and notes that describe precisely what is happening in the video. This structured data becomes the training material that helps AI models understand audio and video more like a human, rather than simply processing pixels and sound waves.
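To make the link between frames and timestamps concrete, the sketch below converts frame indices into timestamps and writes the labels out as CSV. It assumes a constant frame rate, and the column names and label values are hypothetical choices for this example.

```python
import csv
import io


def frame_to_timestamp(frame_index, fps):
    """Convert a zero-based frame index to HH:MM:SS.mmm (constant frame rate assumed)."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    total_ms = round(frame_index * 1000 / fps)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    seconds, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}.{ms:03d}"


def labels_to_csv(labels, fps):
    """labels: iterable of (start_frame, end_frame, label, note) tuples."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["start", "end", "label", "note"])
    for start_frame, end_frame, label, note in labels:
        writer.writerow([
            frame_to_timestamp(start_frame, fps),
            frame_to_timestamp(end_frame, fps),
            label,
            note,
        ])
    return buffer.getvalue()


video_labels = [
    (0, 2160, "intro", "theme music over title cards"),
    (2160, 2400, "scene_transition", "cut from studio to street"),
]
print(labels_to_csv(video_labels, fps=24))
```

Annotators usually think in frames while training pipelines often expect time, so storing one and deriving the other avoids the two drifting apart.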

Now that we have a better understanding of how content tagging works, let’s look at a few everyday AI features, what gets annotated to make them possible, and why it all matters for a better user experience.

Voice assistants like Siri, Alexa, and Google Assistant learn to recognize wake words through thousands of short audio clips that humans label by hand. Annotators mark when a wake word is spoken, how clear it sounds, whether the person intended to activate the device, and the presence of background noise. They also note accents and changes in speakers so the model understands how different people talk in everyday life.

Wake-word detection powers voice assistant activation. (Source: Generated Using Gemini)

Someone might say “Hey Google” while cooking, walking outside, or talking to a friend. These kinds of real moments teach the assistant how wake words sound in noisy and unpredictable settings.

Good audio labeling is what makes voice assistants feel dependable. They wake up when you need them and stay quiet when you don’t.

Most OTT viewers tap the skip button without thinking about how it appears at the perfect moment. Streaming platforms such as Netflix, Amazon Prime Video, Hulu, and Disney+ use various detection methods to identify intros and credits so the skip button can appear at the right time.

Human annotators support this process by watching episodes and labeling the exact frames where the intro starts, where the theme music ends, and when the credits begin. They also note whether the credits roll over the final scene or appear on a blank screen. These detailed labels help models learn what these moments look like across different shows.
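As a simplified sketch of how labeled boundaries could drive the button itself, the Python below checks whether the playhead falls inside a labeled window. The `SkipWindow` type, the function name, and the timings are all assumptions for illustration; this is not how any specific streaming platform implements the feature.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SkipWindow:
    kind: str       # "intro" or "credits" (hypothetical label values)
    start_s: float  # labeled start of the segment, in seconds
    end_s: float    # labeled end of the segment, in seconds


def active_skip_button(windows, playhead_s):
    """Return the button text if the playhead is inside a labeled window, else None."""
    for window in windows:
        if window.start_s <= playhead_s < window.end_s:
            return f"Skip {window.kind}"
    return None


episode_windows = [
    SkipWindow("intro", 35.0, 95.0),
    SkipWindow("credits", 2510.0, 2580.0),
]

print(active_skip_button(episode_windows, 40.0))    # inside the intro window
print(active_skip_button(episode_windows, 100.0))   # regular content, no button
print(active_skip_button(episode_windows, 2520.0))  # inside the credits window
```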

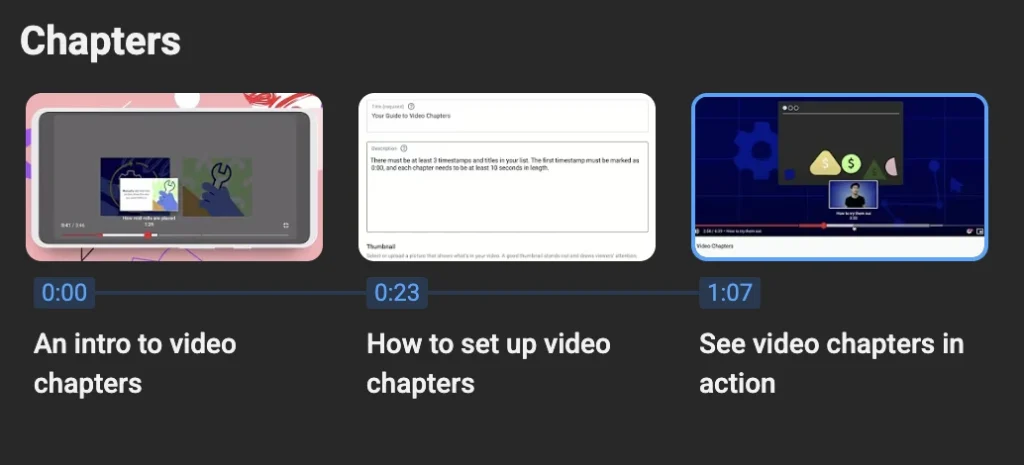

Lengthy videos are easier to navigate when they are broken into clear sections or chapters, but AI-driven platforms can’t identify those moments on their own. Human reviewers annotate videos by marking the exact timestamps where a scene shifts to a new setting, mood, or topic. They look for changes in location, tone, pacing, or dialogue.

Automatic Video Chapter Generation on YouTube. (Source)

These labeled examples show AI models what a real scene boundary looks like across many types of content. Platforms like YouTube use this kind of annotation to power auto-generated chapters.
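The boundary timestamps described above map naturally onto a list of chapters. The following is a minimal sketch under the assumption that each labeled boundary marks the start of a chapter and that the first chapter starts at zero; the function name and output fields are hypothetical.

```python
def build_chapters(boundaries_s, titles, duration_s):
    """Turn labeled boundary timestamps (chapter starts, in seconds) into chapters."""
    starts = list(boundaries_s)
    if len(starts) != len(titles):
        raise ValueError("each boundary needs exactly one title")
    if not starts or starts[0] != 0:
        raise ValueError("the first chapter must start at 0 seconds")
    if any(later <= earlier for earlier, later in zip(starts, starts[1:])):
        raise ValueError("boundaries must be strictly increasing")
    if starts[-1] >= duration_s:
        raise ValueError("the last boundary must fall before the end of the video")
    # Each chapter ends where the next one starts; the last ends with the video.
    ends = starts[1:] + [duration_s]
    return [
        {"title": title, "start_s": start, "end_s": end}
        for title, start, end in zip(titles, starts, ends)
    ]


chapters = build_chapters([0, 75, 310], ["Intro", "Setup", "Demo"], duration_s=600)
for chapter in chapters:
    print(chapter)
```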

Finding logos in video might sound straightforward, but in real-world footage, they can appear in many different ways. A logo may be bright or faint, fully visible or partly hidden, or appear for only a split second during a fast camera move.

AI can’t reliably pick up these variations without extensive training. To train AI models effectively, human reviewers watch footage and label each logo appearance, noting the timestamp, how long it stays on screen, and whether anything obstructs it.

Detecting Logos Using AI (Source)

They also capture details that the model might miss, such as changes in lighting, reflections, motion blur, or when a logo appears at an angle. These annotations help AI models learn what logos truly look like in real environments, not just in clean, controlled images.

This type of video labeling is widely used in sports broadcasting and advertising. Broadcasters rely on annotated video and computer-vision systems to track how often a sponsor’s logo appears during a game or highlight reel.

Advertisers use the resulting data to verify that their brand received the exposure they paid for. With accurate logo detection, companies gain trustworthy insights, and platforms can provide more reliable reporting.
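To show how per-appearance labels could roll up into an exposure report, here is a small Python sketch. The tuple layout, the brand names, and the idea of reporting unobstructed seconds separately are assumptions for this example rather than an industry-standard metric.

```python
from collections import defaultdict


def exposure_by_brand(appearances):
    """Summarize labeled logo appearances per brand.

    appearances: iterable of (brand, start_s, end_s, occluded) tuples.
    Overlapping appearances of the same brand are NOT merged in this sketch.
    """
    totals = defaultdict(
        lambda: {"appearances": 0, "seconds": 0.0, "clear_seconds": 0.0}
    )
    for brand, start_s, end_s, occluded in appearances:
        duration = end_s - start_s
        if duration <= 0:
            raise ValueError("appearance must end after it starts")
        entry = totals[brand]
        entry["appearances"] += 1
        entry["seconds"] += duration
        if not occluded:
            # Count time separately when nothing blocks the logo.
            entry["clear_seconds"] += duration
    return dict(totals)


labeled_appearances = [
    ("Acme", 10.0, 12.5, False),
    ("Acme", 40.0, 41.0, True),
    ("Globex", 5.0, 8.0, False),
]
report = exposure_by_brand(labeled_appearances)
for brand, stats in report.items():
    print(brand, stats)
```

Recording the obstruction flag at labeling time is what makes it possible to report total and clearly visible screen time side by side.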

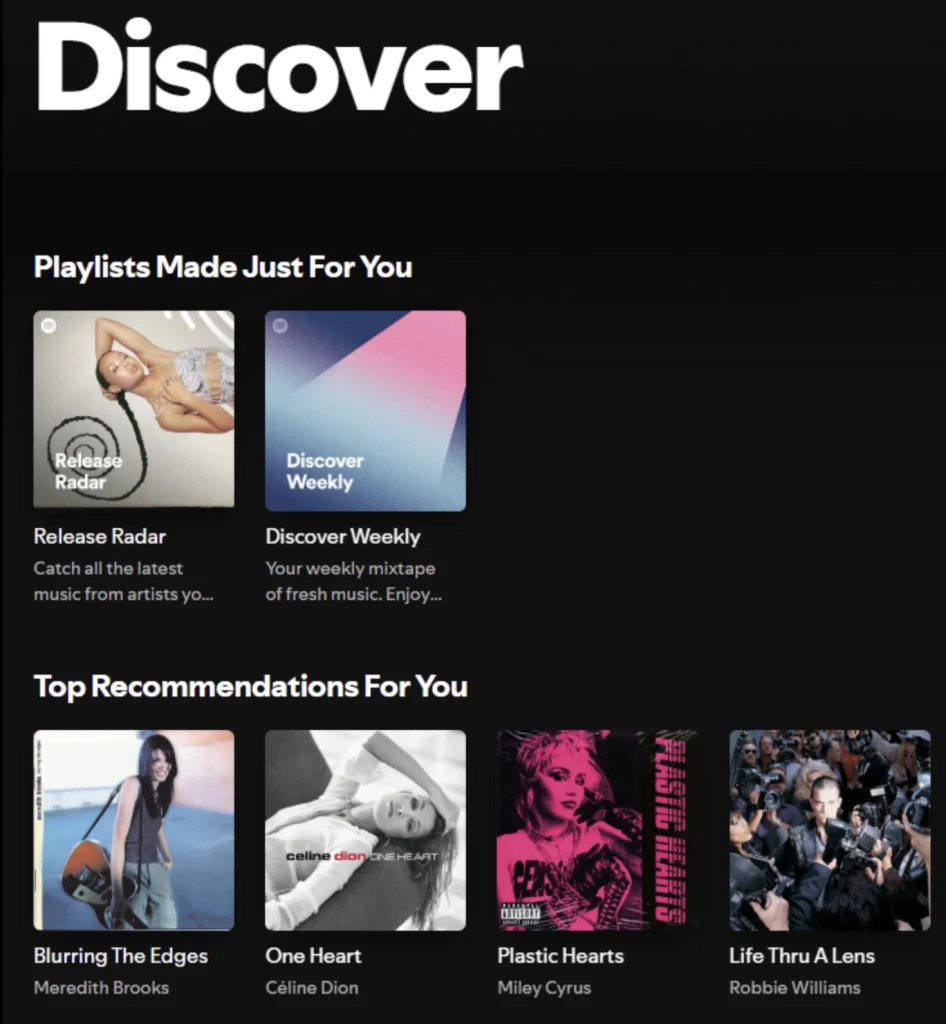

Most people open platforms like Spotify or YouTube and instantly see recommendations that feel surprisingly accurate. These suggestions come from models trained to understand what a piece of media contains so they can match it with the right audience.

In many workflows, media is tagged with information about its themes, mood, style, or topic. For example, a video might be labeled as instructional or comedic, and a song might be tagged by tempo, mood, or vocal style.

These labels help AI systems build a clearer understanding of what the content is and how people might experience it. Platforms such as Spotify and YouTube use a mix of human curation, metadata, and machine-learning models to power their recommendations.

Personalized Recommendations Powered by Media Content Classification in Spotify. (Source)

Video platforms also apply similar content tagging and classification methods to help surface content that fits a viewer’s interests. When the underlying metadata is accurate, users spend less time searching and more time enjoying content that matches what they want to watch or listen to.

While scrolling through social platforms, you might notice that ads rarely appear next to violent or disturbing videos. A key part of making this possible is content tagging.

Sensitive Content Labeling for Safer Viewing Experiences. (Source)

Human reviewers or content moderation teams label videos that contain sensitive elements such as violence, adult themes, dangerous behavior, or emotionally distressing scenes. These tags help classification systems understand what the content includes so it can be grouped into the appropriate safety category.

AI has come a long way, but it still struggles with the kinds of nuance people understand without even thinking. Context changes everything, whether it is a wake word hidden under background noise, a final scene that continues while the credits start to roll, or a logo that appears for only a moment during a game.

Humans can easily tell when someone is not actually saying “Hey Siri,” notice when a story is still unfolding, or spot a sponsor logo even when it is partly blocked. AI often misses these moments unless people label them clearly and consistently.

High-quality labeled data makes a difference. In fact, studies show that when 40% of the labels in a training dataset are incorrect, model accuracy can drop to 46.5%. Careful data annotation and content tagging fill the gaps, creating media experiences that feel more accurate, reliable, and natural.

Are you working on building media-focused AI? At Objectways, we specialize in high-quality audio, video, and content tagging, and we can help you create the reliable training data your models need.

Here’s a quick look at how we can support your project from start to finish.

Many media features we rely on every day seem simple, but they depend on AI systems that precisely understand audio and video. Skip buttons, voice activations, recommendations, logo tracking, and brand-safe advertising all start with high-quality data annotation. As global media grows, AI systems can’t interpret it without structured, context-rich data.

For all your data annotation and collection needs, we’ve got your back. Our trained teams at Objectways are here to help you build high-quality, reliable datasets with ease. Build your next AI project with confidence and book a call with us.