As of 2025, nearly 88% of organizations are using artificial intelligence (AI) in at least one area of their business operations. AI isn’t the future anymore. It’s already here, and it’s shaping how companies operate, make decisions, and innovate every day.

As AI expands across industries, one pressing question keeps coming up: how do we make these AI models perform accurately and consistently?

For large language models (LLMs) in particular, two approaches lead the way: prompt engineering and fine-tuning. Prompt engineering focuses on crafting the right inputs to guide how a model responds.

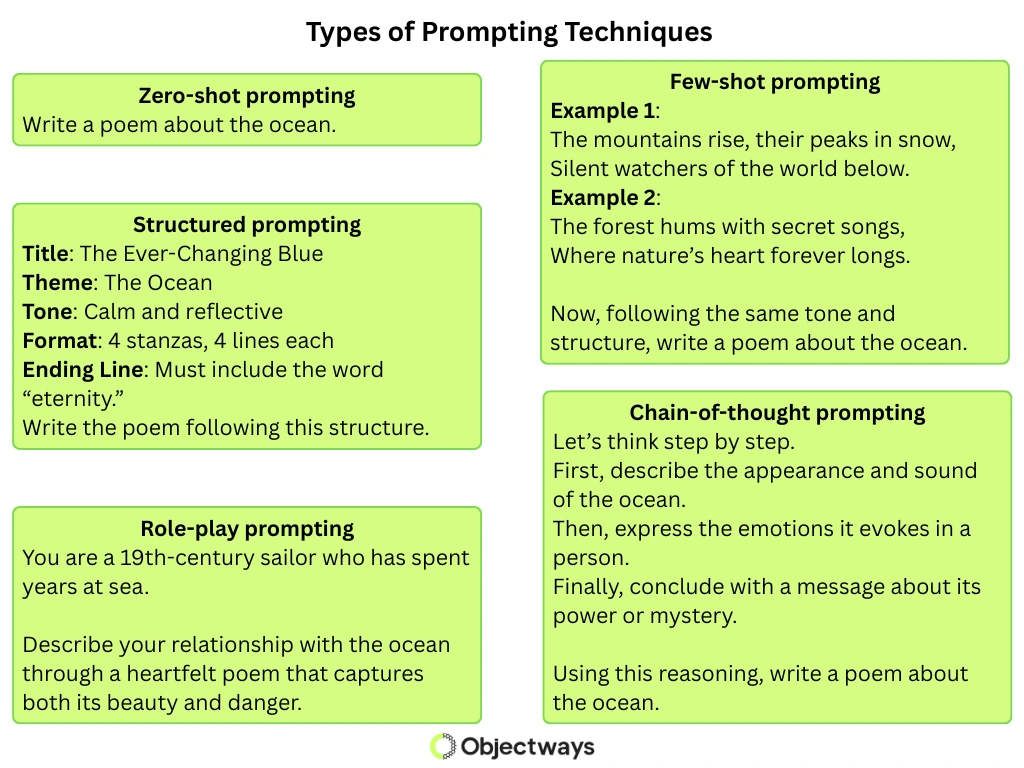

In particular, advanced prompt engineering techniques like few-shot and chain-of-thought prompting are used to shape AI outputs. The other, more hands-on method is model fine-tuning, where models are retrained on application-specific data to deliver more consistent, reliable results.

In this article, we’ll explore prompt engineering vs. fine-tuning, break down their key differences, and discuss how the two can work together to create more adaptive AI models. Let’s get started!

Prompt engineering is the process of creating clear, targeted instructions, called prompts, to guide the behavior of large pre-trained AI models. Since these models have already learned patterns, language, and reasoning from massive datasets, the quality of their output often depends more on how we frame prompts than on retraining the model itself.

Simply put, better prompts lead to better answers. Think of it like ordering coffee from a robot barista. The skills and ingredients are already there, but the final drink depends on how clearly you describe what you want.

A vague request like “I’ll have a coffee” might get you something basic, while a detailed request such as “A medium cappuccino with oat milk and a shot of caramel” leads to a result that’s precise and aligned with your preferences.

AI-powered robots are now stepping in as baristas of the future.
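The coffee analogy can be sketched in a few lines of code. This is a minimal illustration rather than a prescribed method: `build_prompt` and its keyword arguments are hypothetical names introduced here, and no model is actually called. The point is only that each added detail removes something the model would otherwise have to guess.

```python
def build_prompt(task: str, **details: str) -> str:
    """Combine a task with optional, explicitly named details into one prompt."""
    lines = [task.strip()]
    for name, value in details.items():
        # Each named detail narrows what the model has to guess.
        lines.append(f"- {name.replace('_', ' ')}: {value}")
    return "\n".join(lines)


# Vague request: the model must fill in every preference on its own.
vague = build_prompt("Make me a coffee.")

# Detailed request: the same task, with the preferences spelled out.
detailed = build_prompt(
    "Make me a coffee.",
    drink="cappuccino",
    size="medium",
    milk="oat milk",
    extras="one shot of caramel",
)

print(vague)
print("---")
print(detailed)
```

Both prompts ask for the same thing, but only the second one pins down the drink, size, milk, and extras.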

Depending on the task, different advanced prompt engineering techniques can help you get better results from an LLM. Common ones include few-shot prompting, chain-of-thought prompting, and role prompting.

Different Advanced Prompt Engineering Techniques.
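Two of the techniques this article names, few-shot and chain-of-thought prompting, are easy to show as plain string templates. The sketch below is an assumption about how such prompts are commonly laid out, not a fixed format: the function names, the `Input:`/`Output:` labels, and the example reviews are all made up for illustration.

```python
def few_shot_prompt(instruction, examples, query):
    """Show the model a few worked input/output pairs before the real query."""
    parts = [instruction, ""]
    for text, label in examples:
        parts.append(f"Input: {text}")
        parts.append(f"Output: {label}")
        parts.append("")
    # The final pair is left open so the model completes the output.
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


def chain_of_thought_prompt(question):
    """Ask the model to reason step by step before stating its answer."""
    return (
        f"Question: {question}\n"
        "Work through the problem step by step, then give the final answer "
        "on a new line starting with 'Answer:'."
    )


# Hypothetical labeled examples for a sentiment task.
examples = [
    ("The delivery arrived two days early.", "positive"),
    ("The app crashes every time I log in.", "negative"),
]
prompt = few_shot_prompt(
    "Classify the sentiment of each review.",
    examples,
    "Setup was quick and painless.",
)
print(prompt)
print()
print(chain_of_thought_prompt("A train travels 120 km in 1.5 hours. What is its average speed?"))
```

Few-shot prompting teaches the pattern through examples, while chain-of-thought prompting asks for intermediate reasoning. The two can be combined in a single prompt.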

Here are some key advantages of prompt engineering:

Now that we have a better understanding of prompt engineering, let’s take a look at fine-tuning.

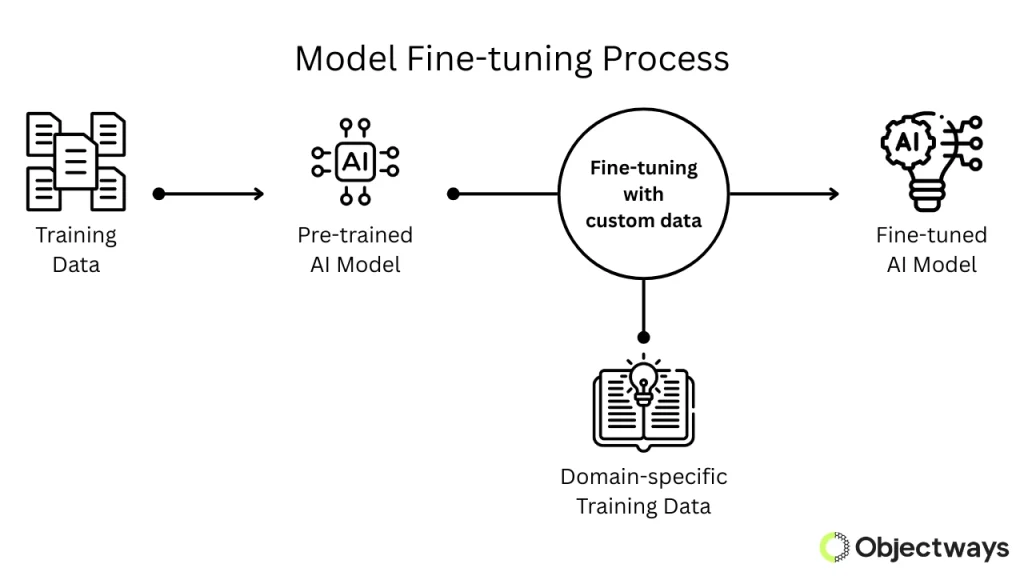

Fine-tuning is the process of retraining a pretrained AI model on data from a specific domain so it can perform better on specialized tasks. Instead of training a model from the beginning, we start with one that already understands general language and then adjust it using examples from a particular field such as medicine, finance, or law. This helps the model learn the terms, patterns, and style needed to produce more accurate results in that area.

Because the model already knows how to understand and generate language, fine-tuning simply adapts its existing skills to a new context. This approach is a form of transfer learning, where broad knowledge is refined for a focused purpose.

An Overview of Model Fine-Tuning
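The transfer-learning idea can be shown with a deliberately tiny stand-in. The sketch below is a toy, not how LLMs are actually fine-tuned: it uses a one-variable linear model, and the datasets, learning rates, and step counts are invented for illustration. It "pretrains" on general data, then continues training from those weights on a handful of domain examples.

```python
def mse(w, b, data):
    """Mean squared error of the line y = w * x + b on the given data."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train(w, b, data, lr, steps):
    """Plain gradient descent on mean squared error, starting from (w, b)."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# "Pretraining": a larger general dataset that follows y = 2x.
general = [(k / 10, 2 * k / 10) for k in range(-20, 21)]
w0, b0 = train(0.0, 0.0, general, lr=0.1, steps=500)

# "Fine-tuning": four domain examples that follow y = 2x + 1.
# Training starts from the pretrained weights, not from zero.
domain = [(0.0, 1.0), (0.5, 2.0), (1.0, 3.0), (1.5, 4.0)]
w1, b1 = train(w0, b0, domain, lr=0.05, steps=200)

print("domain loss before fine-tuning:", mse(w0, b0, domain))
print("domain loss after fine-tuning: ", mse(w1, b1, domain))
```

The pretrained slope carries over, so fine-tuning mostly has to learn the domain-specific offset from a small dataset.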

You can think of a pretrained model as a student who has just completed their general education. Fine-tuning is like sending that student to a master’s program to specialize in one subject. This extra training turns broad knowledge into true expertise in a specific domain.

Fine-tuning generally falls into two main categories: full model fine-tuning and parameter-efficient fine-tuning. Each offers a different balance of control, performance, and computing requirements.

Here’s a closer look at both:
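As a rough illustration of the computing trade-off between the two categories, the sketch below counts trainable weights for a single layer. It assumes a low-rank adapter in the style of LoRA, which is one well-known parameter-efficient method but is not named in this article. The layer size and rank are made-up values.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable weights when the whole d_out x d_in matrix is updated."""
    return d_out * d_in


def low_rank_adapter_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable weights for a rank-r update B @ A.

    B has shape (d_out, rank) and A has shape (rank, d_in); the original
    weight matrix stays frozen.
    """
    return rank * (d_out + d_in)


# Hypothetical layer size and adapter rank, chosen only for illustration.
d_out, d_in, rank = 4096, 4096, 8

full = full_finetune_params(d_out, d_in)
adapter = low_rank_adapter_params(d_out, d_in, rank)

print(f"full fine-tuning: {full:,} trainable weights")
print(f"low-rank adapter: {adapter:,} trainable weights")
print(f"adapter / full:   {adapter / full:.4%}")
```

For these assumed numbers, the adapter trains well under 1% of the weights that full fine-tuning would update for the same layer, which is where the savings in memory and compute come from.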

Here are some of the advantages of fine-tuning:

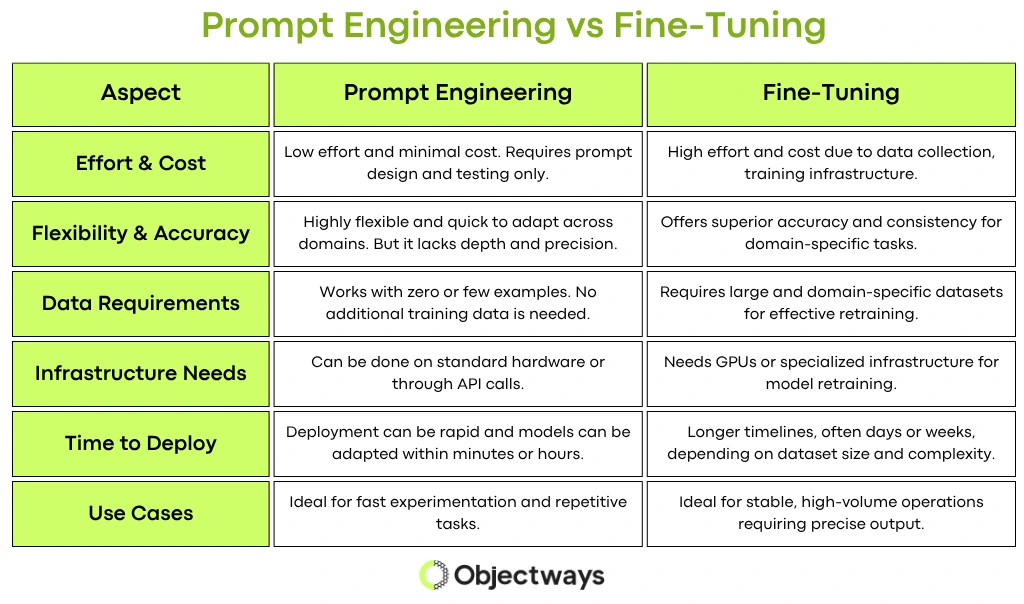

So far, we’ve explored what prompt engineering and fine-tuning are, their types, and their advantages. While both approaches improve model performance, they work in very different ways. The table below provides a quick comparison of prompt engineering and fine-tuning.

A Look at Prompt Engineering Vs. Fine-Tuning

Next, let’s discuss when you should rely on prompt engineering.

Prompt engineering works best when flexibility, speed, and creativity are more important than strict accuracy. It is especially helpful for teams looking to experiment with large language models without needing large datasets or complex technical setups.

Here are some situations where prompt engineering is a good option:

Interestingly, recent research backs this up. A 2025 study on real-world LLM use found that when people refine their prompts, test different approaches, and add more clarity, the quality of the responses improves noticeably. The study also highlighted that techniques like role prompting, chain-of-thought prompting, and adding context help models produce more accurate and useful results.
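The three techniques mentioned in the study (role prompting, added context, and chain-of-thought prompting) can be layered onto one question. The sketch below is a hypothetical helper, not a standard API: `PromptSpec`, its fields, and the support-ticket example are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptSpec:
    """Hypothetical container for the pieces of a prompt."""
    question: str
    role: str = ""
    context: List[str] = field(default_factory=list)
    step_by_step: bool = False

    def render(self) -> str:
        sections = []
        if self.role:
            # Role prompting: tell the model whose perspective to take.
            sections.append(f"You are {self.role}.")
        if self.context:
            # Added context: facts the model should rely on.
            sections.append("Context:\n" + "\n".join(f"- {c}" for c in self.context))
        sections.append(f"Question: {self.question}")
        if self.step_by_step:
            # Chain-of-thought: ask for reasoning before the answer.
            sections.append("Explain your reasoning step by step before answering.")
        return "\n\n".join(sections)


# First attempt: the bare question.
base = PromptSpec("Why did support tickets rise in March?")

# Refined attempt: same question with role, context, and reasoning request.
refined = PromptSpec(
    question=base.question,
    role="a customer support analyst",
    context=[
        "A new billing page launched on March 3.",
        "Ticket volume rose 40% that week.",
    ],
    step_by_step=True,
)

print(base.render())
print("---")
print(refined.render())
```

Keeping the pieces separate makes it easy to test one change at a time, which matches the refine-and-compare workflow described above.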

Meanwhile, fine-tuning is the better choice when accuracy and domain knowledge matter more than speed. Retraining the model on domain-specific data teaches it the context and nuances required for high-stakes tasks.

Here are some scenarios where fine-tuning is a great option:

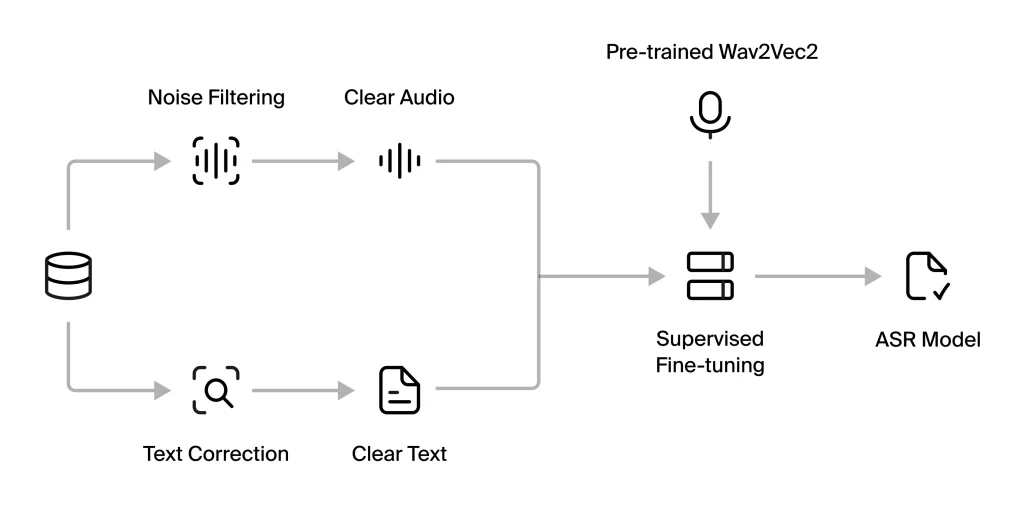

An example of an application where fine-tuning makes a meaningful impact comes from a study on Automatic Speech Recognition (ASR) systems. Researchers fine-tuned an existing ASR model using real radio communication data from ships.

Fine-Tuning an Automatic Speech Recognizer Model

The audio in these recordings was often noisy and filled with maritime-specific terminology, which made it difficult for general ASR systems to interpret. After fine-tuning, the model achieved much higher recognition accuracy than standard ASR models.
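To make "recognition accuracy" concrete, here is a sketch of word error rate (WER), a common way to score ASR output. Which metric the study used is not stated here, so treating WER as the measure is an assumption, and the transcripts below are invented examples rather than data from the study.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # Levenshtein distance over words, keeping only the previous row.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical transcripts of the same radio message.
reference = "vessel alpha requesting permission to enter port"
general_output = "vessel alpha request in permission to enter court"
fine_tuned_output = "vessel alpha requesting permission to enter port"

print("general model WER:   ", word_error_rate(reference, general_output))
print("fine-tuned model WER:", word_error_rate(reference, fine_tuned_output))
```

A lower WER means fewer substituted, dropped, or inserted words, so comparing the score before and after fine-tuning on the same test recordings gives a direct measure of the improvement.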

While both fine-tuning and prompt engineering can dramatically improve how AI models perform, each approach comes with its own limitations. Understanding these challenges can help teams choose the right strategy for their goals.

Here are some common barriers related to prompt engineering to keep in mind:

Also, here are some common concerns related to fine-tuning to consider:

Prompt engineering gives you speed and flexibility, while fine-tuning offers more control and accuracy, though it usually takes more time, data, and resources to get right. There are several factors to consider, and at times the decision between the two can feel a bit tricky.

If you need help choosing the right approach or combining both effectively, our team at Objectways is here to support you. We specialize in delivering high-quality data annotation and collection, building custom generative AI and LLM solutions, and supporting you through training and deployment. Reach out to us, and let’s build an AI solution that perfectly fits your goals.

So far, we’ve explored prompt engineering vs. fine-tuning as two distinct ways to get the most out of AI models. But here’s a thought: what happens when we combine them?

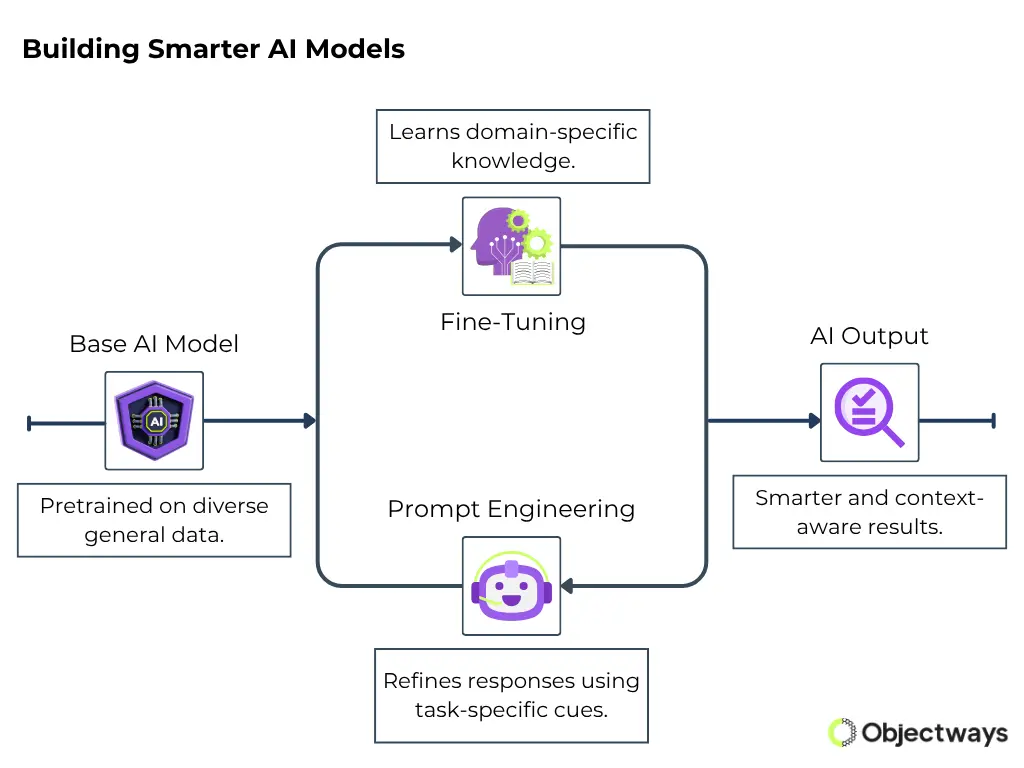

By blending fine-tuning with advanced prompting techniques, we can tap into a new level of intelligence in AI systems. In this setup, fine-tuning gives the model deep domain expertise, while prompt engineering refines how that expertise is expressed for a given task.

Building Smarter AI Models Using Fine-Tuning and Prompt Engineering

In fact, cutting-edge AI research and industry practice show that combining these two methods often yields better results than relying on either one alone. Many modern LLM development pipelines use this hybrid approach: the model is first fine-tuned on domain-specific data to build a deeper understanding, and then further improved through iterative prompt refinement.

This back-and-forth process strengthens both the model’s reasoning and its ability to generate accurate, context-aware outputs. Together, fine-tuning provides depth while prompting provides direction, resulting in a more adaptable and high-performing AI system.
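The prompt-refinement half of this hybrid loop can be sketched as scoring candidate prompts against a small evaluation set. Everything below is an assumption made for illustration: `stub_model` is a stand-in for a fine-tuned model (a real one would be an API or library call), and the templates and messages are invented.

```python
def pick_best_prompt(model, templates, eval_set):
    """Score each prompt template on the evaluation set and return the best one."""
    def accuracy(template):
        hits = sum(
            model(template.format(text=text)) == expected
            for text, expected in eval_set
        )
        return hits / len(eval_set)

    scores = {template: accuracy(template) for template in templates}
    best = max(scores, key=scores.get)
    return best, scores


def stub_model(prompt: str) -> str:
    """Stand-in for a fine-tuned model, NOT a real model call.

    It "knows" the domain (fire and leak messages are urgent) but only
    returns a bare label when the prompt asks for a one-word answer.
    """
    text = prompt.split("Text:", 1)[-1].lower()
    label = "urgent" if ("fire" in text or "leak" in text) else "routine"
    return label if "one word" in prompt else f"The message is {label}."


# Candidate prompts, from a first draft to a refined version.
templates = [
    "Classify this message. Text: {text}",
    "Classify this message as urgent or routine. Answer in one word. Text: {text}",
]

# Hypothetical labeled messages used to compare the prompts.
eval_set = [
    ("Engine room fire alarm triggered", "urgent"),
    ("Weekly supply list attached", "routine"),
    ("Fuel leak near dock 3", "urgent"),
]

best, scores = pick_best_prompt(stub_model, templates, eval_set)
for template, score in scores.items():
    print(f"{score:.2f}  {template}")
print("best:", best)
```

In this toy setup the model's domain knowledge is the same for both prompts. Only the refined prompt gets it to answer in a form the evaluation can use, which is the division of labor the hybrid approach relies on.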

Understanding the differences between prompt engineering and fine-tuning shows how they complement each other. While prompt engineering guides model behavior with clear, contextual instructions, fine-tuning lets the model absorb specialized knowledge and patterns. Integrating the two makes AI both adaptable and reliable, with one guiding creativity and the other ensuring depth and accuracy.

At Objectways, we help organizations strike that balance. By combining the art of effective prompting with the science of fine-tuning, we can build AI systems that are faster, smarter, and deeply aligned with your business goals. Book a call with us to build your next AI model.