The internet is a hub of self-expression, with tweets, comments, articles, and livestreams flooding platforms around the clock. However, along with this creativity comes a growing challenge: harmful, misleading, and offensive content spreads just as quickly as, and sometimes faster than, the positive stories meant to connect us.

For years, social media platforms have tried to manage content moderation with human reviewers and keyword filters. While these methods work to some extent, they struggle to keep up with the scale and complexity of content on the internet, especially in the age of AI-generated content. More importantly, they often miss the subtle differences that separate harmless banter from genuinely harmful speech.

Natural language processing (NLP) is making a significant difference in this area. NLP is a branch of artificial intelligence (AI) that enables computers to understand, interpret, and generate human language. Unlike basic filters, NLP can go deeper: it can analyze context, identify entities, and flag harmful content more accurately.

Take a viral meme or video, for example. Sometimes it’s celebrated for being witty, but other times it crosses into offensive territory, sparking heated debates and dividing communities. Moments like these are where content moderators, empowered by NLP, can step in and restore balance before chaos spreads.

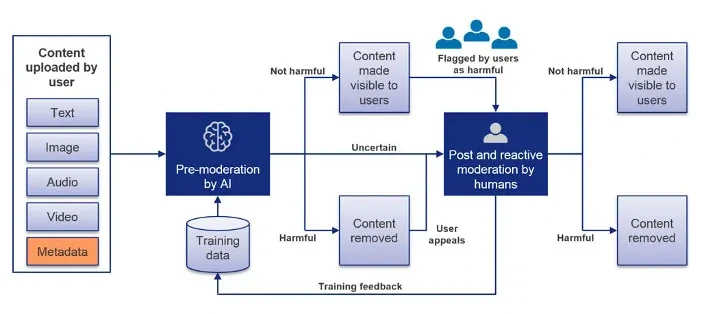

A Look at AI-Driven Content Moderation (Source)

In this article, we’ll explore how named entity recognition in NLP and contextual analysis power modern content moderation systems. We’ll also look at popular named entity recognition tools that help identify people, places, and organizations in text, and compare natural language processing with generative AI to understand their different roles in content moderation. Let’s get started!

Named entity recognition (NER) is a key technique behind most NLP-powered moderation systems. It scans text and identifies specific entities such as people, organizations, locations, dates, or numerical values.

The real value of named entity recognition in NLP lies in turning unstructured text into structured, machine-readable data. Instead of treating a post or comment as a block of plain words, named entity recognition tools tag the important parts so that moderation systems can understand the who, what, and where of the content.

Think of NER like reading an article with a highlighter in hand. As it scans through the text, it marks names, dates, and organizations so they stand out from the rest of the words. What once seemed like an overwhelming block of text becomes structured and easy to interpret – just what moderation systems need to quickly spot harmful or sensitive entities.
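To make the highlighter analogy concrete, here is a minimal sketch of how free text becomes structured (label, text) records. It uses a small hypothetical lookup table (`GAZETTEER`) plus a regular expression for ISO dates; the names, the `tag_entities` function, and the `Entity` record are illustrative assumptions. Real NER tools such as spaCy use trained statistical models that generalize beyond a fixed list.

```python
import re
from typing import NamedTuple

class Entity(NamedTuple):
    text: str
    label: str
    start: int
    end: int

# Hypothetical lookup table; a trained NER model would recognize unseen names too.
GAZETTEER = {
    "Acme Corp": "ORG",
    "Jane Doe": "PERSON",
    "Berlin": "LOC",
}

# ISO-style dates such as 2024-05-01.
DATE_PATTERN = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")

def tag_entities(text: str) -> list[Entity]:
    """Return every gazetteer or date match in the text, ordered by position."""
    entities = []
    for phrase, label in GAZETTEER.items():
        for match in re.finditer(r"\b" + re.escape(phrase) + r"\b", text):
            entities.append(Entity(match.group(), label, match.start(), match.end()))
    for match in DATE_PATTERN.finditer(text):
        entities.append(Entity(match.group(), "DATE", match.start(), match.end()))
    return sorted(entities, key=lambda e: e.start)

post = "Jane Doe will speak for Acme Corp in Berlin on 2024-05-01."
for ent in tag_entities(post):
    print(ent.label, ent.text)
# PERSON Jane Doe
# ORG Acme Corp
# LOC Berlin
# DATE 2024-05-01
```

The output is exactly the “highlighted” view a moderation pipeline can act on: each entity carries a type and a character span instead of being buried in a sentence.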

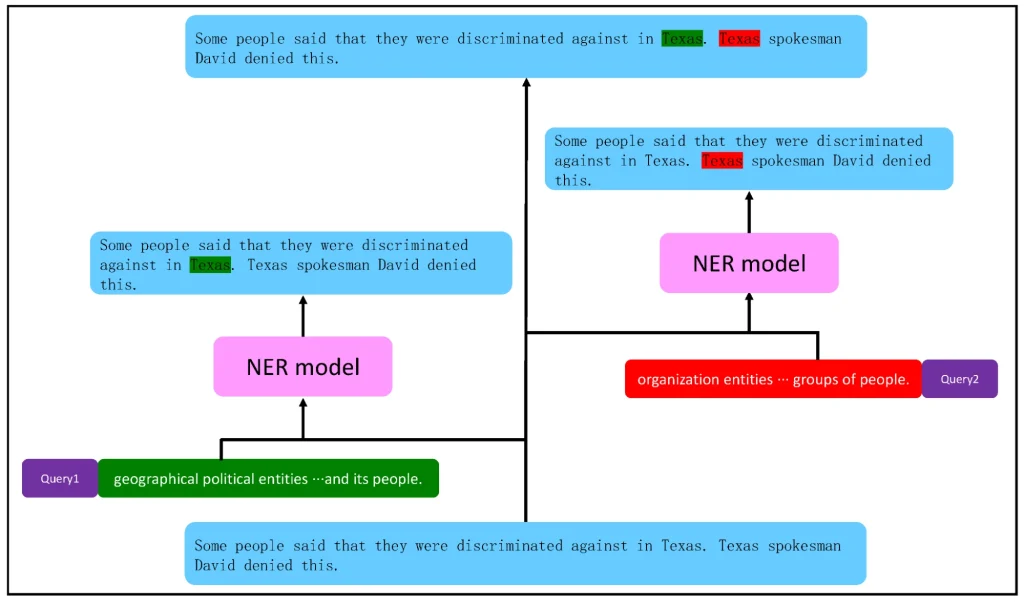

An Example of Using Named Entity Recognition in NLP. (Source)

Named entity recognition in NLP can be implemented in several ways. Here are some of the most common approaches:

Search engines use named entity recognition tools to provide more relevant results, and customer service bots use them to pick out key details in queries. In content moderation specifically, NER is especially useful because it helps detect sensitive entities, identify misuse of names, and flag potentially harmful behavior.

NLP in content moderation goes beyond just spotting keywords. It’s about grasping how language works in real-world conversations. To see how, let’s walk through three key NLP techniques that give moderation systems a more comprehensive grasp of language.

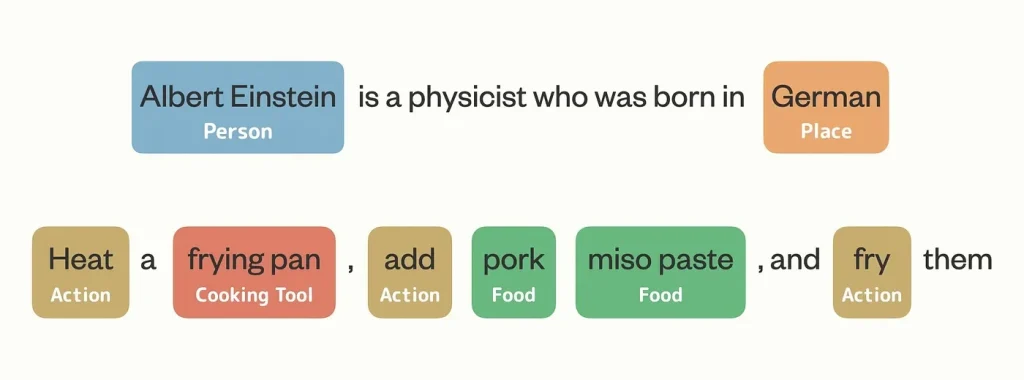

Entity detection, often built on named entity recognition in NLP, identifies mentions of people, places, organizations, or events. This becomes crucial in content moderation when sensitive figures or topics are involved. For instance, if a threatening remark is made toward a political leader, entity detection helps ensure it is flagged quickly for review.

Entity Detection in NLP Showing Words Classified as Person, Place, Food, Tool, and Action (Source)
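The political-leader example can be sketched as a rule that combines entity detection with a threat lexicon: a post is routed for review only when a protected entity and a threatening term appear together. Both lists (`PROTECTED_ENTITIES`, `THREAT_TERMS`) and the `needs_review` function are hypothetical placeholders; production systems typically rely on trained NER and classification models rather than fixed word lists.

```python
import re

# Hypothetical watch list of sensitive public figures, grouped by entity label.
PROTECTED_ENTITIES = {"PERSON": ["Senator Smith", "Mayor Lee"]}
# Hypothetical threat lexicon; real systems learn these signals from labeled data.
THREAT_TERMS = {"hurt", "attack", "kill"}

def find_protected(text: str) -> list[str]:
    """Return protected entity names mentioned in the text (case-insensitive)."""
    lowered = text.lower()
    return [name for names in PROTECTED_ENTITIES.values()
            for name in names if name.lower() in lowered]

def needs_review(text: str) -> bool:
    """Flag posts where a protected entity and a threat term co-occur."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return bool(find_protected(text)) and bool(words & THREAT_TERMS)

print(needs_review("Someone should attack Senator Smith tonight"))  # True
print(needs_review("Senator Smith gave a great speech"))           # False
print(needs_review("The storm will attack the coast"))             # False
```

Requiring both signals keeps neutral news about the senator, and threatening-sounding language about the weather, out of the review queue.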

Words don’t always mean exactly what they seem. Sarcasm, irony, and cultural slang can completely change their meaning. Contextual AI analysis uses tools like sentiment analysis, part-of-speech tagging, and dependency parsing to capture tone and intent. That’s how systems can tell the difference between a joke like “The game was criminally good” and an actual harmful statement.
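The “criminally good” example can be illustrated with a toy comparison between a naive keyword filter and a rule that looks one word ahead for context. The word lists and the adjacency rule are hypothetical simplifications chosen for illustration; real contextual analysis relies on trained sentiment models, part-of-speech tags, and dependency parses rather than a next-word check.

```python
import re

FLAGGED = {"criminally", "killer", "sick"}                    # hypothetical "risky" words
POSITIVE_ADJECTIVES = {"good", "funny", "fast", "beautiful"}  # hypothetical sentiment lexicon

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z]+", text.lower())

def keyword_filter(text: str) -> bool:
    """Naive approach: flag if any risky word appears anywhere."""
    return any(tok in FLAGGED for tok in tokenize(text))

def context_filter(text: str) -> bool:
    """Flag a risky word unless the next token is a positive adjective it intensifies."""
    tokens = tokenize(text)
    for i, tok in enumerate(tokens):
        if tok in FLAGGED:
            next_tok = tokens[i + 1] if i + 1 < len(tokens) else ""
            if next_tok not in POSITIVE_ADJECTIVES:
                return True
    return False

joke = "The game was criminally good"
threat = "He acted criminally and must be stopped"
print(keyword_filter(joke), context_filter(joke))      # True False
print(keyword_filter(threat), context_filter(threat))  # True True
```

The keyword filter flags both sentences; the context rule lets the compliment through while still flagging the second one.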

The third technique, harmful content detection, focuses on abusive, toxic, or policy-violating text. Instead of just scanning for bad words, machine learning models trained on vast datasets detect hate speech, bullying, explicit material, or misinformation, even when it is disguised with slang or creative spellings. That makes NLP-powered detection much harder to evade than traditional keyword filters.
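To illustrate learning from labeled examples instead of matching a word list, here is a deliberately tiny multinomial Naive Bayes classifier over character trigrams, which cope with creative spellings better than whole words do. The eight training sentences and the `toxic`/`ok` labels are made-up illustration data; real toxicity models are trained on large labeled corpora and usually use much stronger architectures, such as transformers.

```python
import math
from collections import Counter

def char_ngrams(text: str, n: int = 3) -> list[str]:
    """Overlapping character n-grams; tolerant of swapped or inserted characters."""
    padded = f" {text.lower()} "
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]

class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.labels = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.counts = {label: Counter() for label in self.labels}
        for text, label in zip(texts, labels):
            self.counts[label].update(char_ngrams(text))
        self.vocab = set().union(*self.counts.values())
        return self

    def predict(self, text):
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label in self.labels:
            total = sum(self.counts[label].values())
            score = math.log(self.doc_counts[label] / total_docs)  # class prior
            for gram in char_ngrams(text):
                score += math.log((self.counts[label][gram] + 1) / (total + len(self.vocab)))
            scores[label] = score
        return max(scores, key=scores.get)

# Hypothetical toy training data.
texts = ["you are an idiot", "shut up you loser", "what an idiot", "you stupid loser",
         "have a great day", "thanks for sharing", "great video thanks", "what a lovely day"]
labels = ["toxic"] * 4 + ["ok"] * 4

model = NaiveBayes().fit(texts, labels)
print(model.predict("you 1diot"))          # toxic
print(model.predict("thanks, great day"))  # ok
```

Even though “1diot” never appears in the training data, its trigrams “dio”, “iot”, and “ot ” overlap with “idiot”, so the misspelling still lands in the toxic class.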

Beyond the concepts behind NLP and named entity recognition, there is also a practical side. These methods pay off only when they are applied through solid tools and frameworks that can handle real moderation challenges, and choosing the right tools can make all the difference.

For instance, some of the best-known NLP tools for content moderation include spaCy, NLTK (Natural Language Toolkit), and Stanford NLP, each with its own strengths. spaCy is a production-ready library trusted in commercial use for its speed and accuracy; it supports tokenization for over 75 languages and offers trained pipelines for 25 of them.

Meanwhile, NLTK is widely used by students and researchers for its comprehensive set of functions, from tokenization to sentiment analysis. Stanford NLP, on the other hand, is well known for deep linguistic analysis and high precision, making it ideal for both academic research and enterprise-grade applications.

Together, these frameworks provide the backbone for many AI-driven content moderation systems. They help platforms shift from simple keyword filters to context-aware decisions that accurately reflect how language is actually used.

Now that we have a better idea of how named entity recognition and other NLP techniques support moderation, let’s look at some real-world examples of how major platforms use these technologies to make online spaces safer and more inclusive.

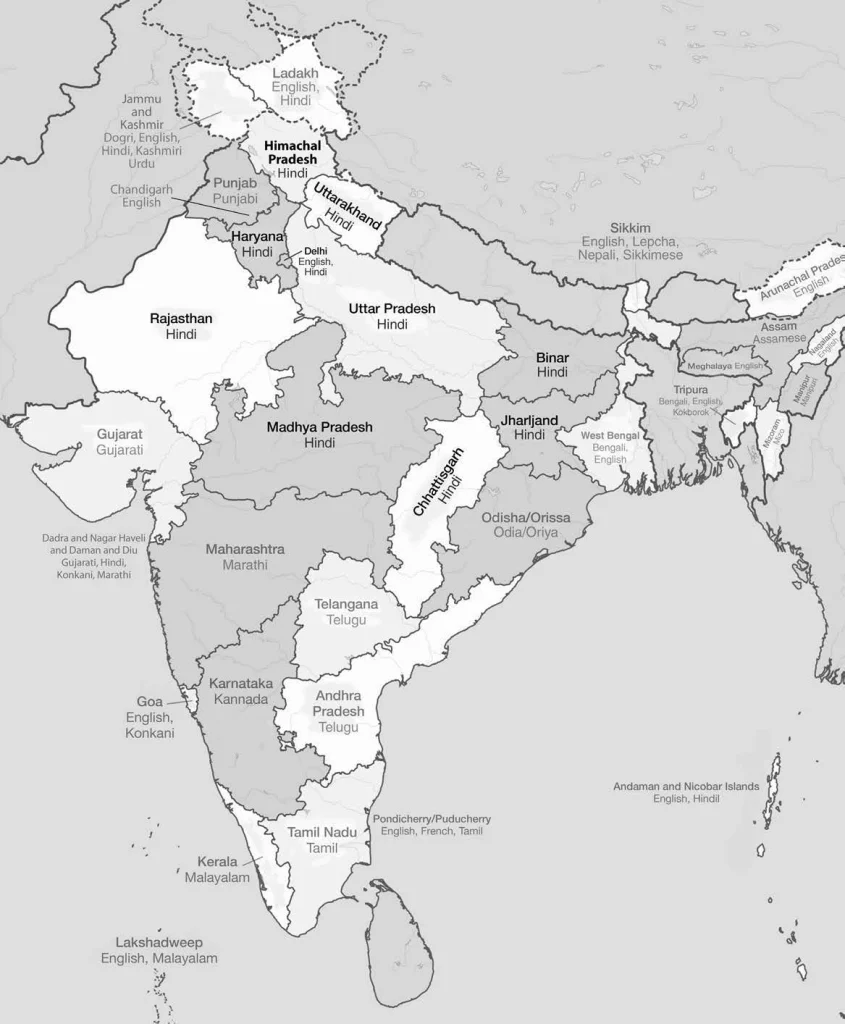

Content moderation in a linguistically diverse country like India comes with unique obstacles. With dozens of regional languages, multiple dialects, and varied cultural expressions, building content moderation systems that work reliably is a complex task.

Languages in India (Source)

To make moderation effective in such settings, NLP models need training data that mirrors real conversations. This means going beyond polished text to include slang, informal spellings, hesitations, tone shifts, and culturally specific expressions.

Gathering data from different regions, age groups, and communities ensures the models are exposed to the full range of how people actually communicate. Careful validation and review are also essential to keep the data consistent and accurate.
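One common way to check annotation consistency is to have two reviewers label the same sample and measure their agreement with Cohen’s kappa, which corrects raw agreement for the agreement expected by chance: κ = (p_o − p_e) / (1 − p_e). The labels below are made-up illustration data, not results from any real dataset, and the single-label edge case returns 1.0 by convention.

```python
from collections import Counter

def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability both raters pick the same label independently.
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout (kappa undefined)
    return (observed - expected) / (1 - expected)

# Hypothetical labels from two reviewers on the same ten comments.
annotator_1 = ["toxic", "ok", "ok", "toxic", "ok", "ok", "toxic", "ok", "ok", "ok"]
annotator_2 = ["toxic", "ok", "toxic", "toxic", "ok", "ok", "ok", "ok", "ok", "ok"]
print(f"kappa = {cohens_kappa(annotator_1, annotator_2):.2f}")  # kappa = 0.52
```

The reviewers agree on 8 of 10 items (p_o = 0.8), but because both mostly choose “ok”, chance alone would produce 58% agreement, so the corrected score is a more modest 0.52. Low scores on a particular language or region are a signal to revisit the labeling guidelines for that slice of the data.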

With inclusive and high-quality datasets, NLP systems can better identify entities, understand context, and detect harmful or inappropriate content across languages – not just in English. This approach highlights the importance of diversity in building robust moderation systems that can adapt to multilingual and multicultural communities.

A platform like YouTube is a universe of its own. With hundreds of hours of video uploaded every minute, moderation can’t rely on a single method. The challenge is not only the massive volume of content but also the way harmful material, such as misinformation or abuse, constantly evolves.

To keep up, YouTube uses natural language processing and other AI techniques. These systems scan video titles, descriptions, transcripts, and comments to identify abusive or misleading patterns. Many harmful videos are flagged and removed quickly, often before they reach a wide audience.

Human moderators are still vital, focusing on difficult cases that require cultural awareness or careful judgment. This mix of automation and human review helps YouTube scale moderation more effectively, reduce exposure to harmful material, and keep the platform safer and more reliable for its global community.
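This mix of automation and human review is often implemented as confidence-based routing: high-confidence violations are handled automatically, uncertain cases go to a human queue, and everything else is published. The sketch below applies that pattern across several text fields of a video. The `score_text` lexicon scorer is a hypothetical stand-in for a trained classifier, and the thresholds are illustrative assumptions, not YouTube’s actual system or values.

```python
from dataclasses import dataclass

@dataclass
class Video:
    title: str
    description: str
    transcript: str
    comments: list[str]

# Hypothetical risk weights standing in for a trained model's output (0.0 to 1.0).
RISKY_TERMS = {"scam": 0.6, "fake cure": 0.9, "idiot": 0.5}

def score_text(text: str) -> float:
    """Return the highest risk weight of any term found in the text."""
    lowered = text.lower()
    return max((w for term, w in RISKY_TERMS.items() if term in lowered), default=0.0)

def route(video: Video, remove_at: float = 0.85, review_at: float = 0.5) -> str:
    """Score every text field, then route the video on its highest score."""
    fields = [video.title, video.description, video.transcript, *video.comments]
    top = max(score_text(f) for f in fields)
    if top >= remove_at:
        return "remove"        # confident violation: act automatically
    if top >= review_at:
        return "human_review"  # uncertain: send to a moderator queue
    return "allow"

print(route(Video("Miracle fake cure revealed", "", "", [])))              # remove
print(route(Video("Cooking tips", "Is this a scam?", "", [])))             # human_review
print(route(Video("Cooking tips", "Easy pasta", "boil water", ["nice!"])))  # allow
```

Tuning the two thresholds is how a platform trades automation against reviewer workload: widening the middle band sends more borderline cases to people.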

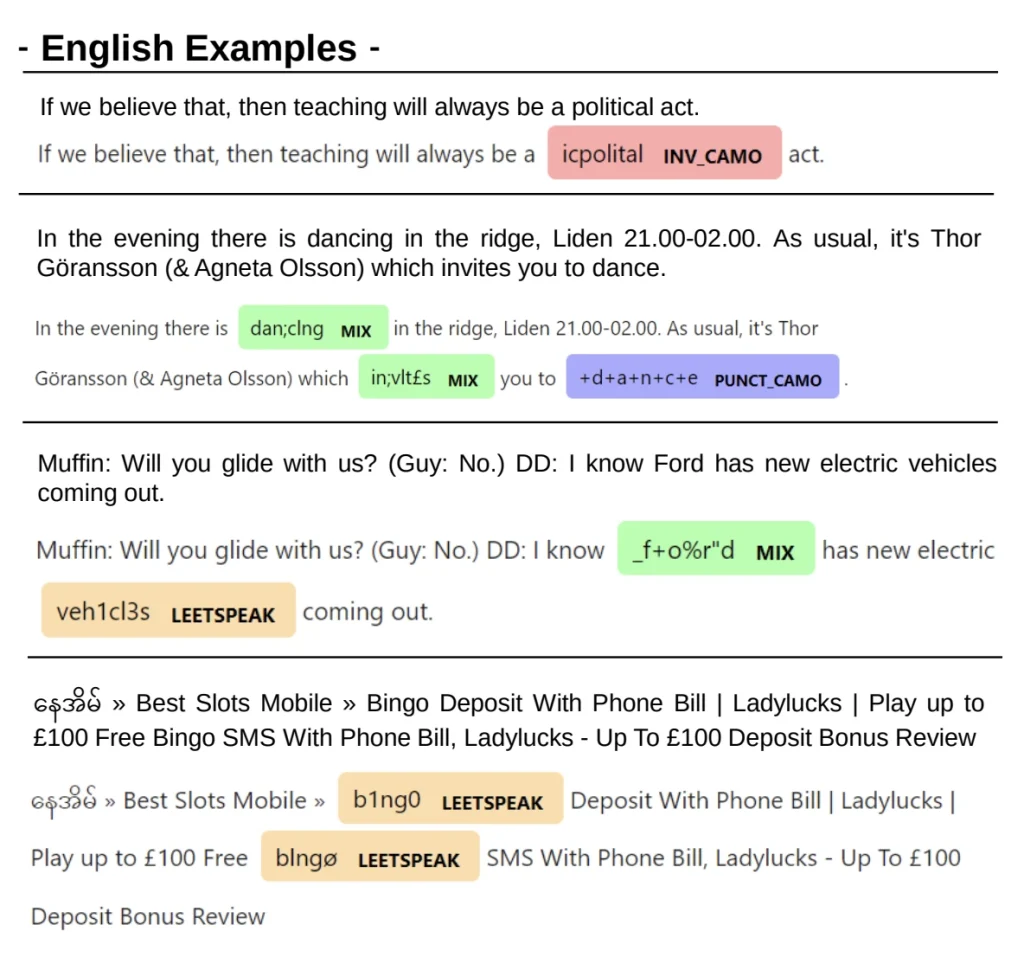

Online platforms often face users who try to sneak harmful content past moderation systems by disguising words. A common trick is word camouflage, where letters are swapped with numbers, punctuation is added, or words are jumbled.

For example, the word “violence” might appear as “v1o.l3nce.” People can still read it easily, but basic filters often miss it. This allows toxic speech and misinformation to spread without being caught.
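A simple first line of defense is to normalize text before matching: map common character substitutions back to letters, then strip punctuation hidden inside words. The substitution table and blocklist below are small hypothetical examples; hand-written tables like this are easy to outgrow, which is why learned models are used for the harder cases.

```python
import re

# Hypothetical substitution table covering a few common leetspeak swaps.
LEET_MAP = str.maketrans({"1": "i", "3": "e", "0": "o", "4": "a",
                          "5": "s", "7": "t", "@": "a", "$": "s"})
BLOCKLIST = {"violence"}  # hypothetical blocked term

def naive_match(text: str) -> bool:
    """Exact keyword matching, as a basic filter would do."""
    return any(term in text.lower() for term in BLOCKLIST)

def normalize(text: str) -> str:
    """Undo character swaps, then drop everything except letters and whitespace."""
    text = text.lower().translate(LEET_MAP)
    return re.sub(r"[^a-z\s]", "", text)

def normalized_match(text: str) -> bool:
    return any(term in normalize(text) for term in BLOCKLIST)

sample = "so much v1o.l3nce here"
print(normalize(sample))                             # so much violence here
print(naive_match(sample), normalized_match(sample))  # False True
```

Note that aggressive normalization combined with substring matching can also create false positives, so in practice it is usually one signal among several rather than a final verdict.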

To solve this problem, researchers created a tool called pyleetspeak that simulates these tricks in multiple languages, and used the resulting data to train named entity recognition models to catch them. Instead of only looking for exact keywords, the models learned to recognize the patterns hidden in disguised text.

Detecting Word Camouflage Using NER Tools. (Source)
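The approach described above pairs synthetic camouflage with a sequence-labeling model. The sketch below shows a simplified version of the data-generation half only: it disguises a target word and emits token-level BIO tags that an NER model could be trained on. It does not use pyleetspeak’s actual API; the `camouflage` and `make_example` functions, the substitution table, and the `CAMO` label are hypothetical.

```python
import random

SUBSTITUTIONS = {"a": "4", "e": "3", "i": "1", "o": "0", "s": "5"}  # hypothetical swaps

def camouflage(word: str, rng: random.Random, p: float = 0.5) -> str:
    """Swap each letter for a look-alike with probability p, then maybe insert a dot."""
    chars = [SUBSTITUTIONS.get(c, c) if rng.random() < p else c for c in word]
    if len(chars) > 2 and rng.random() < p:
        chars.insert(rng.randrange(1, len(chars)), ".")  # dot strictly inside the word
    return "".join(chars)

def make_example(sentence: str, target: str, rng: random.Random) -> list[tuple[str, str]]:
    """Disguise `target` in the sentence and return (token, BIO tag) pairs."""
    tagged = []
    for token in sentence.split():
        if token.lower() == target:
            tagged.append((camouflage(token, rng), "B-CAMO"))  # single-token entity
        else:
            tagged.append((token, "O"))
    return tagged

rng = random.Random(42)  # fixed seed so the synthetic data is reproducible
for token, tag in make_example("they promote violence online", "violence", rng):
    print(f"{token}\t{tag}")
```

Generating many such examples across words, sentence templates, and languages gives a model labeled disguised spans to learn from, instead of relying on a growing list of hand-written variants.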

When tested, these models achieved high accuracy across several languages, showing that NER can spot harmful content even when it’s disguised. This approach lets platforms build smarter moderation systems that are harder to fool and more effective at keeping online spaces safe.

We’ve already touched on how NLP and named entity recognition tools strengthen content moderation, but there’s more at play than just these techniques.

As online conversations grow more complex, keeping digital spaces safe requires more than simply deleting harmful posts. Effective moderation now demands an understanding of context, the ability to adapt to new forms of expression, and the foresight to stay ahead of emerging threats.

At the heart of this effort are two powerful technologies: NLP and generative AI. NLP acts like a watchdog, scanning for hate speech, spam, policy violations, or abusive language. Generative AI, on the other hand, acts like a sparring partner, creating challenging scenarios so moderation systems can learn to defend against evolving threats.
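The sparring-partner idea can be sketched as an evaluation loop: a generator produces adversarial variants of known violating text, and the moderation filter is scored on how many it still catches. Here a rule-based `perturb` function stands in for a generative model, and `keyword_filter` is a deliberately weak hypothetical filter; both are assumptions for illustration only.

```python
from typing import Callable

def keyword_filter(text: str) -> bool:
    """Deliberately weak hypothetical filter: exact lowercase keyword match."""
    return "scam" in text.lower()

def perturb(text: str) -> list[str]:
    """Stand-in for a generative model: produce evasive rewrites of the input."""
    return [
        text.upper(),                     # case change
        text.replace("scam", "sc@m"),     # character swap
        text.replace("scam", "s c a m"),  # spacing
        text.replace("scam", "scaaam"),   # letter stretching
    ]

def catch_rate(seeds: list[str], generator: Callable[[str], list[str]],
               detector: Callable[[str], bool]) -> tuple[float, list[str]]:
    """Return the share of generated variants detected, plus the ones that slipped through."""
    variants = [v for seed in seeds for v in generator(seed)]
    missed = [v for v in variants if not detector(v)]
    return 1 - len(missed) / len(variants), missed

rate, missed = catch_rate(["this giveaway is a scam"], perturb, keyword_filter)
print(f"caught {rate:.0%}")  # caught 25%
for m in missed:
    print("missed:", m)
```

The missed variants are exactly the examples worth adding to the next round of training data, which is how the sparring partner makes the watchdog stronger over time.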

Comparing natural language processing and generative AI shows that the two technologies complement each other, enhancing content moderation in two key ways:

Even though NLP and named entity recognition tools have made major progress in content moderation, there are still important hurdles to overcome. Some of the biggest ones include:

At Objectways, we understand these challenges and offer end-to-end content moderation services that combine the power of AI with human judgment. From handling slang and dynamic language to ensuring fairness in complex contexts, our team can help keep your moderation system accurate, scalable, and trustworthy.

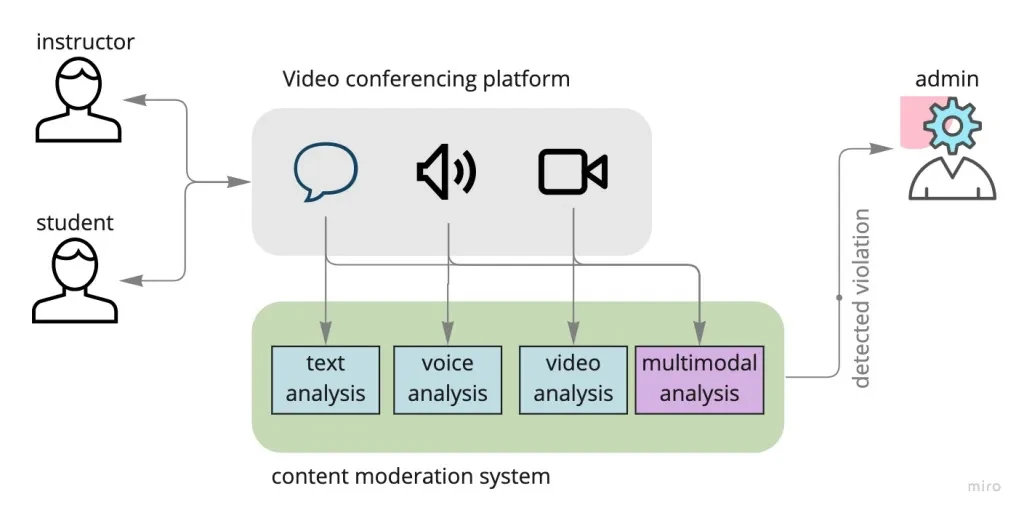

The future of content moderation is moving toward systems that are both smarter and more inclusive. Multimodal AI is a big part of this shift. Instead of looking at text, images, audio, or video separately, these models will analyze them together, similar to how people naturally make sense of online content.

Multimodal AI and Content Moderation (Source)
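The simplest way to combine modalities is late fusion: each modality is scored by its own model, and the scores are merged into one decision. The sketch below uses a weighted average plus a veto when any single modality is highly confident. The weights, thresholds, and scores are hypothetical assumptions; the multimodal models described above usually go further and fuse learned representations inside a single model (joint fusion) rather than combining final scores.

```python
# Hypothetical per-modality weights; in practice these would be tuned on validation data.
WEIGHTS = {"text": 0.4, "image": 0.3, "audio": 0.3}

def fuse(scores: dict[str, float], threshold: float = 0.6, veto: float = 0.9) -> bool:
    """Late fusion: weighted average of available modality scores, with a single-modality veto."""
    available = {m: s for m, s in scores.items() if m in WEIGHTS}
    if not available:
        return False
    if max(available.values()) >= veto:
        return True  # one modality is highly confident on its own
    total_weight = sum(WEIGHTS[m] for m in available)  # renormalize over present modalities
    combined = sum(WEIGHTS[m] * s for m, s in available.items()) / total_weight
    return combined >= threshold

# A meme whose caption looks mild but whose image and audio are both concerning.
print(fuse({"text": 0.3, "image": 0.85, "audio": 0.8}))  # True
print(fuse({"text": 0.3, "image": 0.2}))                 # False
print(fuse({"text": 0.95}))                              # True
```

Judged on its text alone, the first post would pass; combining the signals is what surfaces it, which mirrors how people read a meme as a whole.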

Another important change is the move toward culture-independent models. Right now, most moderation tools work best in English and a few other major languages. This leaves a gap when it comes to smaller languages and regional dialects, which means harmful content can slip through. Closing this gap is essential for building fairer and more inclusive moderation systems that protect people, no matter what language they use.

Named entity recognition in NLP has become a key part of cutting-edge content moderation. By combining language understanding with machine learning, these tools make platforms safer while easing the workload on human moderators.

For any organization that hosts user-generated content, NLP-driven moderation is no longer optional. The most effective systems combine AI with human judgment to strike the right balance between speed, accuracy, and fairness. As online conversations keep evolving, named entity recognition in NLP will continue to play a central role in building safer and more inclusive digital spaces.

At Objectways, we work with teams to put this into practice. By combining advanced tools with human expertise, we help platforms design moderation systems that are accurate, scalable, and fair.

If you are exploring ways to strengthen your platform’s safety, we would love to connect and talk through how Objectways can support your goals. Book a call with us today!