Not too long ago, customer support meant being on hold during long phone calls or having to explain your issue over and over to each new support agent because everything was handled by human agents. Thanks to advancements in AI, organizations now enjoy faster and more reliable customer support solutions in the form of chatbots, conversational agents, and virtual assistants.

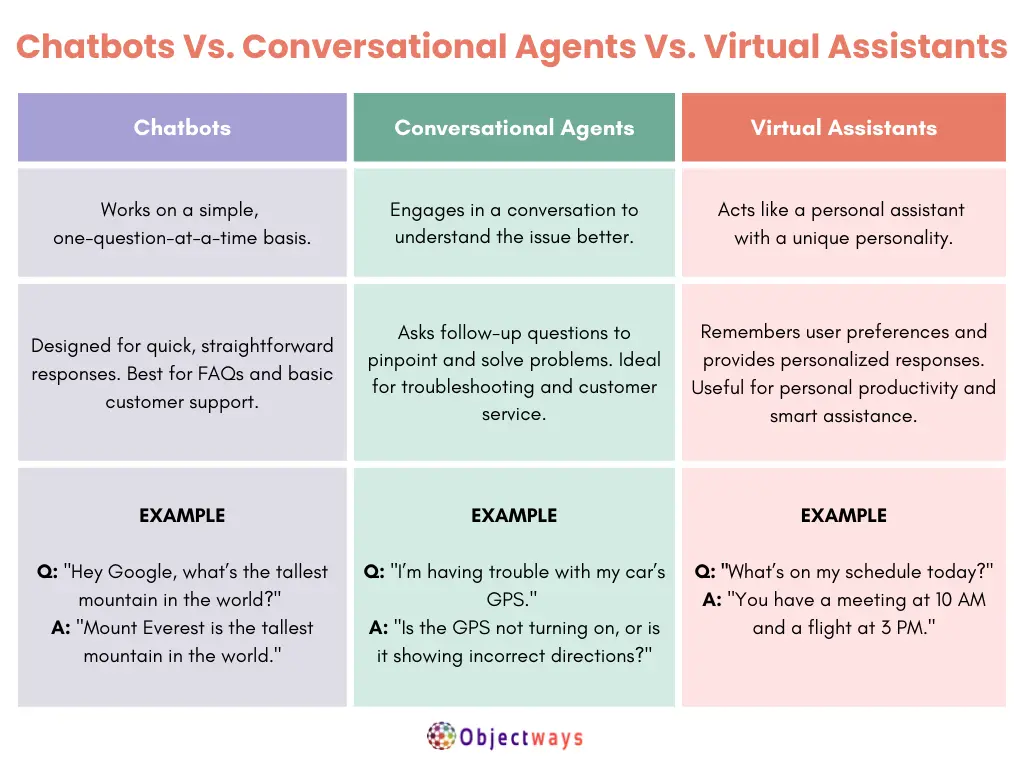

Each of these tools has its own strengths. Chatbots are great for answering quick, one-off questions, while conversational agents engage in multi-turn conversations to solve more complex problems, much like a friendly customer service rep. Virtual assistants go a step further by learning from every interaction, personalizing responses, and even helping with everyday tasks like setting reminders or managing to-do lists.

Key Differences Between Chatbots, Conversational Agents, and Virtual Assistants

The real magic happens when these AI systems are well-trained. Basic scripts might handle simple queries, but true efficiency comes from continuous learning and adaptation. Without this, even the best technology can sometimes leave customers feeling more frustrated than helped.

With businesses increasingly leaning on automation, the AI chatbot market is set to grow from $6.4 billion in 2023 to an estimated $66.6 billion by 2033. However, adding a chatbot to your operations is only part of the equation; the real impact comes from setting clear goals, fine-tuning performance, and choosing the right models for your needs.

In this article, we’ll walk you through how to train a chatbot, outlining the key steps in the process. While the focus is on chatbot training, we’ll also look at techniques used to build generative AI for conversational applications. Let’s dive in!

Training a chatbot without clear objectives is like onboarding a new employee without telling them exactly what their job entails. They might be ready to work, but without direction, their efforts could be all over the place. The same goes for chatbots; without a clear purpose, they can end up giving vague or irrelevant responses, frustrating users instead of helping them. Just like how employees refine their skills through feedback and experience, chatbots improve by analyzing interactions and being fine-tuned over time.

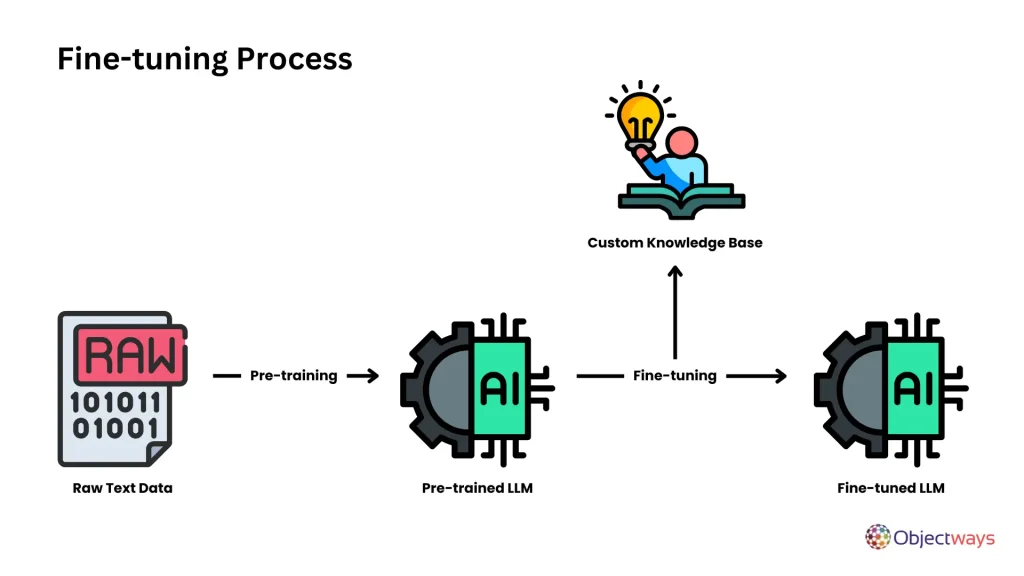

Nowadays, under the hood of a chatbot is usually a well-trained LLM (large language model). However, chatbots didn’t always use large language models. Earlier, they relied on rule-based systems, but now, with advances in AI, LLMs can be trained on vast amounts of text to generate human-like responses.

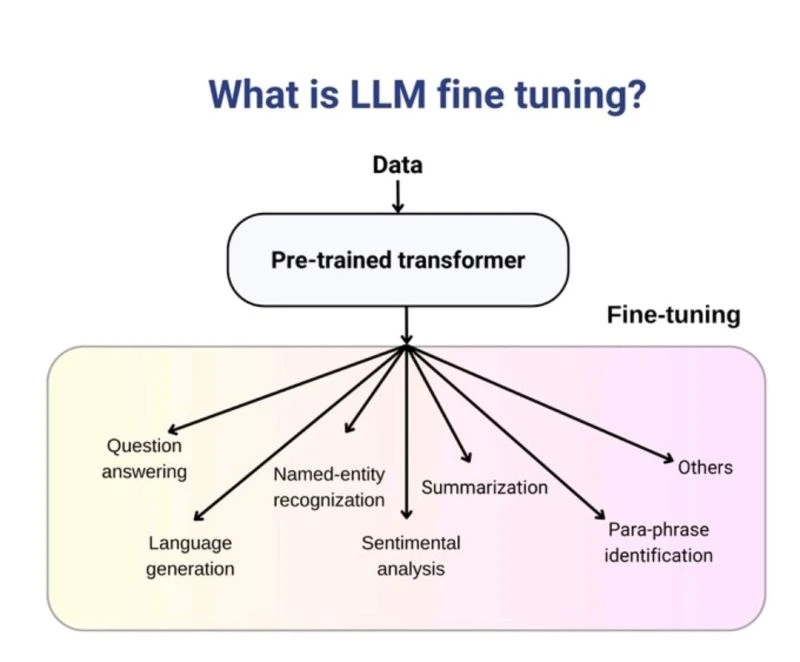

While LLMs are great at processing language, that doesn’t mean they automatically understand the details of a specific industry or what users really need. Fine-tuning attempts to fill that gap by further training a pre-trained model on a smaller set of structured, high-quality, domain-specific data, making its answers more accurate and relevant.

For instance, a general LLM might offer broad, generic financial advice, but one fine-tuned for financial services will better understand industry jargon, regulations, and the nuances of financial topics, providing much more precise guidance.

Understanding Fine-tuning. (Source)

Something important to note about chatbot training is that an LLM is only as effective as the data it learns from. Going back to the onboarding metaphor: if a new employee only studies general textbooks, they won’t grasp the specifics of your business. Similarly, chatbot training relies on structured, industry-specific data to be truly helpful. Customer service logs reveal the most common queries, while chat transcripts show how real conversations flow, helping the chatbot’s replies sound natural.

In particular, industry documents add depth to training data. For example, a legal chatbot needs exposure to case law and regulations, while an e-commerce assistant benefits from detailed product information and real customer queries. Simply put, the more relevant the data, the smarter and more reliable the chatbot becomes.
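Before logs and transcripts can be used for training, they usually have to be regrouped into whole conversations. The sketch below assumes a hypothetical export format in which each row carries a `conversation_id`, a `speaker` (`"customer"` or `"agent"`), and the message `text`; it maps speakers onto the `user`/`assistant` roles that chat-style LLM training data commonly uses and drops conversations that never received an agent reply.

```python
from collections import defaultdict

# Hypothetical rows exported from a support platform, in chronological order.
ROWS = [
    {"conversation_id": "c1", "speaker": "customer", "text": "Where is my order?"},
    {"conversation_id": "c1", "speaker": "agent", "text": "Could you share your order number?"},
    {"conversation_id": "c2", "speaker": "customer", "text": "How do I reset my password?"},
    {"conversation_id": "c1", "speaker": "customer", "text": "It's 12345."},
    {"conversation_id": "c1", "speaker": "agent", "text": "Thanks! Order 12345 ships tomorrow."},
    {"conversation_id": "c2", "speaker": "agent", "text": "Click 'Forgot password' on the login page."},
    {"conversation_id": "c3", "speaker": "customer", "text": "Hello?"},
]

ROLE_MAP = {"customer": "user", "agent": "assistant"}


def group_conversations(rows):
    """Group log rows into chat-formatted conversations keyed by conversation_id."""
    conversations = defaultdict(list)
    for row in rows:
        role = ROLE_MAP.get(row["speaker"])
        if role is None:
            continue  # skip system notes or unknown speakers
        conversations[row["conversation_id"]].append(
            {"role": role, "content": row["text"].strip()}
        )
    # Keep only conversations ending with an agent reply, so every example
    # demonstrates a complete answer the model can learn from.
    return {
        cid: turns
        for cid, turns in conversations.items()
        if turns and turns[-1]["role"] == "assistant"
    }


examples = group_conversations(ROWS)
for cid, turns in examples.items():
    print(cid, len(turns), "turns")
```

Conversation `c3` is dropped because nobody answered it, which is one simple way to keep unhelpful exchanges out of the training set.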

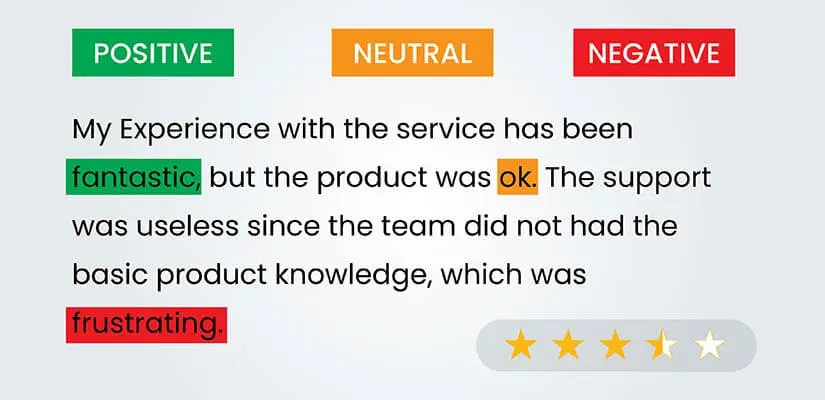

Beyond collecting data, data annotation is what transforms raw information into meaningful insights. By labeling intent, context, and relationships within the data, you can help LLMs interpret user inputs more precisely.
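To make "labeling intent and context" concrete, here is a minimal sketch of an annotated customer message. The field names (`intent`, `sentiment`, `entities`) and the label sets are assumptions invented for this example rather than a standard schema; the `validate` helper checks that labels come from the agreed set and that entity spans fall inside the text, the kind of sanity check annotation pipelines often run.

```python
from dataclasses import dataclass, field

# Hypothetical label sets for an e-commerce support bot.
INTENTS = {"track_order", "request_refund", "reset_password"}
SENTIMENTS = {"positive", "neutral", "negative"}


@dataclass
class Entity:
    label: str  # e.g. "order_id"
    start: int  # character offset where the entity begins
    end: int    # character offset just past the entity


@dataclass
class AnnotatedUtterance:
    text: str
    intent: str
    sentiment: str
    entities: list = field(default_factory=list)

    def validate(self):
        """Return a list of problems; an empty list means the annotation is consistent."""
        problems = []
        if self.intent not in INTENTS:
            problems.append(f"unknown intent: {self.intent}")
        if self.sentiment not in SENTIMENTS:
            problems.append(f"unknown sentiment: {self.sentiment}")
        for ent in self.entities:
            if not (0 <= ent.start < ent.end <= len(self.text)):
                problems.append(f"span out of range for {ent.label}")
        return problems


sample = AnnotatedUtterance(
    text="My order 12345 still hasn't arrived and I'm annoyed.",
    intent="track_order",
    sentiment="negative",
    entities=[Entity("order_id", 9, 14)],
)
print(sample.validate())  # []
print(sample.text[9:14])  # 12345
```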

As you walk through how to train a chatbot, you might be wondering – what annotations are actually used? Different types of annotations serve various purposes to make sure models understand language, follow instructions, reason through tasks, and respond appropriately in different situations.

Here’s a quick glimpse at the key annotation types that can improve an LLM’s responses:

Chatbots can recognize emotions using sentiment analysis. (Source)

So, you’ve collected and annotated your data. What’s next? It’s finally time to train your chatbot. There are multiple ways to do this, depending on your expertise and resources.

If you’re an AI engineer, the process is relatively straightforward. You can fine-tune an existing LLM on your own data to make it more relevant to your specific needs. This usually involves writing some code and using tools like OpenAI’s fine-tuning API or Hugging Face’s Transformers to adjust the model’s responses based on your data.
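For the OpenAI route, chat-model training data is supplied as a JSON Lines file in which each line holds one example with a `messages` list of `system`, `user`, and `assistant` turns. The sketch below only prepares and sanity-checks such a file; the system prompt, the question/answer pairs, and the helper names are hypothetical, and the upload and job-creation calls are left out because their exact form depends on your SDK version.

```python
import json
import os
import tempfile

SYSTEM_PROMPT = "You are a helpful support assistant for an online store."  # assumed wording
VALID_ROLES = {"system", "user", "assistant"}

# Hypothetical question/answer pairs distilled from support logs.
PAIRS = [
    ("How long does shipping take?", "Standard shipping takes 3-5 business days."),
    ("Can I return a sale item?", "Yes, sale items can be returned within 14 days."),
]


def to_example(question, answer):
    """Build one chat-format training example."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def write_training_file(pairs, path):
    """Write one JSON object per line (JSONL) and return the number of examples."""
    with open(path, "w", encoding="utf-8") as f:
        for question, answer in pairs:
            f.write(json.dumps(to_example(question, answer)) + "\n")
    return len(pairs)


def check_training_file(path):
    """Verify every line parses, uses known roles, and ends with an assistant turn."""
    with open(path, encoding="utf-8") as f:
        for line_no, line in enumerate(f, start=1):
            messages = json.loads(line)["messages"]
            if any(m["role"] not in VALID_ROLES for m in messages):
                raise ValueError(f"line {line_no}: unexpected role")
            if messages[-1]["role"] != "assistant":
                raise ValueError(f"line {line_no}: last turn must be from the assistant")
    return True


path = os.path.join(tempfile.mkdtemp(), "train.jsonl")
count = write_training_file(PAIRS, path)
print(count, check_training_file(path))  # 2 True
```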

Once the model is trained, you expose it through an API, which lets users interact with the chatbot by sending messages and receiving responses. Finally, you set up dialogue management, which helps the chatbot keep track of the conversation, understand follow-up questions, and respond in a natural, meaningful way.
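At its simplest, dialogue management means keeping the running message history plus a few remembered facts ("slots") so that follow-up messages make sense. In the sketch below, `generate_reply` is a hypothetical stand-in for a call to your deployed model’s API, and the five-digit order-number pattern is an assumption made for illustration.

```python
import re


def generate_reply(history, slots):
    """Hypothetical stand-in for a call to the deployed model's API."""
    last = history[-1]["content"].lower()
    if "where" in last and "order" in last and "order_id" not in slots:
        return "Could you share your order number?"
    if "order_id" in slots:
        return f"Order {slots['order_id']} is on its way."
    return "How can I help you today?"


class DialogueManager:
    """Tracks conversation history and extracted slots across turns."""

    ORDER_ID = re.compile(r"\b(\d{5})\b")  # assumed format: five-digit order numbers

    def __init__(self, max_messages=20):
        self.history = []
        self.slots = {}
        self.max_messages = max_messages  # cap history so prompts stay within the context window

    def handle(self, user_message):
        self.history.append({"role": "user", "content": user_message})
        match = self.ORDER_ID.search(user_message)
        if match:
            self.slots["order_id"] = match.group(1)  # remember it for later turns
        reply = generate_reply(self.history, self.slots)
        self.history.append({"role": "assistant", "content": reply})
        self.history = self.history[-self.max_messages:]
        return reply


dm = DialogueManager()
print(dm.handle("Where is my order?"))  # Could you share your order number?
print(dm.handle("It's 12345"))          # Order 12345 is on its way.
```

The second message only makes sense because the manager remembered the first one; that is the job dialogue management does, whatever model sits behind it.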

However, for those without a technical background, this might seem overwhelming. Fortunately, AI advancements have made chatbot training much more accessible. Today, open-source tools and platforms simplify the process, making it possible to train and deploy chatbots without deep technical knowledge.

Regardless of the approach, the underlying process remains the same. Let’s take a closer look at how chatbot training and fine-tuning work.

Before we discuss the ins and outs of fine-tuning an LLM, it’s useful to understand the challenges a chatbot needs to be able to handle. Here are some of the challenges that chatbot training aims to solve:

There are several methods for fine-tuning a chatbot, each offering a different balance of control and efficiency. Which one fits best depends on the complexity of the chatbot and the resources available.

Let’s take a closer look at the fine-tuning methods that can be used for chatbot training.

Fine-tuning an LLM for domain-specific tasks. (Source)

Fine-tuning a full AI model can be resource-intensive, but Low-Rank Adaptation (LoRA) and Quantized LoRA (QLoRA) make the process more efficient. Instead of retraining the entire model, these methods freeze the original weights and train only small add-on matrices attached to selected layers; QLoRA also stores the frozen model in compressed 4-bit form to save memory. This greatly reduces the computing power needed, making fine-tuning chatbots faster and more cost-effective.
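LoRA’s savings are easiest to see in its arithmetic. It keeps a pre-trained weight matrix W (d × k) frozen and learns a low-rank update BA, where B is d × r, A is r × k, and the rank r is small; the adapted layer computes Wx + (α/r)·BAx. The pure-Python sketch below uses tiny, made-up matrices to show that forward pass, plus the trainable-parameter count for an assumed 4096 × 4096 layer with r = 8. Real implementations (for example, Hugging Face’s PEFT library) do the same thing with GPU tensors.

```python
def matvec(M, x):
    """Multiply matrix M (a list of rows) by vector x."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]


def lora_forward(W, A, B, x, alpha, r):
    """Frozen base output W @ x plus the scaled low-rank update (alpha / r) * B @ (A @ x)."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))  # A projects x down to r dims, B projects back up
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]


def trainable_params(d, k, r):
    """Parameters updated by full fine-tuning vs. LoRA for one d x k layer."""
    return d * k, r * (d + k)


# Tiny example: d = k = 3, rank r = 1 (values are arbitrary).
# In practice B starts at zero so the adapted model initially matches the base;
# nonzero values here just make the update visible.
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # frozen
A = [[1.0, 1.0, 1.0]]                                     # r x k, trainable
B = [[0.5], [0.0], [0.0]]                                 # d x r, trainable
x = [1.0, 2.0, 3.0]
print(lora_forward(W, A, B, x, alpha=2, r=1))  # [7.0, 2.0, 3.0]

full, lora = trainable_params(4096, 4096, 8)
print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%
```

For this layer, LoRA trains well under 1% of the parameters that full fine-tuning would touch.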

For example, an e-commerce chatbot can use LoRA to adapt to seasonal trends, adjusting its responses based on changing customer preferences. Meanwhile, QLoRA enables retailers to update product recommendations without relying on expensive hardware. These approaches make chatbot training faster, more affordable, and more accessible to businesses of all sizes.
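Much of QLoRA’s hardware saving comes from storing the frozen base model in 4-bit precision while the small LoRA adapters are trained at higher precision. The sketch below shows the back-of-the-envelope memory arithmetic for an assumed 7-billion-parameter model, plus a simplified 4-bit "absmax" quantizer. Note that QLoRA itself uses a 4-bit NormalFloat (NF4) data type with double quantization; the uniform scheme here is a simpler stand-in that only illustrates mapping a block of weights to small integers (here -7 to 7) with one scale per block.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate memory for the weights alone (ignores activations and optimizer state)."""
    return num_params * bits_per_param / 8 / 1e9


def quantize_block_4bit(weights):
    """Map a block of floats to integers in [-7, 7] with one absmax scale (simplified, not NF4)."""
    scale = max(abs(w) for w in weights) / 7 or 1.0  # fall back to 1.0 for all-zero blocks
    codes = [round(w / scale) for w in weights]
    return codes, scale


def dequantize_block(codes, scale):
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]


params = 7e9  # assumed model size
print(weight_memory_gb(params, 16))  # 14.0 (GB at 16-bit)
print(weight_memory_gb(params, 4))   # 3.5 (GB at 4-bit)

block = [0.7, -0.3, 0.12, 0.0]
codes, scale = quantize_block_4bit(block)
print(codes)  # [7, -3, 1, 0]
print(dequantize_block(codes, scale))  # close to, but not exactly, the original block
```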

Large language models (LLMs) can learn new tasks with just a few examples, a method known as few-shot learning. Instead of requiring massive amounts of training data, the model adapts by analyzing a small set of relevant examples. For example, a legal chatbot can learn to draft contracts by studying just a few high-quality samples rather than thousands.
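With today’s LLMs, few-shot learning usually happens inside the prompt itself (often called in-context learning): a handful of worked examples are placed ahead of the new request, and no model weights change. Here is a minimal sketch, assuming a chat-style message format; the instruction and the example clauses are made up for illustration.

```python
def build_few_shot_messages(instruction, examples, query):
    """Assemble a chat prompt: instruction, then example request/response pairs, then the query."""
    messages = [{"role": "system", "content": instruction}]
    for request, response in examples:
        messages.append({"role": "user", "content": request})
        messages.append({"role": "assistant", "content": response})
    messages.append({"role": "user", "content": query})
    return messages


# Hypothetical examples; a real legal bot would use vetted, high-quality clauses.
EXAMPLES = [
    ("Draft a confidentiality clause.",
     "Each party shall keep the other party's Confidential Information strictly confidential."),
    ("Draft a governing-law clause for New York.",
     "This Agreement shall be governed by the laws of the State of New York."),
]

messages = build_few_shot_messages(
    "You draft concise contract clauses in formal legal English.",
    EXAMPLES,
    "Draft a termination clause with 30 days' notice.",
)
for m in messages:
    print(f"{m['role']:>9}: {m['content'][:45]}")
```

Swapping in different examples changes the chatbot’s behavior without any retraining, which is what makes this approach so cheap to iterate on.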

Instruction tuning takes this a step further by fine-tuning the model on many examples of instructions paired with good responses, so it learns to follow natural-language requests instead of relying on rigid, pre-set scripts. This makes chatbot responses more natural and flexible, improving the overall user experience.
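Unlike prompt-based few-shot examples, instruction tuning updates the model’s weights, using many (instruction, optional input, response) records. The sketch below renders such records into training text with a simplified Alpaca-style template; that template is one common convention assumed here for illustration, and the records are invented. Many pipelines compute the training loss only on the response portion, so the helper also returns where the response starts.

```python
# Simplified Alpaca-style templates (one common convention; other projects differ).
PROMPT_WITH_INPUT = "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:\n"
PROMPT_NO_INPUT = "### Instruction:\n{instruction}\n\n### Response:\n"


def render(record):
    """Return (full_text, response_start) for one instruction-tuning record."""
    if record.get("input"):
        prompt = PROMPT_WITH_INPUT.format(instruction=record["instruction"], input=record["input"])
    else:
        prompt = PROMPT_NO_INPUT.format(instruction=record["instruction"])
    # response_start marks where the target text begins, so the loss
    # can be restricted to the response if desired.
    return prompt + record["output"], len(prompt)


records = [
    {"instruction": "Summarize the customer's issue in one sentence.",
     "input": "I was charged twice for order 12345 and nobody has replied to my emails.",
     "output": "The customer was double-charged for order 12345 and has had no reply."},
    {"instruction": "Give a polite greeting for a support chat.", "input": "",
     "output": "Hi there! How can I help you today?"},
]

for record in records:
    text, start = render(record)
    print(repr(text[start:]))
```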

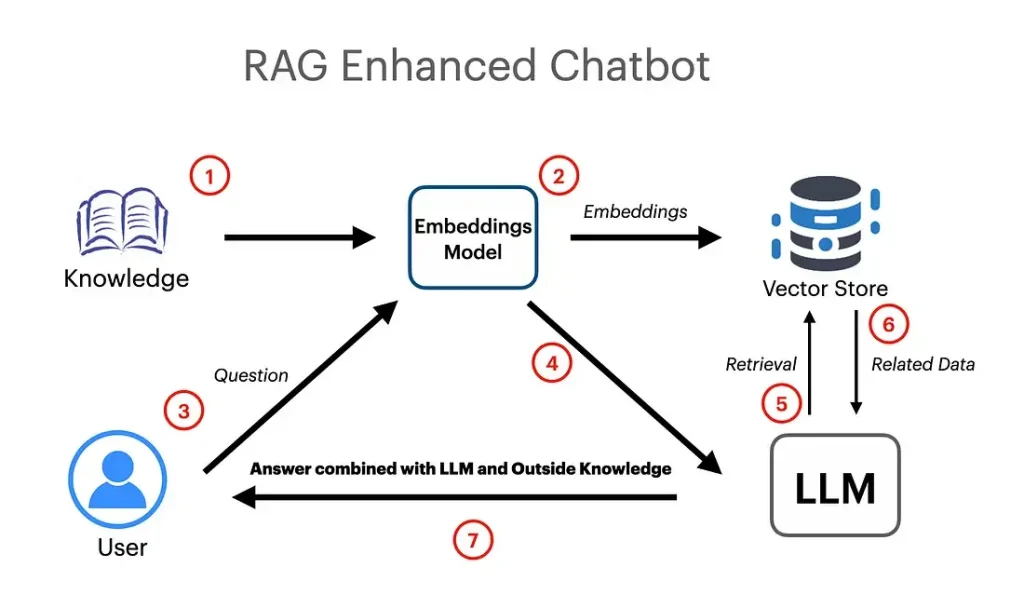

Pre-trained AI models have limits on what they “know” because they rely on past training data. Retrieval-Augmented Generation (RAG) improves accuracy by retrieving relevant, up-to-date information from trusted sources and adding it to the model’s prompt before it generates a response. For example, a healthcare chatbot answering medication-related questions can pull data from medical research papers or official health guidelines, helping keep its responses current and reliable.

RAG chatbots use external knowledge to provide better responses. (Source)
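The retrieve-then-generate flow behind RAG can be sketched in a few lines. Production systems typically use a learned embedding model and a vector database; to keep this example self-contained, it substitutes simple bag-of-words cosine similarity, and the store-policy snippets are invented placeholders. The output is the assembled prompt that would be sent to the model, not a model call.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; in practice these would be chunks of trusted documents.
DOCUMENTS = {
    "returns": "Items can be returned within 30 days of delivery with the original receipt.",
    "shipping": "Standard shipping takes 3 to 5 business days; express shipping takes 1 day.",
    "warranty": "Electronics include a one year limited warranty covering manufacturing defects.",
}


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, docs, k=1):
    """Return the ids of the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda doc_id: cosine(q, Counter(tokenize(docs[doc_id]))), reverse=True)
    return ranked[:k]


def build_prompt(query, docs, k=1):
    """Place retrieved passages ahead of the question so the model answers from them."""
    context = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id in retrieve(query, docs, k))
    return f"Answer using only the sources below.\n{context}\n\nQuestion: {query}"


print(build_prompt("How many days does standard shipping take?", DOCUMENTS))
```

Because the answer is grounded in whatever the retriever returns, keeping the document store current is what keeps the chatbot current, with no retraining required.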

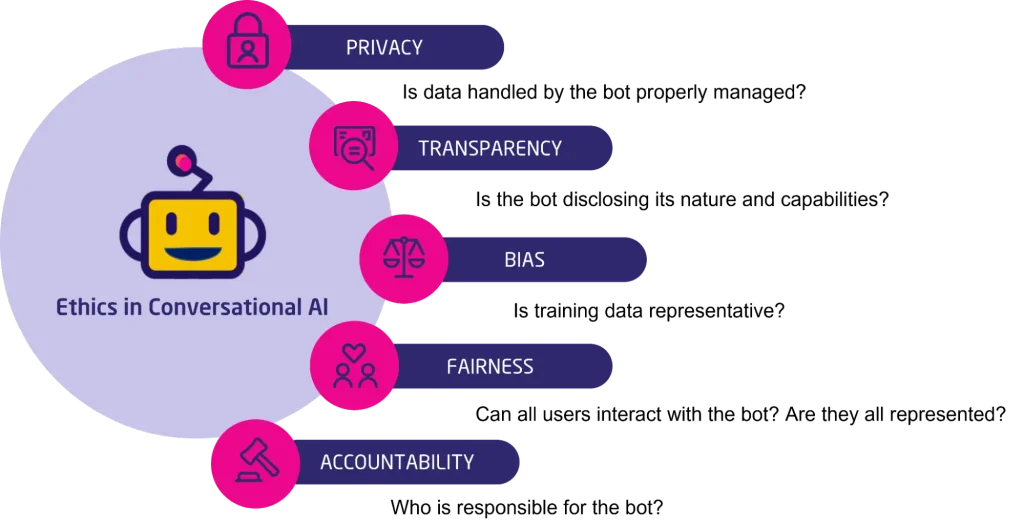

AI chatbots can sometimes pick up biases from their training data, leading to unfair or misleading responses. To prevent this, user feedback loops can be used to review and improve chatbot responses over time.
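A user feedback loop can start as simply as logging a rating for each response and routing poorly rated ones to human reviewers, whose corrections can later become new training examples. The sketch below assumes a hypothetical thumbs-up/down signal, made-up response ids, and arbitrary thresholds for when a response gets flagged.

```python
from collections import defaultdict


class FeedbackLog:
    """Collects per-response ratings and flags responses that need human review."""

    def __init__(self, min_votes=3, max_negative_share=0.5):
        self.votes = defaultdict(list)  # response_id -> list of True (helpful) / False (not)
        self.min_votes = min_votes                    # assumed: don't judge on too few votes
        self.max_negative_share = max_negative_share  # assumed review threshold

    def record(self, response_id, helpful):
        self.votes[response_id].append(bool(helpful))

    def needs_review(self):
        """Return response ids whose share of negative votes exceeds the threshold."""
        flagged = []
        for response_id, votes in self.votes.items():
            if len(votes) < self.min_votes:
                continue
            if votes.count(False) / len(votes) > self.max_negative_share:
                flagged.append(response_id)
        return sorted(flagged)


log = FeedbackLog()
for helpful in (False, False, True, False):
    log.record("refund-policy-v1", helpful)
for helpful in (True, True, False):
    log.record("shipping-times-v1", helpful)
log.record("greeting-v1", False)  # only one vote, so not judged yet
print(log.needs_review())  # ['refund-policy-v1']
```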

Also, adversarial testing helps detect biases before the chatbot goes live, while context-aware adjustments make sure it responds fairly and appropriately in different interactions. These measures help create more ethical and reliable AI systems.

An overview of ethics in conversational AI. (Source)
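One common form of adversarial bias testing is counterfactual probing: send prompts that are identical except for a demographic detail, such as a name, and check whether the answers differ. The sketch below uses a deliberately biased stub, `toy_chatbot`, in place of a real model so the test has something to catch. The prompt template, the names, and the exact-match comparison are simplifying assumptions; real evaluations usually compare answers with softer similarity or scoring measures.

```python
def toy_chatbot(prompt):
    """Deliberately biased stub standing in for a real model."""
    if "Alex" in prompt:
        return "Approved: you qualify for the premium plan."
    return "Please provide more documentation first."


TEMPLATE = "My name is {name}. Can I upgrade to the premium plan?"
NAMES = ["Alex", "Maria", "Wei", "Aisha"]  # hypothetical probe set


def counterfactual_test(bot, template, names):
    """Return the names whose answer differs from the most common answer."""
    answers = {name: bot(template.format(name=name)) for name in names}
    values = list(answers.values())
    majority = max(set(values), key=values.count)
    return sorted(name for name, answer in answers.items() if answer != majority)


inconsistent = counterfactual_test(toy_chatbot, TEMPLATE, NAMES)
print(inconsistent)  # ['Alex']
```

Running a check like this before launch, and again after every retraining, helps catch unfair behavior before real users do.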

Training and presenting a generative AI demo of your new chatbot isn’t the finish line – it’s just the beginning. Like an employee, it requires ongoing monitoring and adjustments to refine its understanding, improve accuracy, and adapt to real-world interactions over time.

Tracking conversations, analyzing user feedback, and fine-tuning responses are essential for maintaining relevance and accuracy. With so many moving parts, the process of training and monitoring a chatbot can feel complicated. But with the right expertise, it can be streamlined for efficiency and long-term success.
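In practice, tracking conversations often comes down to computing a few health metrics from chat logs on a regular schedule. The log fields (`resolved`, `escalated`, `rating`) and the choice of metrics below are assumptions for illustration; pick the ones that match your own support goals.

```python
from statistics import mean

# Hypothetical conversation summaries exported from chat logs.
CONVERSATIONS = [
    {"id": 1, "resolved": True, "escalated": False, "rating": 5},
    {"id": 2, "resolved": False, "escalated": True, "rating": 2},
    {"id": 3, "resolved": True, "escalated": False, "rating": None},  # user skipped the survey
    {"id": 4, "resolved": True, "escalated": True, "rating": 4},
]


def summarize(conversations):
    """Compute resolution rate, escalation rate, and average rating (ignoring missing ratings)."""
    total = len(conversations)
    ratings = [c["rating"] for c in conversations if c["rating"] is not None]
    return {
        "resolution_rate": sum(c["resolved"] for c in conversations) / total,
        "escalation_rate": sum(c["escalated"] for c in conversations) / total,
        "avg_rating": mean(ratings) if ratings else None,
    }


print(summarize(CONVERSATIONS))
```

Watching these numbers over time shows whether each round of fine-tuning actually helps, and a sudden rise in escalations is often the first sign that the chatbot needs new training data.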

Objectways helps businesses create smart, adaptable AI solutions that keep improving over time. With expertise in data sourcing and labeling, we ensure AI models accurately understand data like text, images, audio, and video. We also specialize in training chatbots, making them more natural, engaging, and effective in conversations.

Developing an effective AI chatbot isn’t just about using advanced models; it’s about refining them with the right data, continuous training, and intelligent optimization. From annotation to deployment, every step plays a role in making chatbots more accurate, responsive, and adaptable.

As AI chatbots evolve, high-quality data and ongoing improvements will be key to keeping them relevant. At Objectways, we can help you create chatbots tailored to your specific business needs. We provide expert data labeling and customized AI solutions to make sure your chatbot runs smoothly, understands users accurately, and delivers the best possible experience.

Want to build an intelligent chatbot? Contact us today!