Most modern AI systems depend on annotated data created by skilled data annotators, also called labelers. Many end users and company executives may not realize this, because the work of data annotators often happens behind the scenes.

Before an AI model can recognize an object, understand a sentence, or respond to a voice command, someone has to teach it what the data actually means. That’s why the data annotation process is important.

Data annotation is the process of adding meaning to raw data so machines can understand and learn from it. By assigning labels, categories, or contextual information, unstructured data becomes usable for training AI models. Without annotation, data lacks the clarity needed for AI systems to recognize patterns or make accurate decisions.
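To make this concrete, here is a minimal sketch of what "adding meaning" can look like for a single sentence. The example sentence, the label names, and the `tag_span` helper are all illustrative assumptions, not a format used by any particular tool.

```python
def tag_span(text, phrase, label):
    """Locate the first occurrence of a phrase and return a labeled character span."""
    start = text.find(phrase)
    if start == -1:
        raise ValueError(f"{phrase!r} not found in text")
    return {"start": start, "end": start + len(phrase), "label": label}

# Raw, unstructured input: just a string.
raw_text = "Book a table for two at Luigi's tomorrow at 7pm"

# Annotated version: the same text plus an intent and labeled entity spans.
annotated = {
    "text": raw_text,
    "intent": "book_restaurant",  # hypothetical category
    "entities": [
        tag_span(raw_text, "two", "party_size"),
        tag_span(raw_text, "Luigi's", "restaurant"),
        tag_span(raw_text, "tomorrow", "date"),
        tag_span(raw_text, "7pm", "time"),
    ],
}

for entity in annotated["entities"]:
    print(entity["label"], "->", raw_text[entity["start"]:entity["end"]])
```

The raw string alone tells a model nothing about which words matter. The labeled spans are what a training pipeline would actually learn from.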

Many organizations treat data labeling as simple manual work that anyone can do with basic instructions. Such assumptions often lead companies to use crowdsourcing or generic labor to quickly and cheaply annotate data. This may seem reasonable since drawing boxes or tagging text can appear straightforward.

However, that’s not entirely true. Small labeling mistakes compound over time and can completely change how an AI model functions. Inconsistent annotations can confuse AI models and cause them to produce errors when they run.
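One common way to put a number on inconsistency is inter-annotator agreement. The sketch below computes Cohen's kappa for two hypothetical annotators who labeled the same ten images. The label values and the `cohens_kappa` helper are assumptions made for illustration.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items where both labels match.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, estimated from each annotator's label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[k] * freq_b[k] for k in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)

annotator_1 = ["car", "car", "truck", "car", "bus", "car", "truck", "car", "bus", "car"]
annotator_2 = ["car", "truck", "truck", "car", "bus", "car", "car", "car", "bus", "car"]

kappa = cohens_kappa(annotator_1, annotator_2)
print(f"kappa = {kappa:.2f}")
```

A kappa close to 1 means the annotators agree far more often than chance would predict. A low value is a signal that the guidelines are ambiguous or being applied unevenly.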

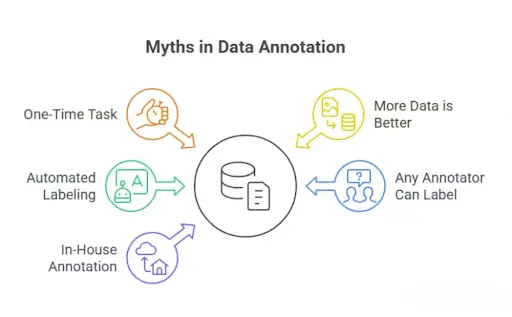

Common Data Annotation Myths and Mistakes (Source)

To train reliable AI systems, you need a data annotation specialist who understands data, domain context, and how AI models behave. In this article, we’ll explore the role of a data annotation specialist and compare expert-done annotations with crowdsourced annotations. Let’s get started!

A data annotation specialist does more than tag data or draw boundaries around objects. Their primary role is to translate the real world into a form that AI models can understand and learn from.

You can think of a data annotation specialist as a language teacher for machines. Just as a teacher explains meaning, nuance, and context to students, the specialist guides an AI model by defining what matters in the data.

Major tasks include labeling text, images, audio, and video. Each label is applied with a clear understanding of how the model will use that information during training and evaluation.

Unlike generic or crowdsourced workers, a data annotation specialist considers every aspect of an annotation, including intent, boundaries, and consistency across the dataset.

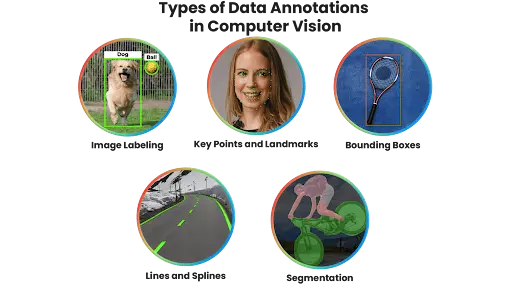

Such attention to detail is especially useful in computer vision models, where the model is taught to make sense of visual information. For instance, decisions about where an object starts and ends or how an action is defined often require careful judgment.

A data annotation specialist also follows well-defined guidelines and applies them uniformly while recognizing edge cases that need special handling. For example, when labeling cars in images for computer vision models, the annotator follows strict rules but adjusts for edge cases like partially hidden or reflected vehicles.

Data Annotation Types Used in Computer Vision (Source)
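The car-labeling example can be sketched as a bounding-box record that carries explicit edge-case flags, plus a small guideline check. Everything here is hypothetical: the `BoxAnnotation` fields, the `check_annotation` helper, and the rule that reflections get their own label are assumptions chosen to illustrate the idea, not rules from a real project.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class BoxAnnotation:
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    occluded: bool = False       # vehicle is partially hidden
    is_reflection: bool = False  # vehicle seen in a mirror or window

def check_annotation(box, image_width, image_height):
    """Return a list of guideline violations (empty if the box passes)."""
    problems = []
    if box.x_min >= box.x_max or box.y_min >= box.y_max:
        problems.append("box has zero or negative area")
    if (box.x_min < 0 or box.y_min < 0
            or box.x_max > image_width or box.y_max > image_height):
        problems.append("box extends outside the image")
    # Hypothetical guideline: a reflected car is not a real car on the road.
    if box.is_reflection and box.label == "car":
        problems.append("reflected vehicle must use label 'car_reflection'")
    return problems

boxes = [
    BoxAnnotation("car", 10, 20, 200, 150),
    BoxAnnotation("car", 300, 40, 420, 160, occluded=True),
    BoxAnnotation("car", 500, 60, 640, 180, is_reflection=True),
]

for box in boxes:
    print(box.label, check_annotation(box, image_width=640, image_height=480))
```

Recording occlusion and reflection as explicit fields keeps the edge-case decision visible in the dataset instead of leaving it implicit in how each annotator happened to draw the box.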

Data annotation specialists also bring domain awareness. Knowledge of a specific domain, such as healthcare or finance, directly shapes how an AI model learns, handles new data, and performs in the real world.

Next, let’s take a look at why data annotation is more complex than typical freelance work.

Freelance platforms like Upwork and Fiverr are popular because they offer speed, flexibility, and access to a large global workforce. While these platforms work well for many general freelance tasks, AI annotation requires a more specialized set of skills.

These platforms are designed to match tasks with availability rather than assess whether annotators understand machine learning objectives, domain-specific guidelines, or the long-term impact of labeling decisions on dataset quality. As a result, annotation work is often treated as isolated tasks instead of a coordinated process within a larger AI training workflow.

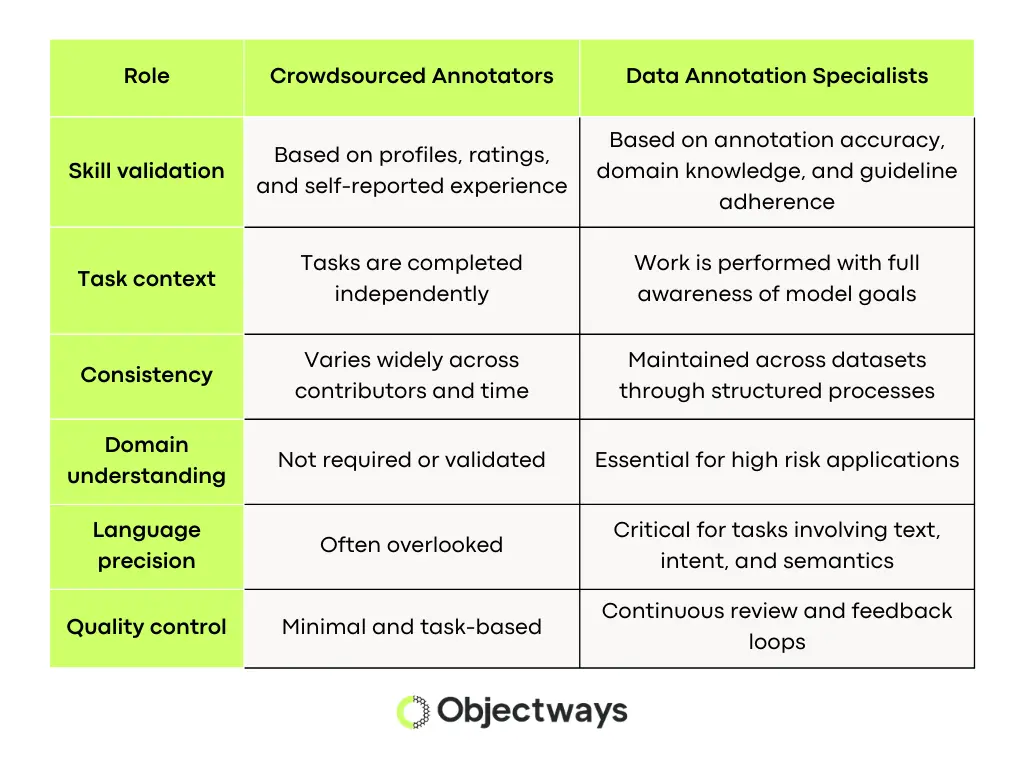

Crowdsourced Annotators Vs. Data Annotation Specialists

Because of this, finding skilled and experienced data annotation specialists through freelance platforms can be challenging. Annotation work feeds directly into complex AI training pipelines, where consistency, contextual understanding, and decision quality have a direct impact on how the final AI model performs.

Roles such as AI annotator, data labeler, and data annotation specialist require skills that enhance data quality and model performance. Here are some key considerations that separate an expert data annotator from a general worker:

Language-specific applications are a good example of AI systems that need expert-driven data annotation. AI systems rely heavily on the quality of the data they are trained on.

When language models are trained on data that reflects real cultural, linguistic, and professional contexts, they understand the world more accurately. This helps the AI systems interpret real-world inputs with greater reliability and relevance.

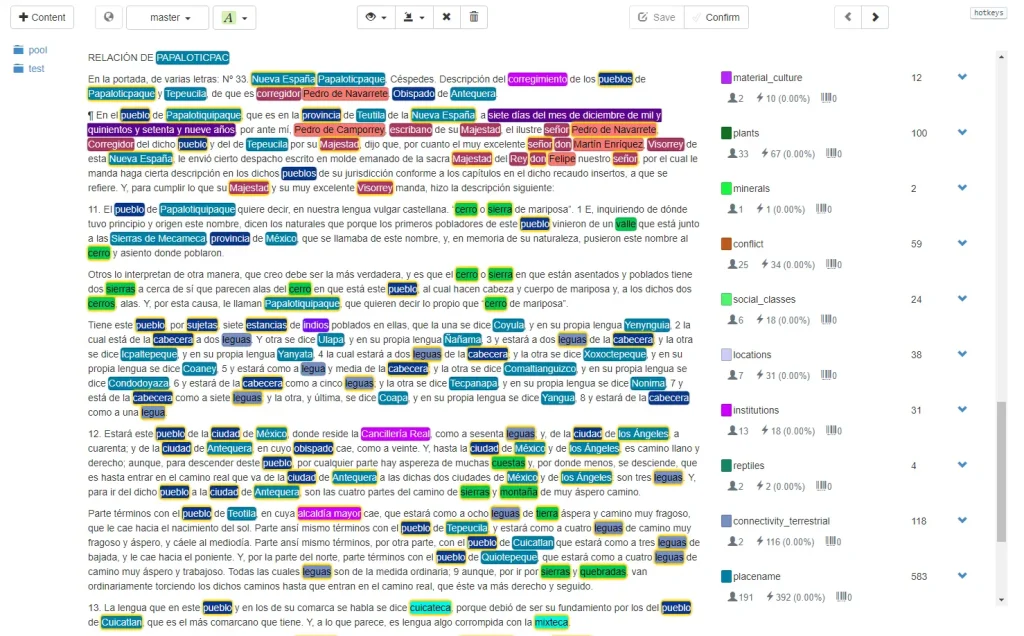

An AI data annotator with good language fluency can provide annotations with deeper meaning. They can consider factors like dialects, regional phrases, and tone, all of which can completely change the meaning and context behind some labels.

An Example Of Detailed Text Annotation With Linguistic Context (Source)

For example, in legal documents, a small difference in wording can change the interpretation of a clause. Similarly, in medical data, mislabeling a symptom or condition can affect how a model prioritizes risk or treatment outcomes.

A data annotation expert understands how these details influence model behavior. They apply labels with awareness of how the AI will interpret patterns during training. Without fluency in language or domain familiarity, annotators may rely on surface-level cues or literal definitions, which leads to inconsistent or misleading labels.

That is why data annotation specialists with language and domain-specific skills are a better option: they help ensure that datasets reflect real-world usage rather than simplified assumptions. This results in AI systems that perform more reliably when exposed to diverse users and complex scenarios.

At Objectways, we make it easy for organizations to build high-quality AI training data with confidence. Our work is powered by an in-house team of more than 2,200 trained data annotation specialists, ensuring consistency, accountability, and deep alignment with each project’s goals.

We integrate our in-house data labelers and AI data annotation specialists directly into your annotation workflows. Every project starts with people who understand the data, the domain, and how annotation decisions affect AI model performance.

Rather than outsourcing tasks to general workers, Objectways assigns datasets to dedicated data annotation specialists with the relevant domain expertise and language skills. This controlled, in-house approach helps maintain accuracy, consistency, and long-term dataset quality.

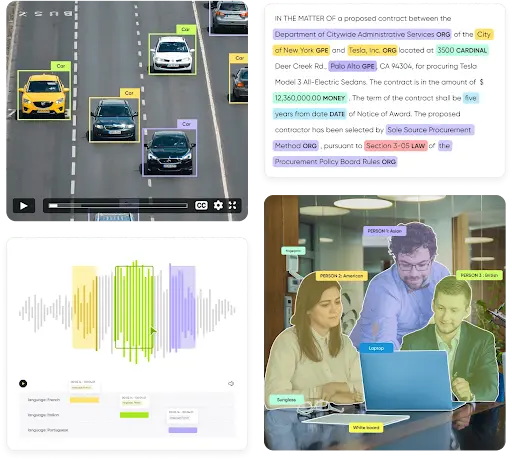

Examples of Image, Text, Audio, and Video Annotation (Source)

For instance, multilingual annotation is a core capability at Objectways. Our annotators are fluent in target languages and familiar with regional context, allowing meaning, intent, and tone to remain intact across multilingual datasets.

Quality assurance is built into every stage of our process. Dedicated reviewers enforce clear annotation guidelines, monitor output continuously, and provide structured feedback.
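As a rough illustration of how a review step like this could work in general, the sketch below compares an annotator's submitted labels against reviewer-verified labels and lists the items that need feedback. It is not a description of Objectways' internal tooling. The `review_batch` helper, the item IDs, the labels, and the 95% threshold are all assumptions.

```python
def review_batch(submitted, verified, min_accuracy=0.95):
    """Compare submitted labels with reviewer-verified labels for shared item IDs."""
    shared = sorted(submitted.keys() & verified.keys())
    if not shared:
        raise ValueError("no overlapping item IDs to review")
    # Items where the annotator and the reviewer disagree.
    mismatches = [item for item in shared if submitted[item] != verified[item]]
    accuracy = 1 - len(mismatches) / len(shared)
    return {
        "accuracy": accuracy,
        "passed": accuracy >= min_accuracy,
        "needs_feedback": mismatches,
    }

submitted = {"doc_001": "positive", "doc_002": "neutral",
             "doc_003": "negative", "doc_004": "positive"}
verified = {"doc_001": "positive", "doc_002": "negative",
            "doc_003": "negative", "doc_004": "positive"}

report = review_batch(submitted, verified)
print(report)
```

Returning the mismatched item IDs, not just a score, is what makes structured feedback possible: the reviewer can point to the exact items where the guideline was misapplied.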

To support precision and efficiency, we equip our AI data annotators with task-specific tools designed for accuracy, collaboration, and version control. This enables our specialists to focus on high-quality decisions while maintaining scalable, reliable delivery.

Next, let’s walk through a couple of real-world success stories where leveraging data annotation specialists helped our customers create a meaningful impact with AI.

Objectways has supported medical annotation projects for over five years by working with expert annotators from medical backgrounds.

For one healthcare-focused client, we managed complex medical data where accuracy, consistency, and context were critical. The project required a precise understanding of clinical terminology, diagnostic language, and medical imagery such as scans and reports.

To meet these requirements, we relied on our trained data annotation specialists who were familiar with real clinical workflows and healthcare standards. They labeled the data with a clear understanding of how it would be used in AI-driven healthcare applications, including diagnosis support and medical imaging analysis.

Their domain knowledge helped them recognize subtle patterns, handle edge cases, and apply guidelines consistently across large datasets. The client received high-quality training data that improved model performance, reduced rework, and supported safer, more trustworthy AI deployment in healthcare settings.

Like healthcare, power line inspection is an AI application that requires specialized domain knowledge.

We worked with an Australian power company that used drone imagery to inspect electrical lines and identify early signs of corrosion. The images captured subtle visual details that required engineering expertise to interpret correctly.

Objectways staffed the project with expert electrical engineers who understood the structure and behavior of power lines. They carefully reviewed each image and precisely labeled areas of corrosion. Their ability to distinguish between corrosion, surface dust, and normal wear significantly improved label accuracy across the dataset.

Building reliable AI systems requires more than large datasets and powerful models. It depends on the quality, consistency, and context embedded in the data used to train them. Expert data annotators bring structure, judgment, and domain understanding into every dataset. Their work helps AI systems perform predictably and improve over time.

As AI projects move from experimentation to real-world deployment, annotation quality becomes a long-term investment rather than a one-time task. Teams that prioritize skilled data annotation specialists lay a stronger foundation for scalable and trustworthy AI solutions.

Want to apply the same level of expertise to your data? Objectways supports medical, multilingual, and domain-specific annotation through trained data labelers and AI data annotators. Reach out to explore how our workflows can support your AI projects.