The idea of self-driving cars has been around for decades. Back in the 1980s, researchers at Carnegie Mellon University began experimenting with early autonomous vehicles like Navlab and ALVINN, paving the way for today’s smarter and safer self-driving cars.

Autonomous vehicles rely less on mechanical control and more on intelligent systems. They use computer vision and advanced sensors to collect data, analyze their surroundings, and build a real-time 3D model of the environment. This process of reconstructing reality helps the vehicle understand where it is, what’s around it, and how to move safely.

Key technologies like sensor fusion, 3D reconstruction, and scene mapping work together to give the autonomous vehicle situational awareness. This is essential for tasks like detecting obstacles, planning routes, and interacting with other vehicles or pedestrians on the road.

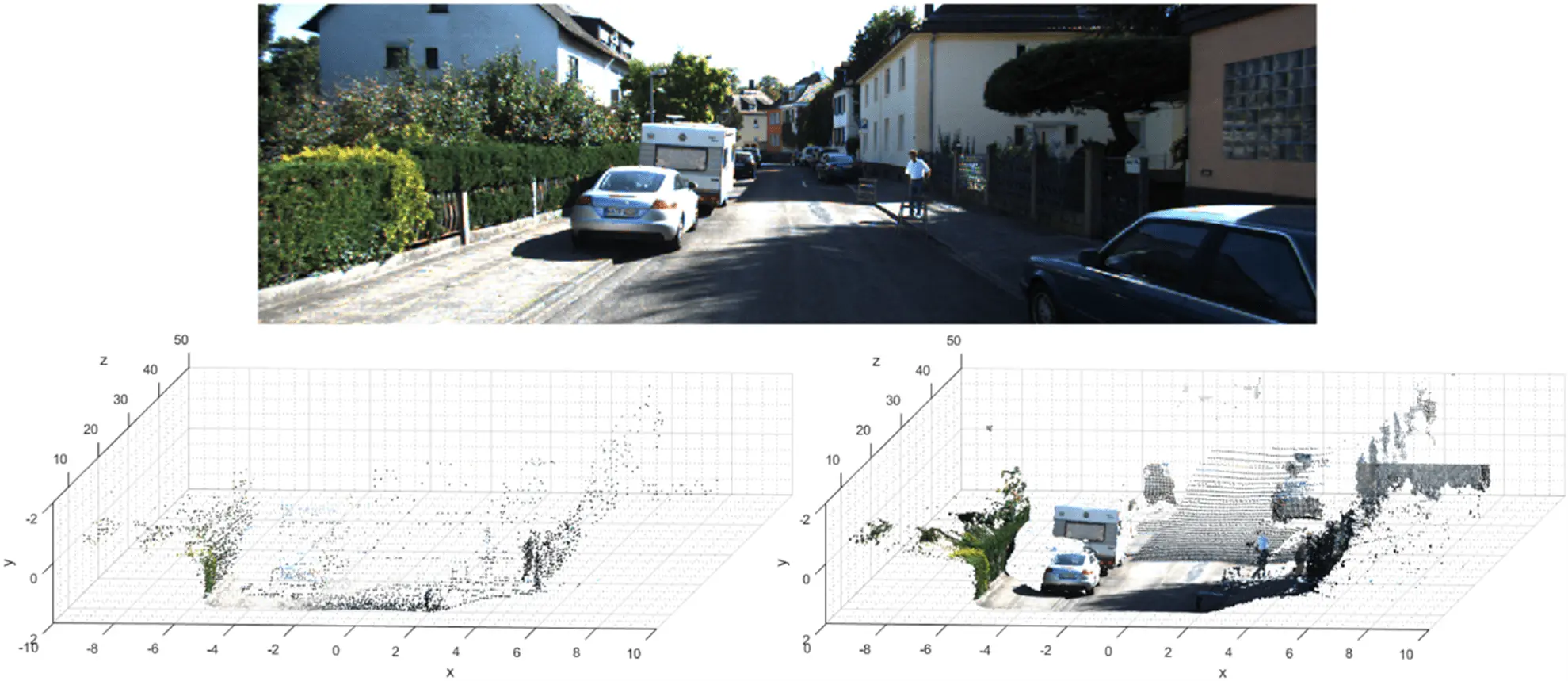

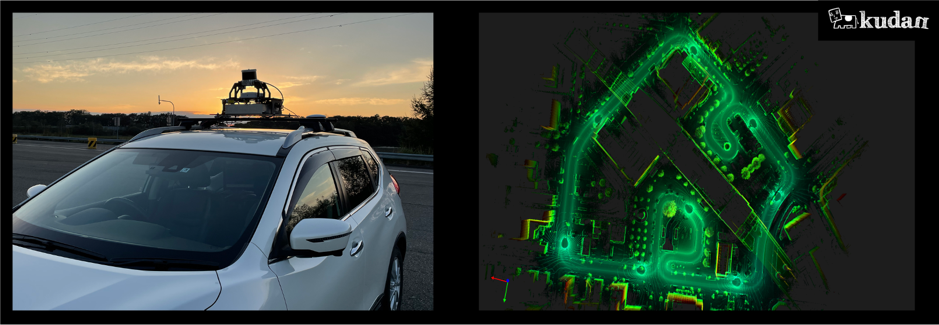

Reconstructing reality through 3D scene mapping is essential in autonomous driving. (Source)

Thanks to technological advances, the self-driving vehicle industry is growing steadily. In fact, the autonomous driving industry is projected to generate around $400 billion in revenue by 2035. In this article, we’ll take a closer look at how these technologies work and how they’re already being used in autonomous navigation systems. Let’s get started!

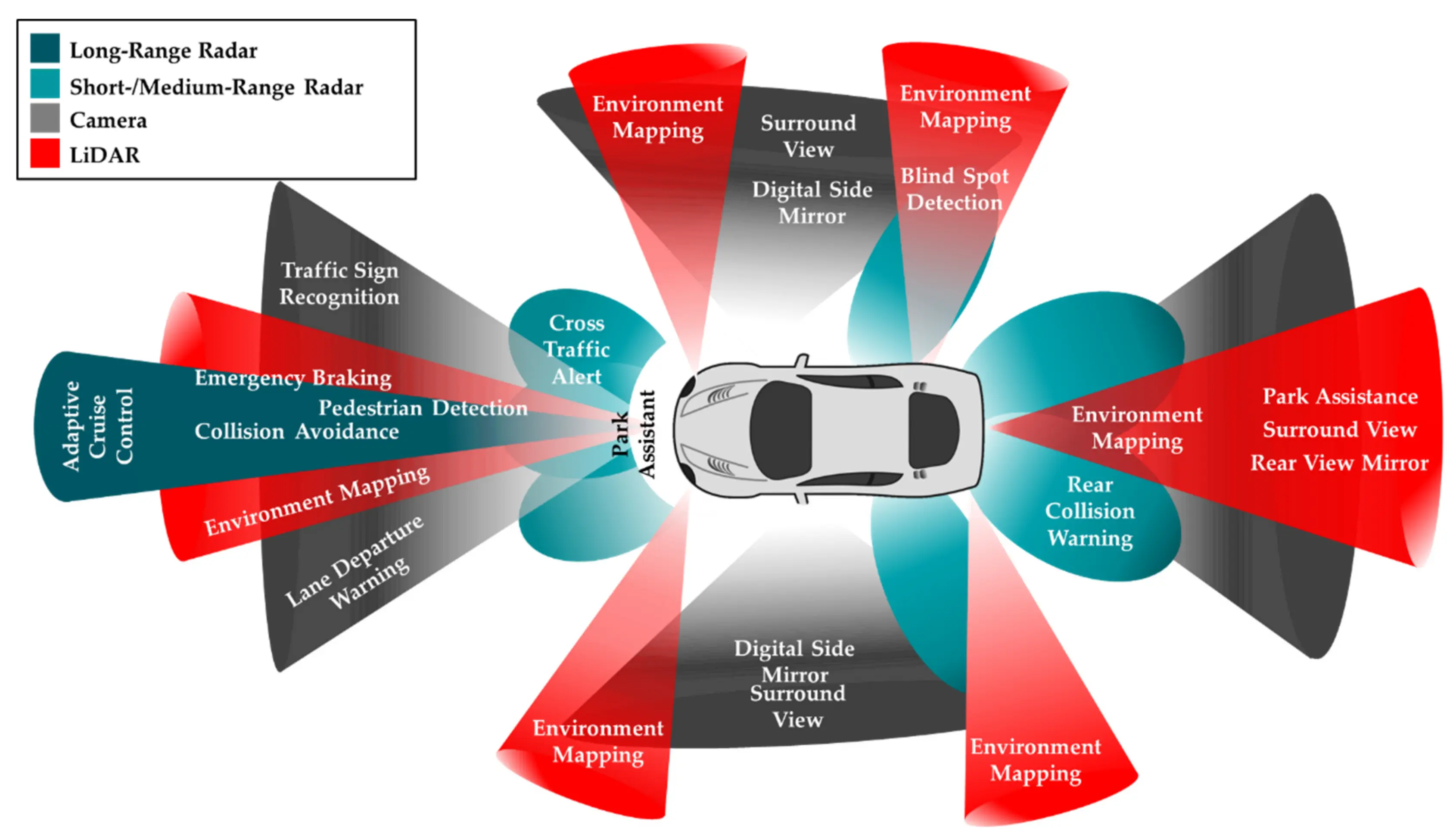

To maneuver safely, a self-driving car needs to detect the objects around it and understand their positions and movements. This depends on two key processes: scene mapping and sensor fusion.

Scene mapping involves building a detailed, real-time model of the vehicle’s environment. It gathers high-quality data from various sensors to create a spatial map that shows where roads, obstacles, pedestrians, and other vehicles are located. Meanwhile, sensor fusion is used to combine information from different sensors, such as cameras, radar, and GPS, to get a clearer and more accurate view of what’s happening around the vehicle.
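To make the idea of sensor fusion a little more concrete, here is a minimal sketch of one common textbook approach: inverse-variance weighting, where the more certain sensor gets more say in the final estimate. The function name `fuse_estimates` and the camera and radar readings are made up for illustration; production systems typically use richer methods such as Kalman filters.

```python
def fuse_estimates(estimates):
    """Fuse independent (value, variance) measurements by inverse-variance weighting."""
    if not estimates:
        raise ValueError("need at least one estimate")
    # A smaller variance (more certainty) produces a larger weight.
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    fused_variance = 1.0 / total  # never larger than the best single sensor's variance
    return fused_value, fused_variance


# Hypothetical readings of the distance to the same obstacle, in meters.
camera = (20.0, 4.0)  # (distance, variance): camera depth is the noisier of the two
radar = (22.0, 1.0)   # radar range is assumed to be more precise here

distance, variance = fuse_estimates([camera, radar])
print(f"fused distance: {distance:.2f} m, variance: {variance:.2f}")
```

The fused distance lands closer to the radar reading because radar was assumed to be less noisy, and the fused variance is lower than either sensor's on its own, which is the "clearer and more accurate view" that fusion is after.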

Each sensor fills in a piece of the puzzle of autonomous navigation. Here’s a quick look at some of these sensors:

Together, the data collected from these sensors create a reliable picture of the car’s surroundings. Machine learning and deep learning enhance this by analyzing the sensor data to detect patterns and improve decision-making.

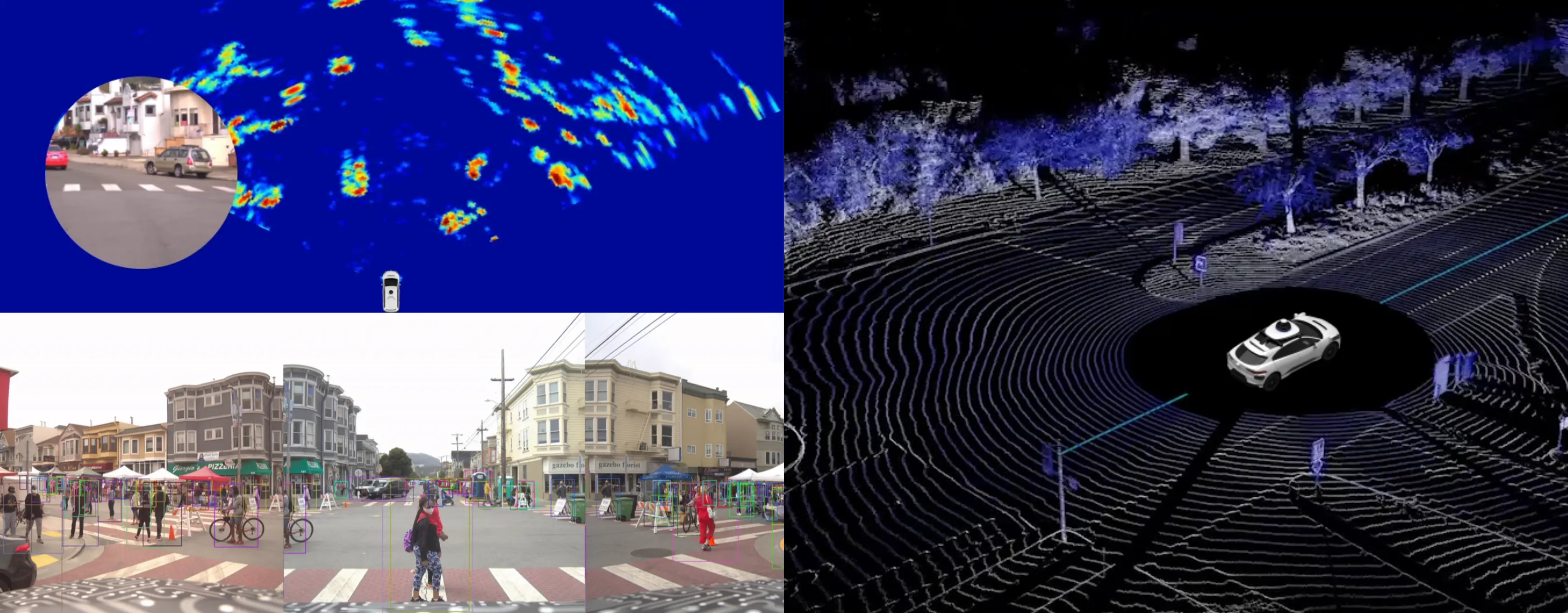

Understanding How Autonomous Vehicles Use Cutting-Edge Tech (Source)

Having a real-time model of the road is crucial for autonomous vehicles. Real-time situational awareness makes it easier for the vehicle to understand spatial relationships, make safe lane changes, brake on time, and take smooth turns. It enables the vehicle to confidently and accurately navigate complex city streets, highways, and unpredictable traffic conditions.

Waymo’s 6th-generation driver system is a good example of autonomous navigation in action. It uses 13 cameras to spot visual details like road signs, traffic lights, and lane lines. Four LiDAR units build 3D maps by measuring distance, while six radar sensors track the speed and movement of objects, even in fog or heavy rain. These sensors have overlapping fields of view, so if one fails or is obstructed, the others can still maintain the vehicle’s awareness of its surroundings.

Sensor fusion uses data from different sensors to better understand the surroundings. (Source)

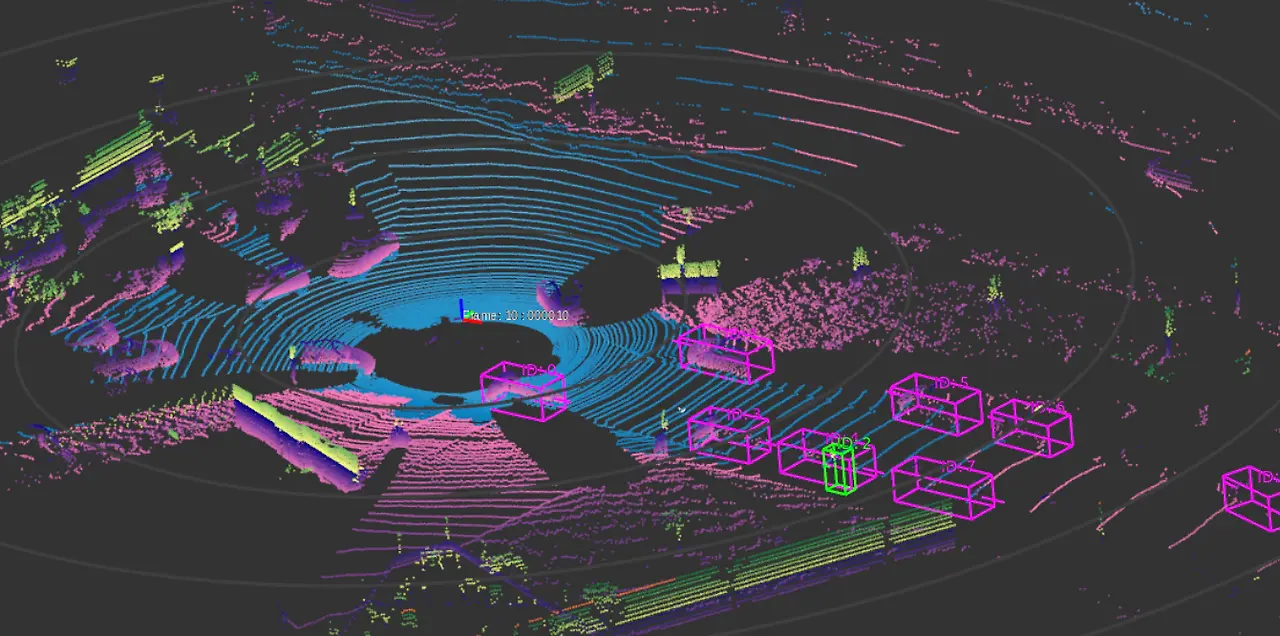

A 3D point cloud is a set of data points plotted in three-dimensional space. These points capture the shape and position of real-world objects like roads, vehicles, buildings, and trees with high detail.
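As a rough illustration, a point cloud can be represented as nothing more than a list of (x, y, z) coordinates. The sketch below uses three made-up points, assumed to be in meters with the sensor at the origin, and computes two simple properties: the bounding box that encloses the cloud and the range to the closest point. The helper names are hypothetical.

```python
import math

# A tiny, made-up point cloud: each point is (x, y, z) in meters,
# measured relative to a sensor assumed to sit at the origin.
points = [(1.0, 2.0, 0.0), (4.0, -1.0, 0.5), (2.0, 2.0, 2.0)]


def bounding_box(cloud):
    """Return the (min_corner, max_corner) of the axis-aligned box enclosing the cloud."""
    xs, ys, zs = zip(*cloud)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def nearest_range(cloud):
    """Return the straight-line distance from the sensor to the closest point."""
    return min(math.sqrt(x * x + y * y + z * z) for x, y, z in cloud)


low, high = bounding_box(points)
print("bounding box:", low, high)
print(f"nearest point: {nearest_range(points):.2f} m")
```

Real LiDAR scans contain hundreds of thousands of points per sweep and usually carry extra fields such as intensity, so array libraries are normally used instead of plain lists.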

Technologies such as LiDAR (laser-based distance measurement), photogrammetry (3D mapping from images), and depth-sensing cameras (per-pixel depth capture) are used to generate these point clouds. Together, these tools provide the precise spatial information that autonomous systems need to work efficiently.

However, raw point cloud data alone isn’t enough. It needs to be labeled so that the AI models integrated into autonomous vehicles can be trained to understand what each point represents. Data labeling is the process of assigning a category or class to a group of points in a 3D point cloud, indicating what type of object or surface those points represent.
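One simple way to picture labeling is with 3D bounding boxes: an annotator draws a box around an object, and every point inside the box inherits that object's class. The sketch below assumes axis-aligned boxes and uses made-up coordinates; `label_points` is a hypothetical helper, not a function from any particular annotation tool.

```python
def label_points(cloud, boxes, default="unlabeled"):
    """Assign each point the class of the first box that contains it."""
    labels = []
    for point in cloud:
        label = default
        for name, (low, high) in boxes:
            # A point is inside the box if every coordinate is within its bounds.
            if all(lo <= c <= hi for c, lo, hi in zip(point, low, high)):
                label = name
                break
        labels.append(label)
    return labels


# Made-up points (x, y, z) in meters and one annotated box.
cloud = [(5.0, 0.5, 0.8), (5.2, 0.4, 1.5), (12.0, -3.0, 0.1)]
boxes = [("pedestrian", ((4.5, 0.0, 0.0), (5.5, 1.0, 2.0)))]

labels = label_points(cloud, boxes)
for point, label in zip(cloud, labels):
    print(point, "->", label)
```

In practice, boxes are usually rotated to match the object's heading, and some datasets label every point individually (semantic segmentation) rather than using boxes.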

An Example of Labeled LiDAR 3D Point Cloud Data for Autonomous Driving. (Source)

Once labeled, the data can be used to train AI models. These models learn how to recognize objects, estimate their movement, and respond in real time. In self-driving cars, this enables safer autonomous navigation by helping the vehicle detect dynamic elements like pedestrians or cyclists and predict how they might move.
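To give a feel for what "predicting how they might move" means, here is the simplest possible baseline: assume the object keeps moving at the velocity it showed between its last two observations. This constant-velocity model is an illustrative assumption, not how any specific vehicle works; trained models are typically used to capture more realistic behavior. The positions and timings below are invented.

```python
def estimate_velocity(previous, current, dt):
    """Estimate velocity (m/s) from two positions observed dt seconds apart."""
    return tuple((c - p) / dt for p, c in zip(previous, current))


def predict_position(position, velocity, horizon):
    """Predict where the object will be after `horizon` seconds at constant velocity."""
    return tuple(p + v * horizon for p, v in zip(position, velocity))


# A pedestrian seen at two moments, 0.5 s apart (x is ahead, y is to the left, in meters).
previous = (10.0, 2.0)
current = (10.0, 1.5)

velocity = estimate_velocity(previous, current, dt=0.5)
future = predict_position(current, velocity, horizon=1.0)
print("velocity:", velocity)
print("predicted position in 1 s:", future)
```

Here the pedestrian is drifting toward the vehicle's lane at about 1 m/s, so a planner could use the predicted position to decide whether to slow down.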

So far, we’ve explored why a real-time model of the road is essential and how sensor data makes that possible. But how is this model actually created and kept up to date? What’s happening behind the scenes to turn raw inputs into a live, detailed understanding of the world around the vehicle?

To operate safely, many autonomous navigation systems rely on scene reconstruction and real-time mapping to keep up with their surroundings. Scene reconstruction builds a detailed 3D model of the vehicle’s environment.

This includes static objects like road signs and buildings, as well as moving elements like pedestrians and other vehicles. Real-time mapping ensures this model is continuously updated as the vehicle moves. This ongoing update allows the vehicle to stay responsive to its surroundings.
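A small sketch can show what "continuously updated" looks like in code. The example below keeps a 2D occupancy grid as a dictionary that maps each occupied cell to the last frame in which it was seen, and drops cells that have not been observed recently. It assumes the incoming points are already expressed in a fixed map frame, and the cell size, `max_age`, and scan data are all made up for illustration.

```python
import math


def to_cell(point, cell_size=0.5):
    """Convert an (x, y) position in meters to integer grid cell indices."""
    x, y = point
    return (math.floor(x / cell_size), math.floor(y / cell_size))


def update_grid(grid, scan, frame, cell_size=0.5, max_age=2):
    """Mark cells hit by the current scan and forget cells not seen for max_age frames."""
    for point in scan:
        grid[to_cell(point, cell_size)] = frame
    stale = [cell for cell, last_seen in grid.items() if frame - last_seen > max_age]
    for cell in stale:
        del grid[cell]
    return grid


grid = {}
# Frame 0 sees a parked car and a passing cyclist; later frames only see the car.
scans = [
    [(1.2, 0.3), (3.0, 3.0)],
    [(1.2, 0.3)],
    [(1.2, 0.3)],
    [(1.2, 0.3)],
]
for frame, scan in enumerate(scans):
    update_grid(grid, scan, frame)
    print(f"frame {frame}: occupied cells = {sorted(grid)}")
```

The cyclist's cell disappears once it has gone unobserved for more than two frames, while the parked car's cell stays. Real systems generally track occupancy probabilities in 3D rather than simple timestamps, but the update-and-forget loop is the same idea.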

Having just read about 3D point clouds, you might be wondering: if we can train AI models to interpret point cloud data, why do we still need scene reconstruction? That’s a great question, and here’s the difference:

Simply put, scene reconstruction gives the car a detailed map, while AI gives it the ability to read and interpret that map. The two work together to ensure the vehicle can safely and intelligently navigate complex, dynamic environments.

To make scene reconstruction possible, self-driving cars use a process called Simultaneous Localization and Mapping, or SLAM. It combines inputs from LiDAR, cameras, and inertial sensors through sensor fusion. This integrated data helps the vehicle determine its location with high precision and construct high-definition semantic maps. These maps offer centimeter-level accuracy, enabling navigation even in unpredictable conditions.
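The two halves of SLAM can be sketched separately. The example below is not a SLAM implementation: it leaves out the part where the map is used to correct the pose estimate, which is what makes SLAM "simultaneous". It only shows, under simplified 2D assumptions, how a pose might be advanced from speed and yaw rate (localization by dead reckoning) and how a sensor reading might be placed into the map using that pose (mapping). All function names and values are hypothetical.

```python
import math


def advance_pose(pose, speed, yaw_rate, dt):
    """Dead-reckon a 2D pose (x, y, heading) forward by dt seconds."""
    x, y, heading = pose
    x += speed * math.cos(heading) * dt
    y += speed * math.sin(heading) * dt
    return (x, y, heading + yaw_rate * dt)


def to_map_frame(pose, point):
    """Transform a point from the vehicle frame into the fixed map frame."""
    x, y, heading = pose
    px, py = point
    map_x = x + px * math.cos(heading) - py * math.sin(heading)
    map_y = y + px * math.sin(heading) + py * math.cos(heading)
    return (map_x, map_y)


# Start at the map origin facing along +x, then drive straight at 10 m/s for 0.1 s.
pose = advance_pose((0.0, 0.0, 0.0), speed=10.0, yaw_rate=0.0, dt=0.1)

# Suppose the vehicle is now facing along +y and detects an obstacle 2 m straight ahead.
turned = (pose[0], pose[1], math.pi / 2)
obstacle = to_map_frame(turned, (2.0, 0.0))
print("pose:", pose)
print("obstacle in map frame:", tuple(round(c, 3) for c in obstacle))
```

Dead reckoning alone drifts over time, which is why SLAM systems keep matching new LiDAR or camera observations against the map to pull the pose estimate back on track.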

With this real-time spatial awareness, the vehicles can perform several key tasks essential for safe driving:

A real-world example is Whale Dynamic’s autonomous delivery vehicles operating in a city in China. These vehicles use Kudan’s 3D-Lidar SLAM technology along with advanced mapping hardware and software. They generate dense point clouds and centimeter-accurate HD semantic maps. As they navigate complex streets, they continuously analyze their surroundings. This lets them precisely localize their position, track moving objects, and plan safe paths in real time.

Using Real-Time 3D Mapping for Scene Reconstruction. (Source)

Now that we’ve seen how self-driving cars use scene mapping and sensor fusion to understand their surroundings, let’s take a closer look at some trends that are shaping the future of this technology:

While these advancements open the door to safer and smarter autonomous driving, here are some important challenges to consider:

Self-driving cars are reshaping the future of autonomous navigation and how we travel in general. By using a wide variety of sensors and real-time 3D maps, they can safely find their way through busy and unpredictable roads.

This can mean fewer accidents, less traffic, and better driving in different conditions. Looking ahead, these cars will continue to evolve – becoming more responsive, better connected, and ultimately making our roads safer than ever before.

At Objectways, we support this future by providing precise data labeling services that enhance AI models for autonomous driving and many other applications. We also offer custom AI solutions tailored to your business needs.

Ready to accelerate your AI project? Contact us today.