In recent weeks, two humanoid robots have sparked a lot of buzz in the AI community. Xpeng’s IRON was so lifelike in its movements that the company actually cut the robot open onstage to prove there wasn’t a person inside.

IRON’s leg was cut open on stage. (Source)

Around the same time, 1X introduced Neo, a household assistant robot available for preorder, sparking excitement over the idea of having a personal robot that can help with everyday chores. Headlines like these are appearing more often, and there’s a clear pattern behind them: robots are becoming more capable, adaptable, and human-like in how they understand and interact with their surroundings.

Neo Doing Simple Tasks Around the House. (Source)

Hardware is a key driver behind this trend, but data is equally important. AI data for the robotics industry is what teaches robots how to act, how to adjust, and how to learn from mistakes. Robots aren’t simply programmed with a list of instructions. They learn through examples, practice, and feedback, much like we do.

Earlier this month, the Los Angeles Times featured Objectways and the behind-the-scenes work that makes this possible. The article highlighted how our teams record real human demonstrations, annotate every motion, and review thousands of clips to teach robots how to perform tasks like folding towels, packing boxes, or sorting items. Each correction and label ultimately becomes part of the robot’s experience and supports the retraining of its AI models.

In this article, we’ll take a closer look at how visual data is collected and used in robotics training, and how Objectways provides AI data for the robotics industry to help companies build smarter, safer, and more reliable robotic systems.

You can think of a robotic vision system as the part of the robot that sees and interprets the world. It combines camera input, sensor data, and AI models to understand what’s in front of it and how to respond.

Interestingly, you can’t teach a robotic vision system to perform day-to-day tasks solely through programming. It needs to learn from experience. During training, robots practice tasks in real or simulated environments while cameras capture every step.

Each motion is recorded, labeled, and reviewed so the system can learn what worked and what didn’t. Over time, even simple actions like folding laundry or pouring coffee become lessons. Step by step, robots build their understanding of how to succeed and, just as importantly, how to avoid repeating mistakes.

Computer vision in robotics plays a major role by helping robots see and understand their surroundings. But seeing an object is only the first step. To interact with the world the way humans do, robots need experience. This learning happens through a continuous training loop built on real-world data.

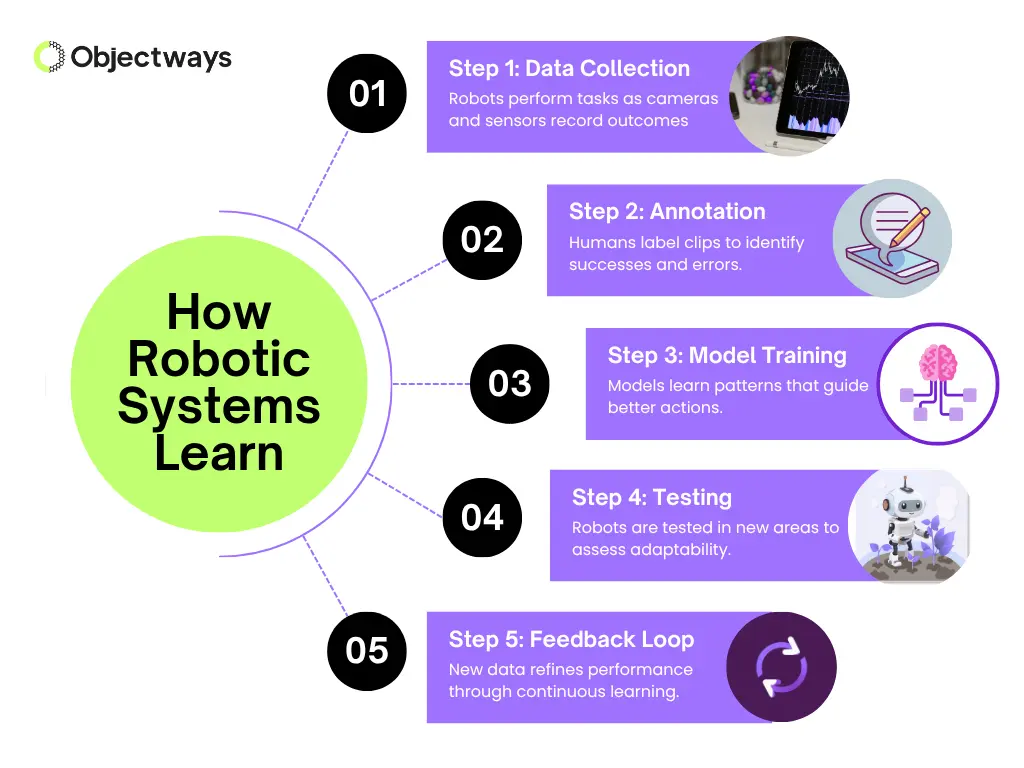

Here is how that process usually works:

How Robotic Systems Learn

You might be wondering what kinds of AI models make this type of learning possible. In robotics, there is a growing focus on multimodal foundation models that can learn from vision, language, and physical motion data together. These models help robots see and recognize what is in front of them, understand instructions, and decide how to act.

Here are some models and approaches gaining attention:

Did you know that, according to some studies, robot cells fail or stop working about 12% of the time?

For example, at a manufacturing site using a Universal Robots UR20 for bin-picking and CNC tending, the system suddenly stopped after entering protective mode. Engineers later found that the problem wasn’t the robot itself but a dented bin that was causing misfeeds. They fixed the issue remotely and got the system back up and running.

Such scenarios are exactly why regular AI evaluation is essential. Once a vision-guided robotic system is trained, it’s important to test how well it performs in changing real-world conditions.

Human annotators and evaluators can review short video clips of robots performing tasks like folding laundry or pouring coffee. They watch how the robot moves, how long it takes, and how it responds to its environment.

They focus on determining whether the robot’s actions match what it was supposed to do. If something goes wrong (say, the robot drops an item or pauses in protective mode), they note what happened and why. This feedback is then used to retrain or fine-tune the model, creating a continuous improvement cycle that helps the robot perform better over time.

For instance, if a robot is supposed to fold a shirt, evaluators check whether it folded exactly one item, folded it correctly, and placed it in the right spot. When errors do happen, they aren’t simply marked as failures.

Instead, the exact reason is recorded: whether the bin was slightly dented, the camera misread an angle, or a person accidentally got in the way. This level of detailed labeling means engineers can pinpoint the real problem and make targeted improvements rather than guess.
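To make the shirt-folding checklist concrete, here is a minimal sketch of how a single evaluation might be stored. The `FoldEvaluation` class, its fields, and the `FailureCause` values are hypothetical names drawn from the examples in the text, not part of any specific annotation tool. The key rule it illustrates is that a failed clip must carry a specific cause rather than a bare pass/fail flag.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class FailureCause(Enum):
    # Hypothetical categories, based on the failure examples described above
    DENTED_BIN = "dented_bin"
    CAMERA_MISREAD_ANGLE = "camera_misread_angle"
    PERSON_IN_THE_WAY = "person_in_the_way"


@dataclass
class FoldEvaluation:
    clip_id: str
    items_folded: int
    folded_correctly: bool
    placed_correctly: bool
    cause: Optional[FailureCause] = None  # filled in only when the clip fails

    def passed(self) -> bool:
        # Pass only if exactly one item was folded, folded correctly, and placed in the right spot
        return self.items_folded == 1 and self.folded_correctly and self.placed_correctly

    def validate(self) -> None:
        # A failure must record why it happened, not just that it happened
        if not self.passed() and self.cause is None:
            raise ValueError(f"{self.clip_id}: failed clip has no recorded cause")


good = FoldEvaluation("clip-001", items_folded=1, folded_correctly=True, placed_correctly=True)
bad = FoldEvaluation("clip-002", items_folded=1, folded_correctly=False,
                     placed_correctly=True, cause=FailureCause.CAMERA_MISREAD_ANGLE)
good.validate()
bad.validate()
print(good.passed(), bad.passed())  # True False
```

Raising an error on an unexplained failure is one way to enforce the “never just mark it as failed” rule at data-entry time instead of discovering missing causes later during retraining.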

After evaluation, the annotated clips are fed back into the training pipeline so the AI model can learn from them. Patterns in the labeled data make it possible for the system to correct recurring issues, like grip errors, timing delays, or sensor misreads, by updating the model’s parameters and improving its behavior across many situations.

At the same time, examples of correct actions reinforce the behaviors we want the robot to repeat. This continuous feedback loop steadily improves the model, allowing the robot to perform more reliably in real-world environments.
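The two halves of this feedback loop (spotting recurring failure patterns and separating good examples from bad ones) can be sketched in a few lines. This is an illustrative sketch only: the `(clip_id, outcome, cause)` label format, the cause names, and the `min_count` threshold are assumptions, and real pipelines feed these groups into model-specific training code that is not shown here.

```python
from collections import Counter
from typing import List, Optional, Tuple

# Hypothetical evaluation output: (clip_id, outcome, cause); cause is None on success
Label = Tuple[str, str, Optional[str]]

labels: List[Label] = [
    ("c1", "fail", "grip_error"),
    ("c2", "success", None),
    ("c3", "fail", "timing_delay"),
    ("c4", "fail", "grip_error"),
    ("c5", "fail", "sensor_misread"),
    ("c6", "success", None),
]


def recurring_issues(labels: List[Label], min_count: int = 2) -> List[Tuple[str, int]]:
    """Return failure causes seen at least min_count times, most frequent first."""
    counts = Counter(cause for _, outcome, cause in labels if outcome == "fail")
    return [(cause, n) for cause, n in counts.most_common() if n >= min_count]


def split_for_retraining(labels: List[Label]) -> Tuple[List[str], List[str]]:
    """Split clip IDs into successes (to reinforce) and failures (to correct)."""
    successes = [cid for cid, outcome, _ in labels if outcome == "success"]
    failures = [cid for cid, outcome, _ in labels if outcome == "fail"]
    return successes, failures


print(recurring_issues(labels))      # [('grip_error', 2)]
print(split_for_retraining(labels))  # (['c2', 'c6'], ['c1', 'c3', 'c4', 'c5'])
```

Counting causes across many clips is what turns individual labels into a pattern: a grip error that shows up repeatedly points to a systematic problem worth targeting, while a one-off sensor misread may not be.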

Now that we have a better understanding of the impact of AI data in the robotics industry, let’s look at some real-world applications.

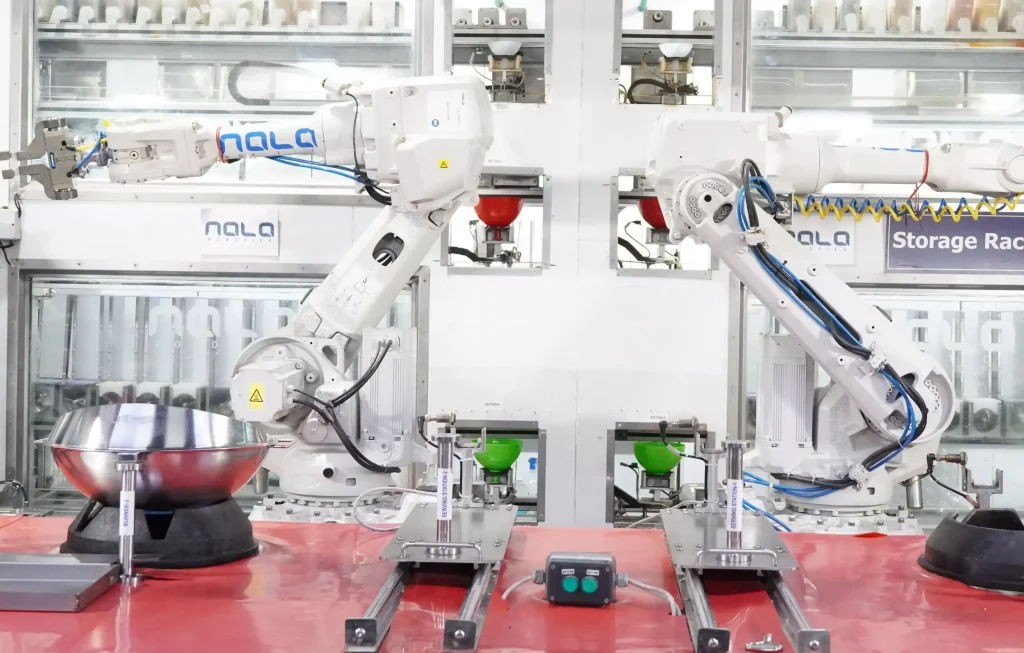

Like 1X with Neo, many companies are developing robots that can perform everyday tasks at home, including cooking. Preparing a meal may feel simple for humans, but for robots it requires precise coordination: chopping vegetables, stirring sauces, adjusting heat, and plating consistently. Each step has to be executed with the right accuracy and timing.

A good example of this is Nala Chef, a fully automated multi-cuisine robotic kitchen designed to cook complete meals with precision. Nala Chef uses machine learning, computer vision, and continuous sensor monitoring to execute recipes and adjust to variations in ingredients and conditions.

Nala Chef: An Automated Robotic Kitchen. (Source)

The system tracks more than a thousand parameters in real time and logs each action it takes, including ingredient usage and cooking timing. Its built-in TasteBot compares taste profiles to sensor readings and can adjust seasoning or recipe execution accordingly. Over time, this feedback enables the system to produce meals with more consistent flavor and texture and to better match individual preferences.

The same idea applies in warehouses, where every second counts. Packing a box or placing a label might seem simple, but robots must do it consistently to keep orders moving. By reviewing short video clips, AI teams can quickly spot issues, like crooked labels or boxes that aren’t sealed properly, and adjust the system before small errors turn into bigger ones.
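As a rough illustration of that kind of clip review, the sketch below flags packing clips with a crooked label or an unsealed box. Everything here is hypothetical: the `PackClip` fields assume that a label tilt angle and a seal status have already been measured or annotated for each clip, and the 5-degree tolerance is an arbitrary example value, not an industry standard.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PackClip:
    clip_id: str
    label_angle_deg: float  # hypothetical: measured tilt of the shipping label
    box_sealed: bool        # hypothetical: reviewer's judgment of the seal


MAX_LABEL_TILT_DEG = 5.0  # assumed tolerance for this example only


def review(clips: List[PackClip]) -> List[Tuple[str, List[str]]]:
    """Return (clip_id, issues) for every clip with at least one problem."""
    flagged = []
    for clip in clips:
        issues = []
        if abs(clip.label_angle_deg) > MAX_LABEL_TILT_DEG:
            issues.append("crooked_label")
        if not clip.box_sealed:
            issues.append("unsealed_box")
        if issues:
            flagged.append((clip.clip_id, issues))
    return flagged


clips = [PackClip("p1", 1.5, True), PackClip("p2", -8.0, True), PackClip("p3", 12.0, False)]
print(review(clips))
# [('p2', ['crooked_label']), ('p3', ['crooked_label', 'unsealed_box'])]
```

Keeping each issue as a separate tag, instead of a single “bad clip” flag, lets a team see whether crooked labels or unsealed boxes are the more frequent problem before deciding what to adjust.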

For example, Amazon’s warehouse robots continuously collect data every time they pick up, move, or place an item. This interaction data is used to refine the AI models that control them, especially in cases where a task doesn’t go as planned.

A Warehouse Robot Picking Up a Package. (Source)

When a robot struggles to grip an unusual package or misplaces an item, that failure case is analyzed and incorporated into future training. Over time, the system becomes better at handling different shapes, weights, and materials, improving both accuracy and reliability across thousands of real-world scenarios.

If you are building a robotics project or developing the next breakthrough in automation and need AI data for the robotics industry, you are in the right place.

At Objectways, we make it easier for robotics companies to create smarter, safer, and more reliable robotic systems. We handle the data evaluation, data annotation, and quality checks that give robots the examples they need to learn. With over 2,000 specialists, including a large team focused specifically on robotics and sensor data, we can support projects of any size.

Our work often begins with real human demonstration data. In one project, our team recorded and prepared 200 towel-folding demonstrations for a U.S. robotics client training humanoid robots to perform everyday tasks.

Each recording captured subtle movements such as how hands grip fabric, adjust folds, and place items. We then reviewed and filtered the clips, removing those with small inconsistencies so only clear and consistent examples were used for training.
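One simple way to picture this filtering step is sketched below. The criteria are assumptions for illustration, not the exact checks used in the project: a demonstration is dropped if a reviewer flagged it, or if its duration strays more than 30% from the median, on the idea that an unusually fast or slow fold often signals an inconsistent take.

```python
from statistics import median
from typing import List, Tuple

# Hypothetical demo metadata: (clip_id, duration_seconds, reviewer_flagged)
Demo = Tuple[str, float, bool]

demos: List[Demo] = [
    ("d1", 21.0, False),
    ("d2", 19.5, False),
    ("d3", 44.0, False),  # unusually slow fold
    ("d4", 20.2, True),   # reviewer spotted an inconsistent grip
    ("d5", 22.1, False),
]


def filter_demos(demos: List[Demo], tolerance: float = 0.3) -> List[str]:
    """Keep unflagged demos whose duration is within +/- tolerance of the median."""
    mid = median([duration for _, duration, _ in demos])
    return [
        cid for cid, duration, flagged in demos
        if not flagged and abs(duration - mid) <= tolerance * mid
    ]


print(filter_demos(demos))  # ['d1', 'd2', 'd5']
```

The median is used instead of the mean so that a single very slow take (like `d3`) does not shift the reference point for every other clip.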

Once the data is collected, our annotation teams label each step of the action. This includes identifying the objects involved, tracking hand and arm movement, marking key phases in the task, and noting errors when they occur.

We also categorize the cause of each issue, for example whether it resulted from human interference, sensor placement, or motion timing. These detailed annotations give robotics teams the clarity needed to refine and retrain their models more effectively.
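To show how these layers of annotation (objects, hand tracking, task phases, and categorized errors) might fit together in one record, here is a hypothetical schema. The class names, field names, phase names, and cause strings are illustrative assumptions rather than a fixed Objectways or client format; the three cause categories come from the text above.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Tuple

# Cause categories named in the text; the exact string values are assumptions
ALLOWED_CAUSES = {"human_interference", "sensor_placement", "motion_timing"}


@dataclass
class Phase:
    name: str         # hypothetical phase names, e.g. "grip", "fold", "place"
    start_frame: int
    end_frame: int


@dataclass
class ErrorNote:
    frame: int
    description: str
    cause: str

    def __post_init__(self) -> None:
        # Reject causes outside the agreed taxonomy so labels stay consistent
        if self.cause not in ALLOWED_CAUSES:
            raise ValueError(f"unknown cause: {self.cause!r}")


@dataclass
class ClipAnnotation:
    clip_id: str
    objects: List[str]
    # (frame, x, y) positions of a tracked hand keypoint, in pixels
    hand_track: List[Tuple[int, float, float]] = field(default_factory=list)
    phases: List[Phase] = field(default_factory=list)
    errors: List[ErrorNote] = field(default_factory=list)

    def to_json(self) -> str:
        # asdict recurses into nested dataclasses; tuples serialize as JSON arrays
        return json.dumps(asdict(self))


ann = ClipAnnotation(
    clip_id="towel-0042",
    objects=["towel", "table"],
    hand_track=[(0, 312.0, 220.5), (1, 315.2, 221.0)],
    phases=[Phase("grip", 0, 45), Phase("fold", 46, 180), Phase("place", 181, 240)],
    errors=[ErrorNote(120, "fold edge misaligned", "motion_timing")],
)
print(ann.to_json())
```

Validating the cause against a fixed set when each note is created keeps annotators from inventing near-duplicate labels (for example “timing” vs. “motion_timing”), which would otherwise split one recurring issue into several smaller ones.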

We work within the tools and platforms your team already uses. If your data pipeline runs in Encord, we join your project directly. If you have your own internal annotation or evaluation environment, we adapt to that as well. And if you’re starting from scratch, we can set up the workspace and configure the full pipeline for you.

Once the annotation is complete, the data can be exported in your preferred format or delivered by our team, ready for model training. This approach keeps you fully in control of how data moves through your development process. We understand that the AI space is broad and that every team approaches problems differently, so we focus on being flexible and easy to work with rather than forcing a single workflow.

Just like babies, robots don’t start out knowing how to move, hold, or manipulate objects. Every steady pour, precise fold, or neatly packed box comes from repeated examples, feedback, and gradual improvement. Their abilities are learned, not preprogrammed.

As automation advances, robots are becoming more adaptable and reliable because of this continuous learning process. High-quality AI data for the robotics industry is what enables these systems to train, improve, and perform safely in the real world.

Want help building the data behind your robotics project? We’d love to talk.