Why Computer Vision Makes a Great Example

Data labeling automation is especially useful for annotating images and videos when training models for computer vision tasks such as segmentation. Computer vision is a field of AI that enables machines to interpret and understand visual information from the world, like images and videos, much like humans do. A popular example of automation in this area is Grounding SAM, an AI model that can automatically suggest object outlines based on a text prompt, helping to speed up the annotation process.

In this article, we’ll explore how human-in-the-loop annotation, pre-labeling, and autotagging can improve the speed and accuracy of AI projects. We’ll also take a closer look at image data labeling as a key example, using computer vision annotation tools like Grounding SAM to illustrate these techniques.

The Role of Humans-in-the-Loop in Data Labeling

Before we dive into the data labeling methods used to automate this traditionally manual process, let’s take a moment to understand why humans are still a crucial part of it.

As annotation projects scale, AI development teams are turning to automation to meet growing demands. However, this automation can only take things so far; humans still play a key role in the annotation workflow.

Consider quality control in a factory. As products move on a conveyor belt, automated systems can ensure their quality, but humans still need to step in occasionally for any complicated final checks before packaging and shipping. That’s exactly what the human-in-the-loop approach brings to data labeling.

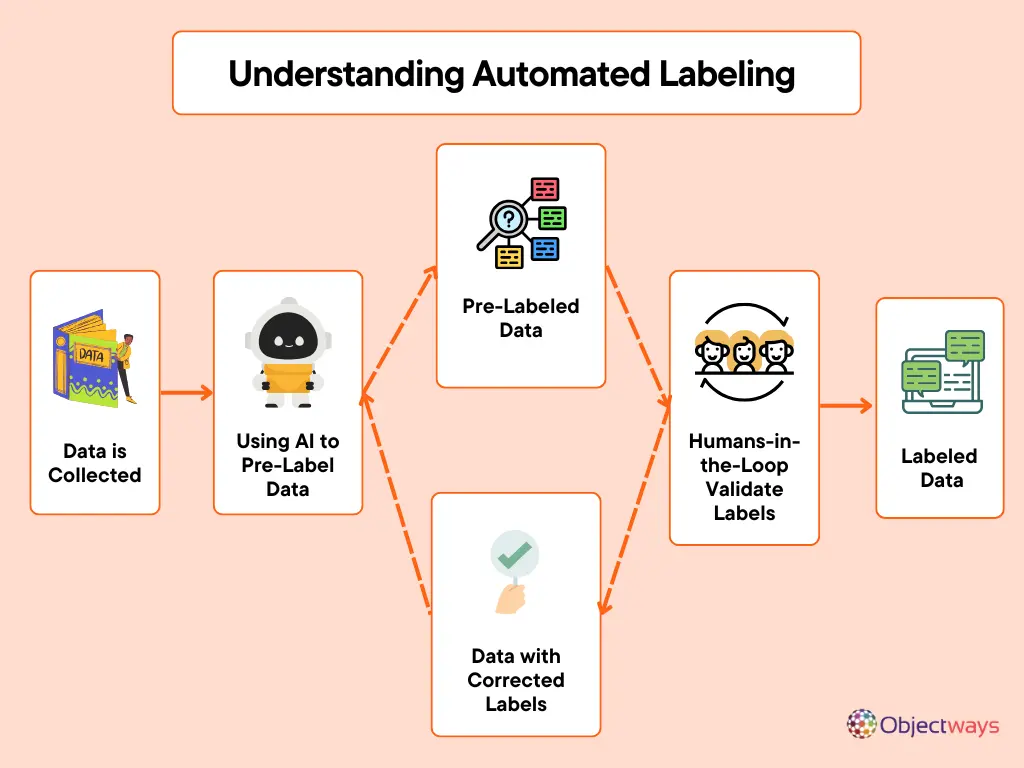

HITL data labeling is a process where machine learning systems and humans collaborate to annotate data. When it comes to computer vision, the machine typically takes the first pass, generating labels or tagging elements in an image, while the human steps in to review, correct, and refine. This collaboration reduces manual effort and also speeds up the whole labeling process.

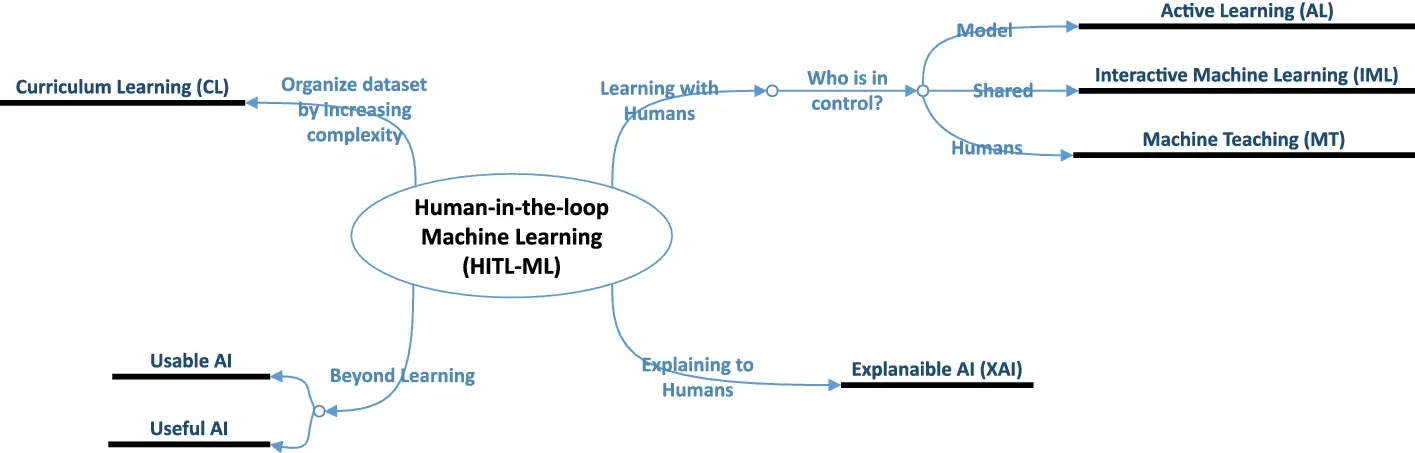

Different Roles of Humans-in-the-Loop in AI (Source)

Human-in-the-loop workflows become important in situations where the data is challenging to make sense of. Let’s say you're labeling thousands of street images for a self-driving car dataset. Automation (for the most part) can help draw boxes around stop signs or traffic lights. But what if a sign is bent, partly covered, or surrounded by clutter? A human can interpret the scene and make the right call, something AI tools still struggle to do reliably.
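One common way to decide when a human should step in is to route low-confidence predictions to review. The sketch below is illustrative only: the `Prediction` class, the `route` function, the file names, and the 0.8 threshold are all assumptions, not part of any specific tool, and many teams also spot-check the high-confidence items.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    image_id: str
    label: str
    confidence: float  # model score in [0, 1]


def route(predictions, threshold=0.8):
    """Split predictions into auto-accepted and needs-human-review lists.

    The threshold is a hypothetical value; in practice it would be tuned
    per project and per label class.
    """
    auto_accepted, needs_review = [], []
    for p in predictions:
        if p.confidence >= threshold:
            auto_accepted.append(p)
        else:
            needs_review.append(p)
    return auto_accepted, needs_review


preds = [
    Prediction("street_001.jpg", "stop sign", 0.97),
    Prediction("street_002.jpg", "stop sign", 0.41),  # e.g. a bent or covered sign
    Prediction("street_003.jpg", "traffic light", 0.88),
]
accepted, review = route(preds)
print([p.image_id for p in review])  # images a human should look at
```

With this setup, only the uncertain stop sign lands in the review queue, which is the kind of case where human judgment matters most.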

By combining automation with expert human oversight, we can maintain data quality even as data volume scales. Human review can stop minor errors from snowballing into more significant issues. Such methods are vital for applications like medical imaging or autonomous driving, where precision is non-negotiable.

A Pre-Label is One Step Closer to the Finish Line

There are many tools and techniques that can be used to automate or assist with data labeling. One such technique is pre-labeling. It uses machine learning to automatically generate initial annotations or pre-labels, like bounding boxes or masks, before a human annotator reviews and finalizes them.

It’s similar to prepping ingredients before cooking: if someone has already chopped the vegetables and marinated the food, you’ll spend less time in the kitchen. In the same way, pre-labeling uses algorithms to make the first move by drawing bounding boxes or suggesting segmentation masks based on model predictions. Then, a human annotator steps in to refine, adjust, or reject those suggestions, making sure the final labels are accurate.
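To make the accept, adjust, or reject step concrete, here is a minimal sketch of how a pre-label and its review outcome might be represented. The `PreLabel` class, the `review` function, the status names, and the box coordinates are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, replace
from typing import Optional, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


@dataclass(frozen=True)
class PreLabel:
    label: str
    box: Box
    status: str = "pending"  # pending -> accepted | adjusted | rejected


def review(pre_label: PreLabel, action: str, new_box: Optional[Box] = None) -> PreLabel:
    """Return a new PreLabel reflecting the annotator's decision."""
    if action == "accept":
        return replace(pre_label, status="accepted")
    if action == "adjust":
        if new_box is None:
            raise ValueError("adjust requires a corrected box")
        return replace(pre_label, box=new_box, status="adjusted")
    if action == "reject":
        return replace(pre_label, status="rejected")
    raise ValueError(f"unknown action: {action}")


# The model proposes a box; the annotator nudges its edges.
suggestion = PreLabel("vehicle", (40, 60, 210, 180))
final = review(suggestion, "adjust", new_box=(38, 58, 215, 184))
print(final)
```

Keeping the original suggestion immutable means the model's proposal and the human's final label can both be stored and compared later.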

Whether it’s generating bounding boxes for vehicles, outlining buildings in aerial imagery, or proposing object regions in medical scans, pre-labeling reduces manual effort. It makes it possible for annotation teams to work faster, iterate more quickly, and focus their attention on tricky edge cases instead of routine tasks.

An example of annotating an image. (Source)

How Autotagging Simplifies and Speeds Up Annotation

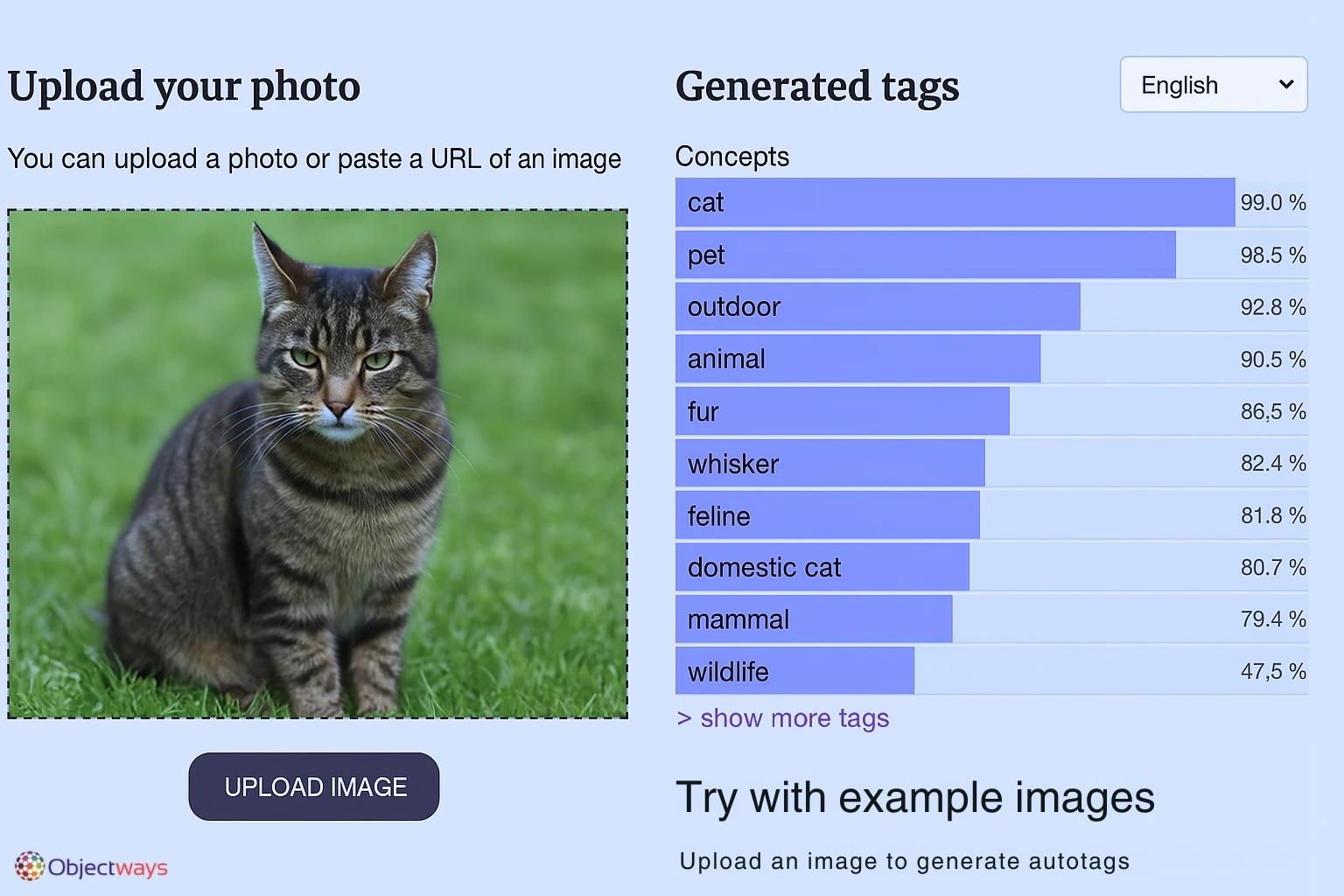

What if you had a tool that could automatically sort and organize your laundry? That would be pretty amazing. While we haven’t quite figured out how to automate laundry, in the world of AI, autotagging can help you handle and organize your data more efficiently. It uses AI to automatically suggest labels by identifying and classifying objects, not just in images, but across formats like text, video, or audio, wherever annotation is needed.

Autotagging is often used alongside pre-labeling, but the two play different roles. While pre-labeling outlines objects, like drawing boxes or masks, autotagging assigns broader tags or categories. For instance, pre-labeling might involve drawing a bounding box around a bicycle, while autotagging labels the image as “outdoor,” “bicycle,” or “traffic.” One focuses on where the object is, the other on what the scene represents.
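The difference shows up in how the two kinds of output might be stored. In the sketch below, image-level tags sit next to region-level boxes in the same record; the field names, file names, coordinates, and helper functions are assumptions made for illustration, not a standard schema.

```python
# Each record holds image-level tags (autotagging) and
# region-level boxes (pre-labeling) side by side.
records = [
    {
        "image": "ride_0042.jpg",
        "tags": ["outdoor", "bicycle", "traffic"],  # what the scene represents
        "regions": [
            {"label": "bicycle", "box": (120, 200, 380, 460)},  # where the object is
        ],
    },
    {"image": "desk_0007.jpg", "tags": ["indoor"], "regions": []},
]


def images_with_tag(records, tag):
    """Find images by scene-level tag."""
    return [r["image"] for r in records if tag in r["tags"]]


def boxes_for_label(records, label):
    """Collect (image, box) pairs for one object class."""
    return [
        (r["image"], region["box"])
        for r in records
        for region in r["regions"]
        if region["label"] == label
    ]


print(images_with_tag(records, "outdoor"))
print(boxes_for_label(records, "bicycle"))
```

Tags make it easy to filter or organize a dataset, while regions are what a detection or segmentation model actually trains on.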

An example of generating autotags for an image.

Here are some of the advantages of using autotags:

- Improves Consistency: AI models apply the same tagging rules across datasets, minimizing human error or inconsistency.

- Scales Easily: Useful for projects involving thousands (or millions) of items where manual tagging would be too slow.

- Speeds Up the Annotation Process: Automatically applies common labels, reducing manual effort and saving time.

- Point-and-Click Acceptance: Makes review faster and easier, since annotators can simply approve, adjust, or reject AI-generated tags with minimal effort.

Prompt-Based Segmentation Made Easy with Grounding SAM

Now that we have a better understanding of the concept of autotags and pre-labels, let’s take a closer look at a computer vision model that can be used to create pre-labels.

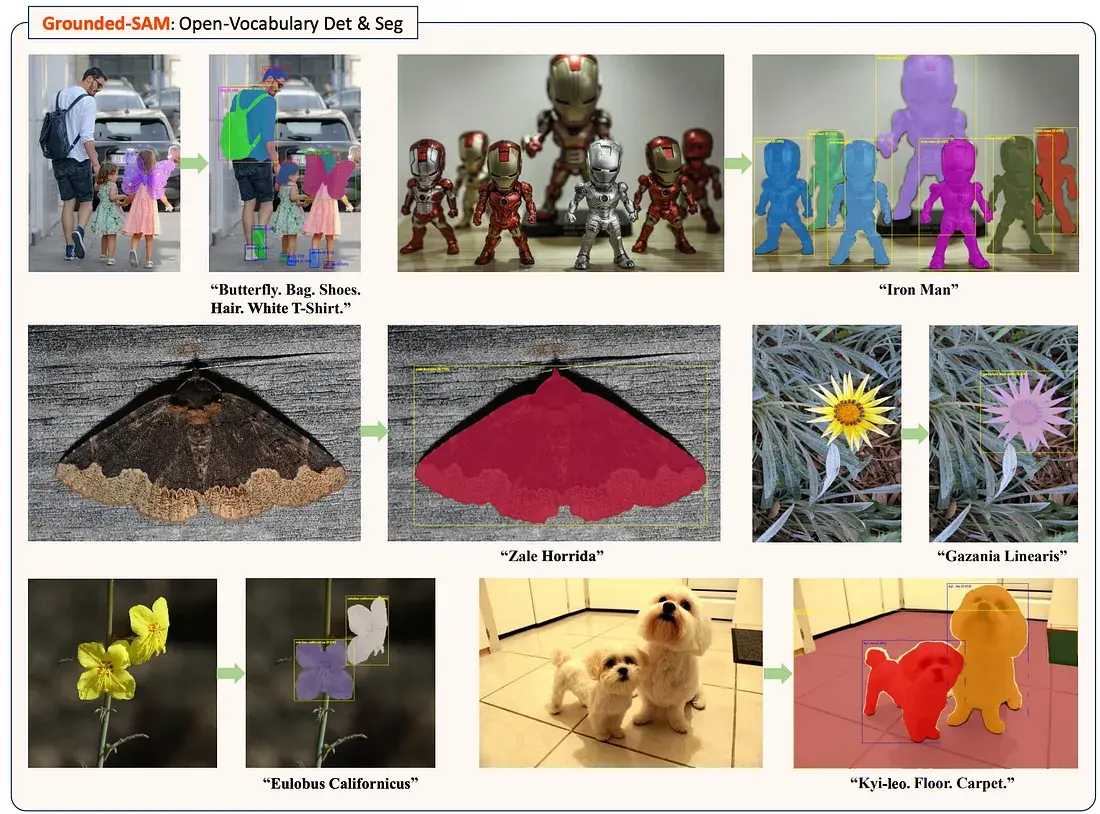

The Segment Anything Model (SAM) is an AI model that performs segmentation: it identifies and outlines different parts of an image using segmentation masks. When it's combined with Grounding DINO, a model that can find objects in images based on text prompts, even if it hasn't seen them before (a technique known as zero-shot detection), you get Grounding SAM.

Grounding SAM is used across many applications, and one of the most practical is automating the image data annotation process. It works like a smart assistant that helps you find exactly what you're looking for in an image.

If you give it a prompt like “Iron Man” or “butterfly,” it can quickly detect and highlight those objects. Then human annotators can step in to review, adjust, and finalize the labels. This collaboration keeps the process efficient without sacrificing accuracy.
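The two-stage idea (a text prompt produces boxes, and each box produces a mask) can be sketched without loading any real model. In the example below, `fake_detect` and `fake_segment` are hypothetical stand-ins for a text-prompted detector and a box-prompted segmenter; they are not the actual Grounding DINO or SAM APIs, and the stand-in segmenter simply fills the box.

```python
from typing import Callable, Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), inclusive
Mask = List[List[int]]           # 1 = object pixel, 0 = background
Image = List[List[int]]          # placeholder for real pixel data


def prompt_to_pre_labels(
    image: Image,
    prompt: str,
    detect: Callable[[Image, str], List[Box]],
    segment: Callable[[Image, Box], Mask],
) -> List[Dict]:
    """Stage 1: the detector turns a text prompt into boxes.
    Stage 2: the segmenter turns each box into a mask."""
    return [
        {"label": prompt, "box": box, "mask": segment(image, box)}
        for box in detect(image, prompt)
    ]


def fake_detect(image: Image, prompt: str) -> List[Box]:
    # Stand-in: pretends one butterfly was found at a fixed location.
    return [(1, 1, 3, 2)] if prompt == "butterfly" else []


def fake_segment(image: Image, box: Box) -> Mask:
    # Stand-in: marks every pixel inside the box as the object.
    x0, y0, x1, y1 = box
    return [
        [1 if x0 <= x <= x1 and y0 <= y <= y1 else 0 for x in range(len(image[0]))]
        for y in range(len(image))
    ]


image = [[0] * 5 for _ in range(4)]  # tiny 5x4 dummy image
pre_labels = prompt_to_pre_labels(image, "butterfly", fake_detect, fake_segment)
for row in pre_labels[0]["mask"]:
    print(row)
```

A real segmenter would return a mask that follows the object's outline rather than the whole box, but the hand-off between the two stages has the same shape.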

Using Grounding SAM to outline objects in an image based on a single text prompt. (Source)

Striking the Right Balance Between Speed and Accuracy

Despite their advantages, models like Grounding SAM have limitations, just like any other cutting-edge technology. For example, autopilot systems in planes are fascinating and work well, but there is a reason why pilots are always present: when it’s time to take over in an emergency, human pilots are needed.

Automation in data labeling works the same way. It takes a lot of pressure off human annotators, but humans-in-the-loop are a necessity for fixing errors and ensuring quality. That’s where quality review mechanisms come in, ensuring AI models don’t just move fast but move in the right direction.

These systems work best when they catch errors early. A quick checkpoint right after the AI model suggests a label or segmentation mask can prevent small issues from snowballing into larger ones. Then comes the feedback loop, the engine behind smarter automation. Every correction a human makes becomes a lesson for the AI model. Over time, the AI model stops making the same errors and begins suggesting labels that need fewer tweaks.
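One way to track whether suggestions are needing fewer tweaks is to compare each suggested box with the human-corrected one. The sketch below uses intersection over union (IoU), a standard overlap measure, for that purpose. Using IoU here is an assumption rather than something a particular tool prescribes, and the `corrections` log and its values are made up for illustration.

```python
def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ax0, ay0, ax1, ay1 = a
    bx0, by0, bx1, by1 = b
    inter_w = max(0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h
    union = (ax1 - ax0) * (ay1 - ay0) + (bx1 - bx0) * (by1 - by0) - inter
    return inter / union if union else 0.0


# Hypothetical review log: what the model suggested vs. what the human kept.
corrections = [
    {"suggested": (0, 0, 100, 100), "corrected": (0, 0, 100, 100)},   # accepted as-is
    {"suggested": (0, 0, 100, 100), "corrected": (50, 0, 150, 100)},  # shifted right
]

scores = [iou(c["suggested"], c["corrected"]) for c in corrections]

# Anything the human had to change is a candidate example for retraining.
retraining_examples = [c for c, s in zip(corrections, scores) if s < 1.0]

print(scores)
print(len(retraining_examples))
```

If the average overlap rises across review batches, that is a sign the feedback loop is working and the model's suggestions are getting closer to what annotators would draw.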

For this system to work, human annotators require a clear rulebook. Well-defined guidelines and proper training are essential for handling edge cases, like blurry images, poor lighting, or overlapping objects.

Breaking Down the Annotation Workflow

Next, let’s walk through an automated annotation process with humans-in-the-loop and Grounding SAM from start to finish to get a more complete picture.

It begins with collecting raw data (also known as data sourcing) like images, video frames, or other visual content that needs to be labeled for tasks like object detection or segmentation. Grounding SAM is then used to generate initial labels by responding to prompts (like “car” or “tree”) and identifying those objects in the image. Alongside this, autotagging tools help by adding broader context-based labels such as “outdoor” or “nighttime,” enriching the dataset beyond just object boundaries.

After this first automated pass, human annotators can step in to review and refine the output. They can validate what the model got right, fix what it missed, and ensure the annotations are accurate and consistent. This human-in-the-loop approach strikes a balance between speed and precision: automation handles the bulk of the work, while humans ensure quality.

Building and managing this kind of data labeling workflow at scale can be complex. That’s where Objectways can come in; we specialize in high-quality data labeling, sourcing, and AI support to help teams move faster and smarter. With flexible solutions tailored to your project’s needs, Objectways makes it easier to build accurate, scalable AI systems.

Smarter Data Labeling Starts with the Right Process

Labeling data is one of the most important steps in building good AI models, but it can also be one of the most time-consuming. That's why combining automation tools and human checks makes a huge difference.

Techniques like pre-labeling, autotagging, and Grounding SAM can help speed up the process by giving human annotators a head start. With the right balance of automation and human reviews, teams can label data faster without sacrificing quality, getting AI projects off the ground more efficiently and reliably.

At Objectways, we’re dedicated to delivering high-quality data labeling that sets your AI models up for real-world success. Contact us today and take your AI projects to the next level.

Frequently Asked Questions

- What is a segmentation mask in AI?

- A segmentation mask is a pixel-level map that marks the shape and boundaries of an object in an image. Instead of just marking where an object is, it outlines its exact form, making visual data more detailed and useful for AI training.

- What does Grounding SAM do?

- Grounding SAM combines Grounding DINO, which finds objects in an image from a text prompt, with SAM, which outlines them. Together, they identify and segment objects based on natural language prompts, speeding up annotation and reducing the need for manual outlining.

- How does autotagging help in annotation?

- Autotagging automatically suggests relevant labels for content using AI. It simplifies the annotation process by reducing manual effort, allowing human annotators to review and finalize tags more quickly.

- What is pre-labeling in data annotation?

- Pre-labeling refers to the process where an AI model generates initial annotations, like bounding boxes or segmentation masks, before a human refines them. It accelerates workflows by giving annotators a solid starting point.