There was a time when the word “robot” made people think of The Terminator and other futuristic ideas from science fiction. Today, however, robots are becoming an integral part of the places where we live and work. From performing quality checks in factories to assisting surgeons with delicate procedures, they now come equipped with a wide range of advanced capabilities.

As robots take on more complex tasks, their design and intelligence keep improving to meet real-world needs. Thanks to artificial intelligence (AI), robotics engineering, and computer vision, they can now recognize objects, understand their surroundings, and interact with people in more human-like ways.

As a result of these abilities, AI-powered robots are being used in almost every major industry. In factories alone, more than four million industrial robots are now at work around the world.

In this article, we’ll look at how AI helps robots make smarter decisions in everyday situations. We’ll also explore how combining different sensors, a process known as sensor fusion, helps them better understand the world around them. Let’s get started!

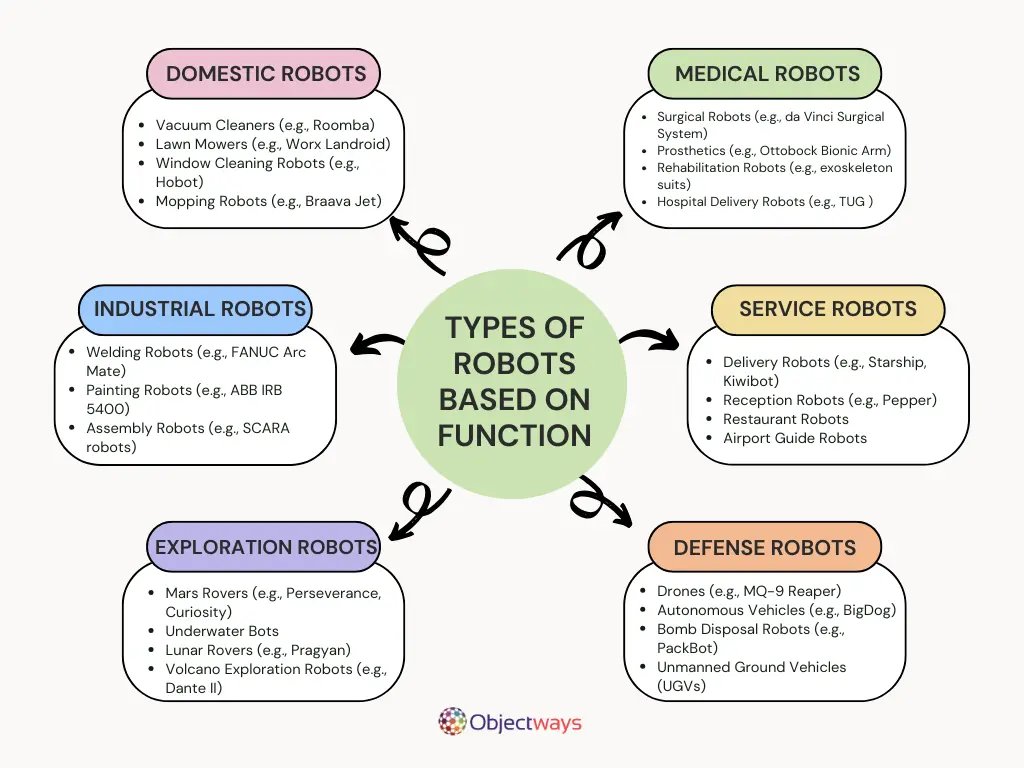

Different Types of Robots

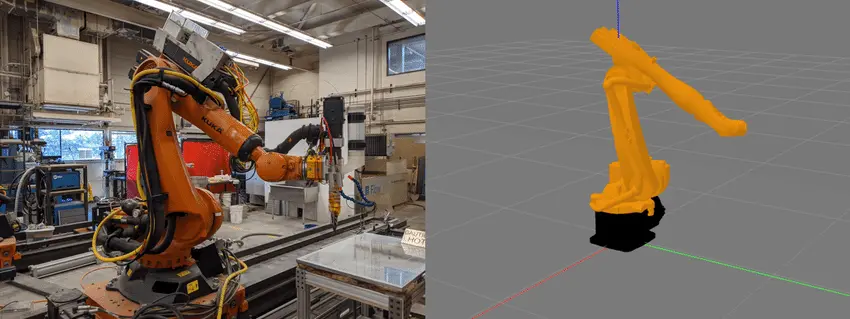

Robotics engineers are pushing the boundaries of the field by improving materials, actuators, control algorithms, and embedded computing systems. For example, lighter and more agile robotic arms can operate more safely in shared spaces and use less energy in dynamic setups. Such improvements also make robots more precise and better able to adapt to the conditions they work in.

An ultra-lightweight robotic arm showcased at CES 2025 is a good example of this technology. It was built to handle industrial tasks while adapting easily to different environments. Made with lightweight materials and designed for precise, smooth movement, this type of robot is well suited to manufacturing and service jobs, and can even help around the home.

Ultra-Lightweight Robotic Arm at CES 2025. (Source)

The onboard systems that control these robots are also advancing. New embedded processors help them process input data faster and react in real time. Some research teams are also using AI to optimize the control code that guides their movements and actions. For instance, at UC Berkeley, robots are learning to put together IKEA furniture. This may sound simple, but assembling IKEA furniture involves a mix of planning, coordination, and adaptability, making it a tough challenge for robots.

For robots to work safely in complex environments, they need to understand what’s happening around them. One way they do this is through sensor fusion, which combines data from different sensors, such as cameras and motion trackers, to build a clearer, more accurate picture of their surroundings.

For instance, NVIDIA’s DRIVE technology uses this method to assist autonomous vehicles. It collects and processes data from many sensors, like LiDAR and cameras, to make data-driven decisions in challenging conditions like traffic and weather.
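To make the idea of sensor fusion more concrete, here is a minimal sketch of one of its simplest forms: combining two independent, noisy readings of the same quantity by weighting each one by the inverse of its variance. This is the same arithmetic as the update step of a one-dimensional Kalman filter. It is not how NVIDIA DRIVE or any specific product works; the `Estimate` type, the `fuse` function, and the sensor readings are all hypothetical and chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    value: float     # estimated quantity, e.g. distance to an obstacle in metres
    variance: float  # uncertainty of the estimate (lower = more trusted)


def fuse(a: Estimate, b: Estimate) -> Estimate:
    """Combine two independent noisy estimates of the same quantity.

    Each reading is weighted by the inverse of its variance, so the more
    precise sensor pulls the result towards its value. The fused variance
    is always smaller than either input variance.
    """
    if a.variance <= 0 or b.variance <= 0:
        raise ValueError("variances must be positive")
    w_a = 1.0 / a.variance
    w_b = 1.0 / b.variance
    value = (w_a * a.value + w_b * b.value) / (w_a + w_b)
    variance = 1.0 / (w_a + w_b)
    return Estimate(value, variance)


# Hypothetical readings: a camera-based depth estimate and a LiDAR-style range sensor
camera = Estimate(value=2.10, variance=0.04)
rangefinder = Estimate(value=2.00, variance=0.01)
fused = fuse(camera, rangefinder)
print(f"{fused.value:.3f} m, variance {fused.variance:.4f}")
```

Because the range sensor is assumed to be four times more precise, the fused distance (about 2.02 m) lands much closer to its reading, and the combined uncertainty is lower than that of either sensor on its own. Real systems fuse many sensors over time and must also handle calibration and timing, but the principle of trusting precise sensors more is the same.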

The same approach is being used in smaller robots, too. At CES 2025, Samsung showcased the latest version of Ballie, a compact home robot. Ballie can learn the layout of your home, follow voice commands, and even project useful information onto walls or tables.

Robots are also helping scientists study the natural environment. RoboTurtle, a robot made by Beatbot, can move on land and swim underwater like a real turtle. It uses sound waves, cameras, and pressure sensors to explore hard-to-reach underwater areas.

The RoboTurtle uses data from different sensors to navigate underwater. (Source)
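Sensing with sound waves usually relies on echo ranging: the robot sends out a pulse and measures how long the echo takes to return. The sketch below shows that general principle only; the article does not describe how RoboTurtle processes its sensor data, and the `echo_distance` function and the approximate speed of sound in water (about 1,500 m/s, which in reality varies with temperature, salinity, and depth) are assumptions made for illustration.

```python
# Approximate speed of sound in seawater; the real value varies with
# temperature, salinity, and depth, so this is an assumption.
SPEED_OF_SOUND_WATER_M_S = 1500.0


def echo_distance(round_trip_s: float, speed_m_s: float = SPEED_OF_SOUND_WATER_M_S) -> float:
    """Estimate the distance to a reflecting object from a sound pulse's round-trip time."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the object and back, so halve the total path length.
    return speed_m_s * round_trip_s / 2.0


# A hypothetical echo returning after 4 milliseconds
print(echo_distance(0.004))
```

An echo that returns after 4 ms corresponds to an object roughly 3 m away. In practice, a reading like this would be one input among several, combined with camera and pressure data to decide where the robot can safely move.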

Such AI-driven robotic systems depend on high-quality data that represents a variety of real-world situations. At Objectways, we work with robotics teams to build the datasets behind these systems. By sourcing and annotating multi-sensor data, we help develop models that can handle complex inputs and operate safely in environments like factories, roads, and warehouses.

Many of today’s robots are designed to work directly alongside people, and some even have a humanoid appearance to make that interaction more natural. Robots built to share a workspace safely with humans are known as collaborative robots, or cobots. They use advanced sensors to gather data about their environment, which helps them navigate, detect motion, adapt to changes, and safely carry out tasks, especially ones that might be difficult or dangerous for people.
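One common idea behind cobot safety is speed and separation monitoring: the robot slows down as a person gets closer and stops if they come too near. The function below is a minimal sketch of that idea, assuming a simple linear slowdown between two distance thresholds. The function name and threshold values are hypothetical placeholders; real cobot safety functions are certified and follow standards such as ISO/TS 15066, which use more detailed calculations.

```python
def speed_scale(
    separation_m: float,
    stop_distance_m: float = 0.5,        # hypothetical: stop if a person is closer than this
    full_speed_distance_m: float = 2.0,  # hypothetical: full speed if nobody is within this range
) -> float:
    """Return a speed multiplier in [0, 1] based on the distance to the nearest person.

    Thresholds are illustrative placeholders, not values taken from any safety standard.
    """
    if stop_distance_m >= full_speed_distance_m:
        raise ValueError("stop distance must be smaller than full-speed distance")
    if separation_m <= stop_distance_m:
        return 0.0  # person too close: stop the robot
    if separation_m >= full_speed_distance_m:
        return 1.0  # workspace clear: run at the full programmed speed
    # In between: slow down linearly as the person approaches
    return (separation_m - stop_distance_m) / (full_speed_distance_m - stop_distance_m)


# A person detected 1.25 m away halves the robot's speed under these thresholds
print(speed_scale(1.25))
```

A linear ramp keeps the behavior easy to reason about: the robot never jumps abruptly from full speed to a stop, and the distance input can come from whatever combination of sensors the cobot uses to detect people.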

Cobots are especially useful in manufacturing and logistics. They can help with heavy lifting, hand over tools, or perform quality checks. On assembly lines, they can assist workers by taking on repetitive or physically tiring work. This kind of human-robot collaboration can boost productivity and create safer, more efficient workplaces.

As industries look for more flexible tools, robots are also becoming a vital part of logistics operations. Digit, a humanoid robot built by Agility Robotics, is a good example. Amazon has been testing Digit robots alongside other units, such as shelf-moving robots, to support package sorting and transport. These robots share warehouse space with human workers and help manage the speed and scale of distribution.

Humanoid Digit Robots Performing Tasks in an Amazon Warehouse. (Source)

Industry 4.0 describes the way smart factories and warehouses now operate. They use AI and robotics to automate processes, speed up operations, and make decisions based on real-time data.

Here are some of the ways robotics engineering is driving Industry 4.0:

An Industrial Robot (Left) and its Digital Twin in a Virtual Setting (Right). (Source)

In the future, robotics will likely move far beyond factory floors and controlled environments. Innovative systems are being built to handle messy, unpredictable, and human-centered spaces. Whether they are assisting farmers or supporting healthcare workers, these robots can adapt, learn, and respond in ways that earlier machines could not.

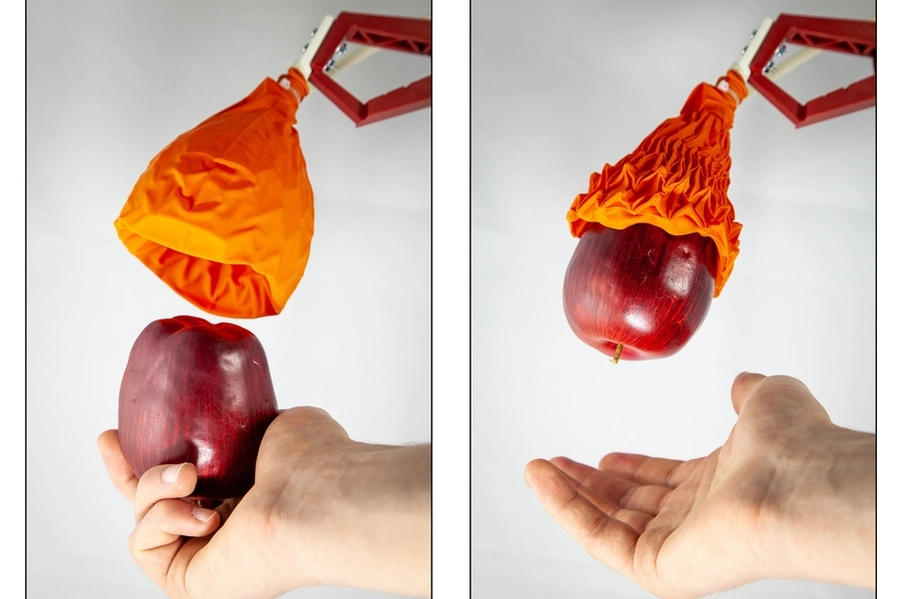

One of the most promising areas is soft robotics, in which robots are built from flexible materials rather than hard metal. Soft grippers like those developed at MIT, for example, can safely pick up fragile biological samples or irregularly shaped items on food packaging lines.

MIT’s Robotic Arm with Soft Grippers Picking Up an Apple. (Source)

The robotics engineering field is expected to grow in new and exciting directions. For example, swarm robotics, inspired by how ants or bees work together, is being used in Australia, where small robots team up to plant and spray crops more efficiently.

Another example is the use of wearable robots in physically demanding jobs. AI-powered exoskeletons help people lift, walk, or stand for longer periods with less strain. Robots are also becoming more common in areas like elder care, environmental monitoring, and remote maintenance.

As robots take on more complex roles across industries and public spaces, they also bring about new questions. Some of these are about how robots work. Others are about how we use them, who is responsible for them, and how we can make sure they are used fairly and safely. These challenges are gaining the attention of both technical experts and the broader robotics engineering and AI communities.

Here are some key considerations being discussed in the robotics space:

Robotics engineering and AI are steadily becoming part of connected systems that help industries solve real-world problems more accurately and efficiently. From factory floors to farmlands and hospitals, robots are now being adopted into workflows that require real-time awareness and data-driven decision-making.

As these systems become more advanced, tools like AI can help robots adapt to situations that are difficult to predict. At the same time, methods like sensor fusion allow them to gather and process data from their surroundings in a way that feels more complete and more human-like.

The future of robotics doesn’t depend on technology alone. It also depends on how carefully we build these systems, how well we train them, and how responsibly we use the data that drives them.

At Objectways, we help bring the future of robotics to life. Our team of experts provides detailed, high-quality annotated datasets that help robots detect objects, understand spatial layouts, and navigate the world more safely.

We work with LiDAR, camera feeds, and motion data to support robotics teams in industries like logistics, manufacturing, and autonomous driving. Beyond data annotation, we also offer end-to-end AI development services, helping clients build, train, and deploy intelligent systems from start to finish.

Ready to build smarter robotic systems? We’re here to power your vision with the high-quality data and AI expertise you need. Let’s talk – book a call with us today.